In its report on the impact of generative AI sites on an organization’s security posture, Menlo Security found that between June 2023 and December 2023, visits to Gen AI sites (e.g., ChatGPT, Google Gemini, Microsoft Bing, Perplexity, Grammarly and Quillbot) increased by more than 100%, and the number of users rose by 64%. “Because of hybrid work, the browser is now the first line of defense like the firewall used to be,” said Andrew Harding, VP Security Strategy at Menlo Security.

Generative AI (Gen AI), the technology megatrend that kicked off in late 2022 and shows no signs of abating, is now integrated into the applications knowledge workers use every day – e.g., unified communications and collaboration (UC&C), contact center (and CCaaS), CRM, and enterprise workflow. Before Gen AI was built into those apps, it was freely available via the ubiquitous Internet browser – and it still is, even though many Gen AI sites now offer premium plans with higher usage limits. Similarly, the Gen AI features in UCaaS and other business apps are generally reserved for paying users.

But the point is this: Gen AI is everywhere and easy to access. Moreover, there is an incentive for knowledge workers to use those tools because mounting evidence suggests that Gen AI does indeed help knowledge workers “automate away” some aspects of their work. According to J. P. Gownder, vice president, principal analyst with Forrester, “It is possible to measure the gains associated with Gen AI. It doesn’t take very long to generate a return on that investment, but that isn’t a true ROI because it’s not balancing against dollars spent. At this point Gen AI is more about removing the drudgery associated with knowledge work.” And by knowledge work, Gownder is referring to the work product of the approximately 90 million U.S. workers who “sit behind desks” for the majority of their workday – those in business and financial operations occupations, management, computer and mathematical jobs, legal occupations, office and administrative support roles, etc. (Forrester uses the occupation data and categories provided by the U.S. Bureau of Labor Statistics for its knowledge worker estimates.)

So if the benefits of Gen AI tools are real – and only a quick copy/paste or file upload away – then many knowledge workers will use them. And that means “we have to think about what it means to defend the browser and do that without impinging on productivity and in a way that enables folks to use new tools securely,” Harding said.

Just Browsing, Thanks

Rik Turner, Senior Principal Analyst with Omdia, would agree. Writing in his report Developments in Browser Security: From Isolation to Enterprise Browsers, Turner said that the browser “is the tool that an organization’s employees use to ‘see out,’ not only onto the [Web] but also, and increasingly, into the applications that they use to do their jobs.” This includes software as a service (SaaS) applications, as well as private apps “that are housed in infrastructure- or platform-as-a-service (IaaS or PaaS) environments and developed by their employer to enable specific functionality relevant to its business.”

If a threat actor can compromise the browser, they can potentially hack into what Turner calls the “brain and accumulated knowledge of an organization.” He cited a laundry list of attacks against browsers: man-in-the-browser (MitB) attacks, which act like a Trojan horse and learn about users’ Web destinations; boy-in-the-browser (BitB) attacks, another type of malware; SQL injections; and malicious browser plug-ins. Other exploits go after Web applications or Web sites and thus use the browser to deliver a malicious payload.

Menlo’s Harding cited a 2023 attack in which a proxy posing as ChatGPT duped users into divulging personally identifiable information (PII). Similarly, Turner mentioned Samsung’s ban on generative AI tools, which followed a leak of its intellectual property (IP) after someone pasted internal information into ChatGPT.

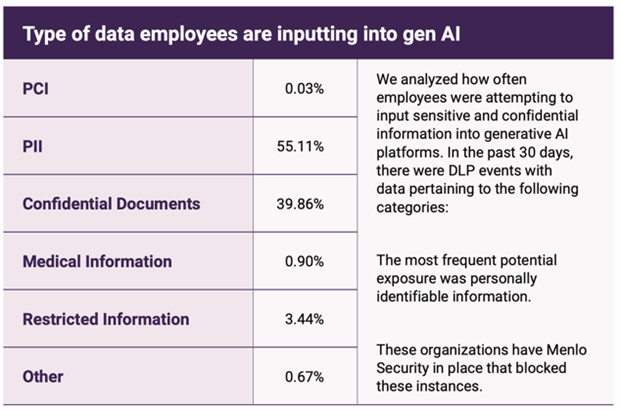

And while Menlo’s report found a 6% decrease in copy/paste events, it also found an 80% increase in file uploads, largely because Gen AI sites have made that capability available. As the following table shows, the information most frequently entered into Gen AI sites was PII, followed by confidential documents. Clearly, these activities could result in data loss or exfiltration, with consequences ranging from identity theft and credit card fraud to the loss of corporate IP.

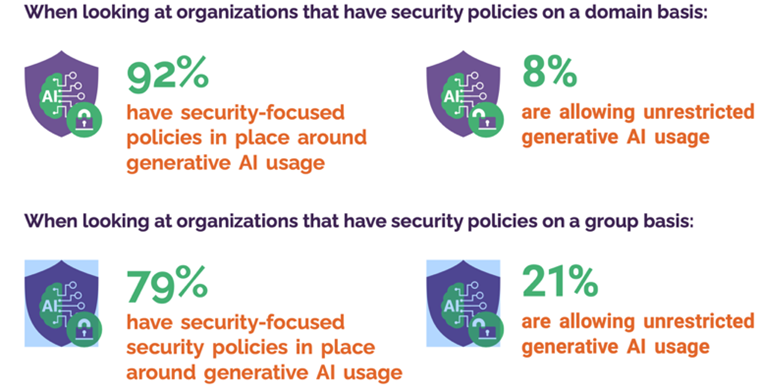

Between its June 2023 and December 2023 studies, Menlo found a 26% increase in security policies governing the use of Gen AI sites. (Menlo surveyed the same 500 companies for both studies.)

The attempted data loss events noted above occurred “due to an increase in PII and confidential document upload attempts, despite more controls being put in place – and Menlo was able to block these attempts,” Harding said.

As the following graphic shows, most of the surveyed organizations have security policies in place at either the domain level (specific sites) or the group level. Group-level policies apply controls to every site categorized as a Gen AI tool. “The grouping approach delegates management of the categorization to the secure cloud browsing policy engine and helps enterprises get ahead of applying controls without knowing every tool that might be in use,” Harding said.
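To make the domain-versus-group distinction concrete, the following Python sketch shows one way a policy engine could resolve which controls apply to a URL. It is a hypothetical illustration, not Menlo’s implementation: the `SITE_CATEGORIES` feed, the rule dictionaries and the `policy_for` function are all assumptions made for the example.

```python
from urllib.parse import urlsplit

# Hypothetical categorization feed; a real product would get this from a
# vendor-maintained classification service rather than a hard-coded dict.
SITE_CATEGORIES = {
    "chatgpt.com": "genai",
    "gemini.google.com": "genai",
    "www.perplexity.ai": "genai",
    "new-ai-tool.example": "genai",  # hypothetical tool nobody has listed yet
    "www.salesforce.com": "crm",
}

ALLOW_ALL = {"paste": True, "upload": True}
BLOCK_INPUT = {"paste": False, "upload": False}

# Domain-level rules name individual sites; group-level rules name a category.
DOMAIN_RULES = {"chatgpt.com": BLOCK_INPUT, "gemini.google.com": BLOCK_INPUT}
GROUP_RULES = {"genai": BLOCK_INPUT}


def policy_for(url: str, domain_rules: dict, group_rules: dict) -> dict:
    """Return the controls for a URL: domain rule first, then category rule."""
    host = (urlsplit(url).hostname or "").lower()
    if host in domain_rules:
        return domain_rules[host]
    category = SITE_CATEGORIES.get(host)  # None if the site is uncategorized
    if category in group_rules:
        return group_rules[category]
    return ALLOW_ALL


# With domain rules alone, a Gen AI site missing from the list slips through...
print(policy_for("https://new-ai-tool.example/chat", DOMAIN_RULES, {}))
# {'paste': True, 'upload': True}
# ...but a group rule covers it as soon as the feed categorizes it.
print(policy_for("https://new-ai-tool.example/chat", DOMAIN_RULES, GROUP_RULES))
# {'paste': False, 'upload': False}
```

The design point is the fallback order: an explicit domain rule wins, and otherwise the category rule applies, so a newly categorized Gen AI site is covered without anyone adding it to a list by hand.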

Menlo’s report recommends implementing policy management at the generative AI group level to protect against a broader cross-section of generative AI sites. Harding also pitched the capabilities of his company’s products. “We can make sure that the tool that you intend to use is the tool you're using. We can also control what the browser is able to do. We can lock down copy/paste and file uploads and then we can mask what comes out of any Web-based tool.”

Masking refers to preventing sensitive information from reaching a computing endpoint. “If someone’s working at home and is using, for example, a privately deployed tool with a Web interface, we could mask the sensitive information so while they still see it, it doesn’t land on their home machine,” Harding said.

Options for Securing the Browser

According to Omdia’s Turner, there are several ways to secure the browser – ways that apply to the entire Internet, not just Gen AI sites. Turner noted that the high-profile data leaks cited above (and others) are an opportunity for browser security companies “to broaden the visibility of their products by saying ‘we can stop [it] because [we] will know what site [employees go to] and we [can] impose restrictions on what can go into that site.’”

Turner said there are three approaches to securing the browser: remote browser isolation (RBI), secure enterprise browsers and browser extensions. (A fourth, local browser isolation, predated these three, but the only company offering it is now defunct.)

Remote Browser Isolation

Menlo Security got its start in remote browser isolation. Pointing to the company’s recent announcements, Harding said that Menlo’s solution “transcends generation-one RBI with cloud-driven capabilities.”

Omdia’s Turner described RBI as “pushing pixels”: the Web content runs in a remote environment and only a rendered image is sent to the user’s device, so any malicious data that might be embedded in the Web app can’t get to the laptop OS or BIOS. Once the user closes the session, nothing remains on the laptop, and any data in the cloud is also cleared. In his report, Turner wrote that RBI is delivered “as a service.” It also tends to be bandwidth hungry and can be expensive because pixel pushing is resource intensive.
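A rough back-of-the-envelope calculation shows why pixel pushing is expensive. Assuming, for illustration only, a 1920 × 1080 display, 24-bit color and 30 frames per second, sending raw, uncompressed frames would require:

$$
\underbrace{1920 \times 1080}_{\text{pixels/frame}} \times 24\ \tfrac{\text{bits}}{\text{pixel}} \times 30\ \tfrac{\text{frames}}{\text{s}} = 1{,}492{,}992{,}000\ \tfrac{\text{bits}}{\text{s}} \approx 1.5\ \text{Gbit/s}
$$

Real RBI services compress the stream, so actual bandwidth is far lower, but the raw figure illustrates why the approach is resource intensive.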

Enterprise Browser

An enterprise browser is Chromium-based and installed on a company’s computers. Chromium is an open source project developed and maintained by Google. Many browsers are based on Chromium, including Microsoft Edge, Google Chrome, Brave, Opera and more. (Firefox and Safari do not use Chromium.)

According to Turner’s report, enterprise browser solution providers add security-specific features to the base Chromium source code. By using an enterprise browser, a company has total control over its employees’ browsing. For example, the company could uninstall the pre-installed browsers on its computers or simply prevent their use. It could allow consumer-grade browsers but prevent them from being used with certain enterprise data sources (e.g., Salesforce CRM) or sites. Or it could require that the enterprise browser be used. An enterprise browser can also restrict copy/paste and file uploads, which would mitigate the threats noted in Menlo’s report.

As Turner wrote in his report, one downside to the enterprise browser approach is that the company is paying for something it gets for “free” with the purchase of a computer or via a download. But for highly regulated industries, or others with stringent data security requirements, the expense might be worth it. Island, Talon (owned by Palo Alto Networks) and Surf Security all provide enterprise browser solutions.

Browser Extensions

The third option, browser extensions, will be familiar to most people: it is the same technology that underpins the ad blockers and other add-ons many use every day in consumer-grade browsers, built on the standardized Chromium extension framework. Per Turner’s report, several companies (Seraphic Security, LayerX, Xayn) offer browser extensions. This approach enables the injection of “lightweight JavaScript that enables the security and manageability that is missing from standard browser technology.”

According to Turner, this approach should simplify deployment and prove less expensive overall, though he also notes that with extensions, content is still downloaded and rendered on the computer, which could be problematic. Perhaps worse still are the changes Google is making to its extension platform with Manifest V3. These changes, notes Turner, “will make life more difficult for the browser extension camp as a whole, if it does not render its technology outright inoperable.”

What Menlo’s Doing Now

According to Harding, Menlo offers a secure cloud browser that runs in the cloud on the user’s behalf. Setting aside the considerable technical details, the cloud-based browser interacts securely with the user’s local browser to redact and/or watermark what goes down to the local browser.

“By redacting, we can make sure the user only sees a subset of the information they need to do their work,” Harding said. “If they need [more], watermarking [might be] appropriate – with it we can mark documents including PDFs and web content so if someone does leak information accidentally or on purpose, we know who did it.”
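The following Python sketch illustrates the two ideas Harding describes: redacting sensitive fields before content reaches the local browser, and tagging each delivered copy so a leak can be traced back to a user. Everything here is a hypothetical assumption, including the regex detectors, `WATERMARK_KEY` and the text tag itself; commercial products overlay watermarks on rendered pages and PDFs, whereas a plain-text tag like this one could simply be deleted by a leaker.

```python
import hashlib
import hmac
import re
from typing import Iterable, Optional

# Hypothetical signing key held by the cloud browser, never sent to the endpoint.
WATERMARK_KEY = b"replace-with-a-real-secret"

# Illustrative detectors only; production DLP engines use far richer rules.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each sensitive match with a labeled placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label} REDACTED]", text)
    return text


def _tag(user_id: str, doc_id: str) -> str:
    """Keyed tag binding a document to a user; unforgeable without the key."""
    msg = f"{user_id}:{doc_id}".encode()
    return hmac.new(WATERMARK_KEY, msg, hashlib.sha256).hexdigest()[:16]


def watermark(text: str, user_id: str, doc_id: str) -> str:
    """Append a per-user attribution mark to the content sent downstream."""
    return f"{text}\n[wm:{_tag(user_id, doc_id)}]"


def identify_leaker(leaked: str, doc_id: str, users: Iterable[str]) -> Optional[str]:
    """Recompute each user's tag to find whose copy the leaked text came from."""
    for user_id in users:
        if f"[wm:{_tag(user_id, doc_id)}]" in leaked:
            return user_id
    return None


original = "Contact alice@example.com, SSN 123-45-6789, re: Q3 product spec."
sent_to_bob = watermark(redact(original), "bob", "spec-42")
print(sent_to_bob.splitlines()[0])
# Contact [EMAIL REDACTED], SSN [SSN REDACTED], re: Q3 product spec.
print(identify_leaker(sent_to_bob, "spec-42", ["alice", "bob", "carol"]))  # bob
```

Using a keyed hash (HMAC) rather than printing the user’s name means one user cannot forge a tag that implicates another without the server-side key.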

Omdia’s Turner described it this way: “Menlo is doing the heavy lifting of securing traffic to and from the browser up in the cloud and they are [sending] through only a subset of the traffic to the browser where there will also be policy being enforced by the extension.”

Harding said further that Menlo’s product can ensure that the tool employees intend to use is the tool they, in fact, use – which helps defeat the proxy attack cited earlier. The product can also block users from pasting into a Web site or uploading files.
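One piece of making sure “the tool you intend to use is the tool you’re using” is checking the destination precisely rather than loosely. The Python sketch below contrasts a naive substring test, which a lookalike proxy can easily pass, with an exact match on the parsed hostname over HTTPS. The allowlist and the lookalike URLs are illustrative assumptions; this is not a description of how Menlo’s product works, and real products layer additional checks on top.

```python
from urllib.parse import urlsplit

# Illustrative allowlist of hosts the organization has sanctioned.
SANCTIONED_HOSTS = {"chatgpt.com", "chat.openai.com"}


def naive_check(url: str) -> bool:
    """Substring match: exactly the kind of test a lookalike proxy can pass."""
    return "chatgpt" in url.lower()


def strict_check(url: str) -> bool:
    """Require HTTPS and an exact match on the parsed (lowercased) hostname."""
    parts = urlsplit(url)
    host = (parts.hostname or "").rstrip(".")  # tolerate a trailing FQDN dot
    return parts.scheme == "https" and host in SANCTIONED_HOSTS


for url in [
    "https://chatgpt.com/",
    "https://chatgpt.com.login-verify.example/",  # hypothetical lookalike
    "https://free-chatgpt.example/",               # hypothetical lookalike
]:
    print(f"{url:45} naive={naive_check(url)} strict={strict_check(url)}")
```

Both lookalikes pass the substring test because they contain “chatgpt”, but only the genuine host survives the exact hostname comparison.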

Menlo can also provide visibility into the browser session. “We can show all the user inputs, all the responses and actually a timeline, a forensic record, of everything that happened in the session,” Harding said.

The Need for Training

Addressing the generative AI challenge of balancing productivity gains against security concerns, Harding said that “most users want to do the right thing; they're not trying to do the convenient thing. As these tools began supporting file uploads, we saw people say ‘let's see how that works’ or ask the tool to clean up meeting notes or copyedit a confidential specification for their next product.”

Those latter examples fall into the “should’ve known better” category, but these choices underscore the need for more training, education, and security tools to prevent mistakes – as well as deliberate data exfiltration attacks.

And as Gownder implied earlier, if Gen AI tools provide time savings to workers, then workers will be using those tools. But, he said, “companies are vastly underestimating the amount of training that’s required to get full benefit from a Gen AI tool. An hour of training just isn’t going to cut it.”

As Menlo’s report suggests, some of that training should focus on security. Moreover, the proliferation of Gen AI tools, whether downloaded to the desktop or accessed through the browser, combined with the huge increase in knowledge workers accessing those tools, points to a need for enterprises to control how their employees use them.

Allowing employees to work with Gen AI tools with controls in place – like watermarking and redacting – can help prevent breaches. As Gownder said, “We’ve found that those controls encourage people to not do the wrong thing and change the way they work. That goes a long way toward protecting against data leakage.”

Want to know more?

- For the December 2023 report, which is volume two of a study conducted in June 2023, Menlo Security analyzed generative AI interactions from 500 global organizations; APAC, Japan and the Americas made up most of the sample. Sixty-one percent of the respondent firms had fewer than 1,500 employees. Financial services companies accounted for about 33.8% of the sample, followed by insurance (9.7%), government (8.1%), services (7.1%) and healthcare, pharma & biotech (6.17%).

- As mentioned in the article, Gen AI is being integrated into a host of applications, as well as into Windows 11. Protecting data at the application level is a different challenge from browser security. Kevin Kieller’s recent article touches on how data is protected in Microsoft Copilot.

- According to Omdia’s Turner, “there's also the issue of protecting the AI itself, which is a bigger question that gets you into the whole issue of protecting the AI models from tampering and protecting the training data for the AI from being poisoned.”

- Large language model (LLM) training data poisoning is a topic unto itself. For an introduction, see this article and this study.

- This Accenture report found that “40% of all working hours can be impacted by LLMs like GPT-4. This is because language tasks account for 62% of the total time employees work, and 65% of that time can be transformed into more productive activity through augmentation and automation.”

- This AWS survey found that “employers believe that AI could boost productivity by 47%, with large-sized organizations expecting the highest boost (49%). Employees, too, expect AI to boost their productivity, indicating that it will help them complete tasks 41% more efficiently.”

- This Harvard Business Review study, in conjunction with Boston Consulting Group, described the results of an experiment in how knowledge workers use generative AI. “Across 18 realistic business tasks, AI significantly increased performance and quality for every model specification, increasing speed by more than 25%, performance as rated by humans by more than 40%, and task completion by more than 12%.”
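The Accenture figures appear to combine multiplicatively: if language tasks take 62% of working time, and 65% of that time can be augmented or automated, the share of total working hours affected is

$$
0.62 \times 0.65 = 0.403 \approx 40\%
$$

which matches the roughly 40% the report cites.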