Automation Vs. AI-Assisted Humans: Where to Draw the Line

This session, part of the one-day Communications & Collaboration 2022 program, was moderated by contact center experts Sheila McGee-Smith, of McGee-Smith Analytics, and Nicolas de Kouchkovsky, of CaCube Consulting, with panelists Patrick Nguyen, of [24]7.ai; Abinash Tripathy, of Helpshift; Adi Toppol, of Jacada; and Lonnie Johnston, of NICE. Here are the AI-centric takeaways from this session:

- What AI technologies are ready for prime time? Which AI technologies to try or use depends on your organization’s maturity. If you’re already very digital and work with APIs, then a starting point could be bots, and using them should be relatively straightforward for you. That said, when you do use bots, think of them as interfaces to self-service applications. For those organizations that don’t yet have good API programming skills, robotic process automation (RPA) will be easier when used in conjunction with a contact center to provide some framework. Regardless, be selective with what you pilot. Carefully consider which AI technologies make sense for your company, given where it is.

- What’s left for the humans? Is there a long-term role for people? Humans will fill in the gaps AI leaves, and there will always be complex situations requiring the human touch. The 80-20 rule applies here: According to one of the panelists, 80% of contact center work can be automated, but the remaining 20% will be human-to-human interactions. What's left over after the AI does its work are the complex cases, and in recognition of this, some contact centers are removing the handle-time constraints for these interactions. As one panelist noted, "It is not a stopwatch exercise at that point." Also remember that humans are generating the data from which the AI learns. Today, the human handles the customer logs/recordings used as training sets for the AI.

- What are the best practices for addressing automation anxiety? First off, you have to choose the right use cases and make sure you’re delivering value. Many companies push bots down the customer's throat; applying design thinking will result in more careful designs that don't annoy the customer. Generating and tagging data is critical, but the results of efforts to automate the tagging of data fed into the AI learning set have been abysmal. Consider solutions that leverage conversational models that don't require so much effort to keep up to date.

- Identify priority projects and keep them simple. Wrap an AI project into a straightforward pilot or proof of concept – and make it data-driven. Look at your existing agent chats and conversations to determine what to automate, for example.

- How to address trust with consumers? Don’t pass a bot off as a human – customers will notice because bots, and AI in general, are very poor at empathy. You also have to be aware of relevant laws. In some states, you must tell people they’re speaking with a bot. Likewise, you need to let people know when they’ve transitioned from a bot to a human.
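The advice above to make a pilot data-driven by looking at existing agent chats can be sketched in a few lines. This is only an illustration, assuming your chat logs have already been tagged with an intent label; the function name `automation_candidates`, the `min_share` threshold, and the sample intent names are all hypothetical and did not come from the panel.

```python
from collections import Counter


def automation_candidates(chat_intents, min_share=0.10):
    """Return (intent, share) pairs, most frequent first, for intents
    that make up at least min_share of all tagged chats."""
    counts = Counter(chat_intents)
    total = sum(counts.values())
    if total == 0:
        return []
    ranked = [(intent, count / total) for intent, count in counts.most_common()]
    return [(intent, share) for intent, share in ranked if share >= min_share]


# Hypothetical sample: one intent label per historical chat.
sample = ["password_reset"] * 5 + ["order_status"] * 3 + ["billing_dispute"] * 2

for intent, share in automation_candidates(sample, min_share=0.25):
    print(f"{intent}: {share:.0%} of chats")
```

High-volume intents are only a starting list. Whether a given intent is simple enough to automate, or belongs with the complex cases left to humans, still takes a person's judgment.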

What It Really Takes to Put AI to Work in Your Contact Center

I billed this session, which I moderated, as technically intermediate, and the panelists didn’t disappoint. Callan Schebella, of Inference Solutions; Jeff Gallino, of CallMiner; and Adam Champy, of Google, shared deep insights into how organizations can successfully put AI to work in their contact centers. Attendees learned:

- The barriers to using AI are falling. For example, doing speech translation and NLP used to be difficult, and only very large organizations could afford it. Now, with modern tools and platforms, even small and medium-sized businesses can use it. At the same time, deployment time has shrunk from months to hours or days.

- Bots don’t work very well in proprietary domains and when customers get emotional. The reason they don’t work well in proprietary domains is that the vendors have trained off-the-shelf speech-to-text and NLP engines using “everyone’s data.” This means they won’t do well at recognizing specific terms, phrases, product names, or concepts. For proprietary domains, you’ll need to plan on training the speech and NLP platforms you use. As noted above, bots and empathy don’t mix very well. In fact, having a bot say, “I understand you’re unhappy,” can even make people angrier or more frustrated than they had been when beginning the interaction. They recognize the company is being disingenuous; they know a bot is a program that can’t really understand emotion. Bear in mind, too, that anger detection doesn’t equate to understanding, and an intelligent agent should simply pass the transcript of the conversation and the anger sentiment to a live agent.

- Bots have become simple to build, but that has led to many poor designs and deployment challenges. The user interface, including voice cadence and inflection, is very important, yet bot developers tend to be myopic with respect to their own products or services. They need to get real-world data on how customers will speak and act, and use this data to train their bots accordingly. They must also remember that bots aren’t IVR: Deployment isn’t the end. Bots need continuous updating, and the organization needs to be prepared to evaluate performance continuously and make tweaks or adjustments to ensure adequate bot performance.

- When you use bots, you must be sure the customer can make progress toward problem resolution. The last thing you want is the “perfect voice” interface but a frustrated user. In other words, don’t lock your users inside a bot. Make an easy escape route for them. And here’s another tip: Avoid creeping users out by giving too many hints as to how much the bot knows about them.

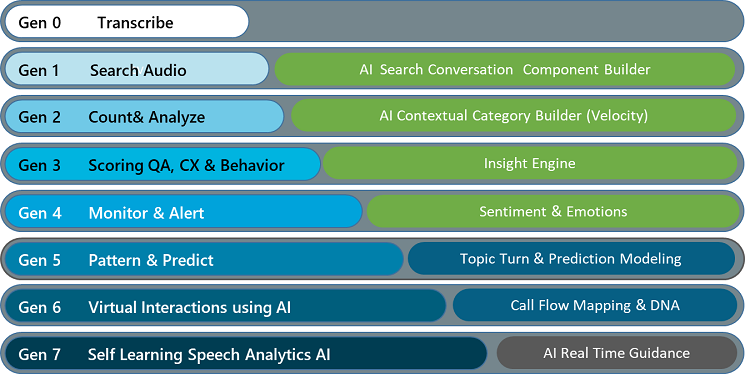

- Avoid tools that don’t have sufficient experience and depth. It seems that some new AI tool or company appears almost weekly, but you’ll usually find many lack some core component you’ll ultimately need. Consider the CallMiner graphic shown here; as it shows, we’re in generation seven of speech analytics tools, but the foundational capabilities are essential at levels five or six, too, and without them an AI project will be difficult or impossible to accomplish successfully.

- Integration in the workflow is critical. Building a standalone bot isn’t likely to generate much success. To be powerful, bots must be accurate in determining “intent” and must integrate well with the tools in the workflow.

- To get started, identify how AI might solve a well-scoped and meaningful problem, and then put your team in place. You’ll need to connect to critical backends and build for scalability. And, lastly, enable the organization for your new, automated processes.
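Two of the points above, giving users an easy escape route and passing the transcript plus the anger sentiment to a live agent, can be sketched as a simple handoff rule. This is a rough illustration under stated assumptions, not any panelist's implementation: the anger score is assumed to come from whatever sentiment tool you already use, and the names `Conversation`, `should_escalate`, `build_handoff`, the escape phrases, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical phrases that signal the customer wants a person.
# Plain substring matching is crude; a real bot would use its intent engine.
ESCAPE_PHRASES = ("agent", "human", "representative")


@dataclass
class Conversation:
    transcript: list = field(default_factory=list)
    failed_turns: int = 0  # turns where the bot could not determine intent


def should_escalate(convo, user_text, anger_score,
                    anger_threshold=0.7, max_failed_turns=2):
    """Escalate when the user asks for a person, sounds angry, or is stuck."""
    wants_human = any(phrase in user_text.lower() for phrase in ESCAPE_PHRASES)
    return (wants_human
            or anger_score >= anger_threshold
            or convo.failed_turns >= max_failed_turns)


def build_handoff(convo, anger_score):
    """Package what the live agent needs: the transcript and the sentiment."""
    return {"transcript": list(convo.transcript), "anger_score": anger_score}


convo = Conversation(transcript=["user: where is my order?"])
if should_escalate(convo, "let me talk to a human", anger_score=0.1):
    handoff = build_handoff(convo, anger_score=0.1)
```

Note that the bot does not attempt an empathetic reply when it detects anger; it hands the conversation over along with the context, so the customer doesn't have to repeat themselves.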
