The Voice & AI show took place September 6-7, 2023, in Washington, DC; No Jitter (NJ) attended some of the conference sessions virtually. Raj Koneru, CEO and Founder of Kore.ai, led the session “How Generative and Conversational AI is Transforming Everyday Experiences,” which described how generative AI and conversational AI can work together to inform and refine responsive, customer-facing chatbots.

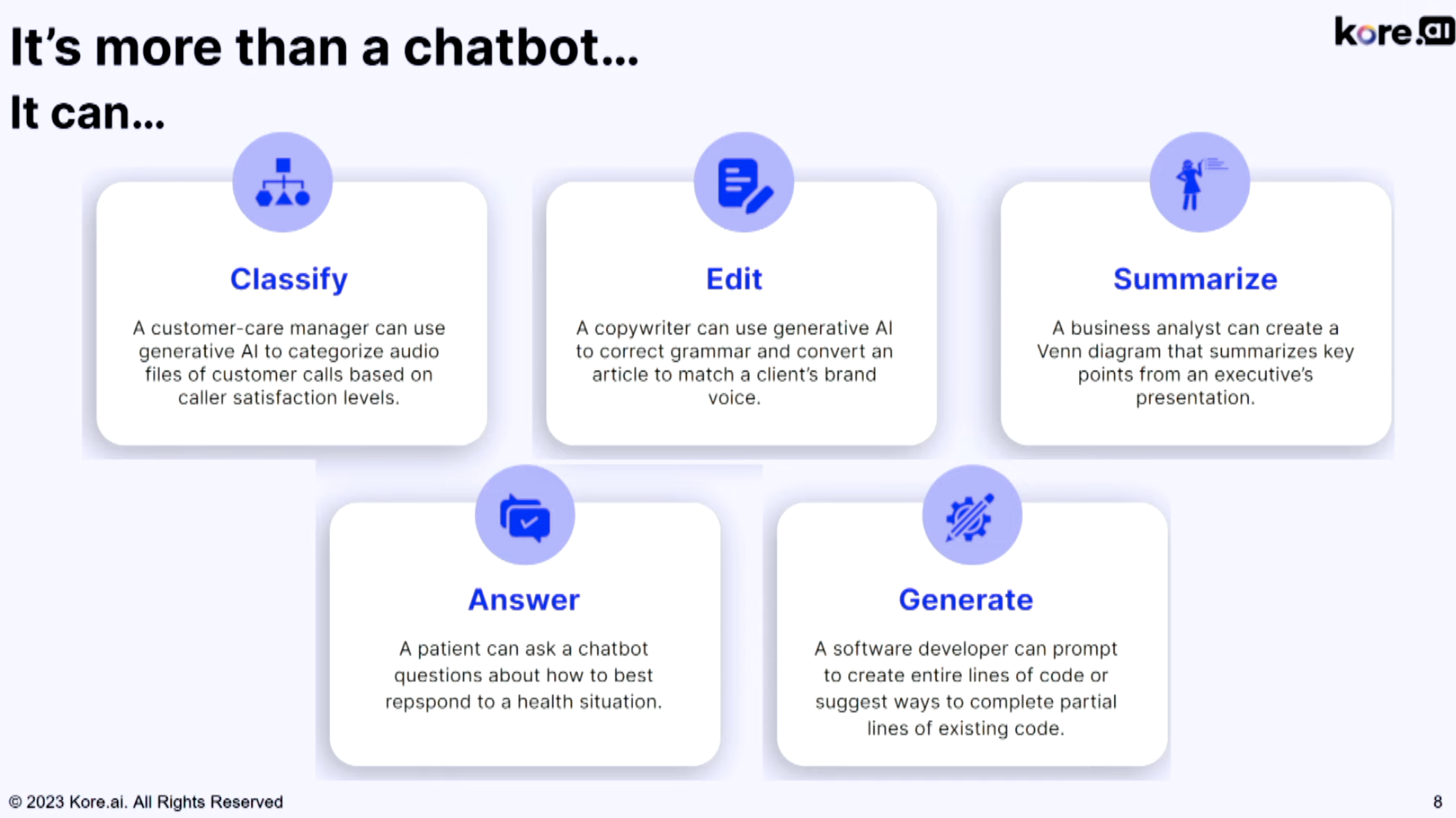

Generative AI does five things well (classify, edit, summarize, answer, and generate), as Koneru’s slide (below) illustrates. NJ has covered this functionality in multiple articles, from explorations of how those capabilities can be used in contact centers to “assistant” products such as Microsoft’s Copilot, Zoom’s AI Companion, Google’s Duet and a host of others.

Because these generative capabilities produce human-like interactions, they’re likely to be the biggest game-changers in roles that require informational inquiry and resolution. And yet, the technology comes with unique challenges. “It can answer questions and most of the time you think it's the right answer, until you realize it's the wrong answer,” Koneru said.

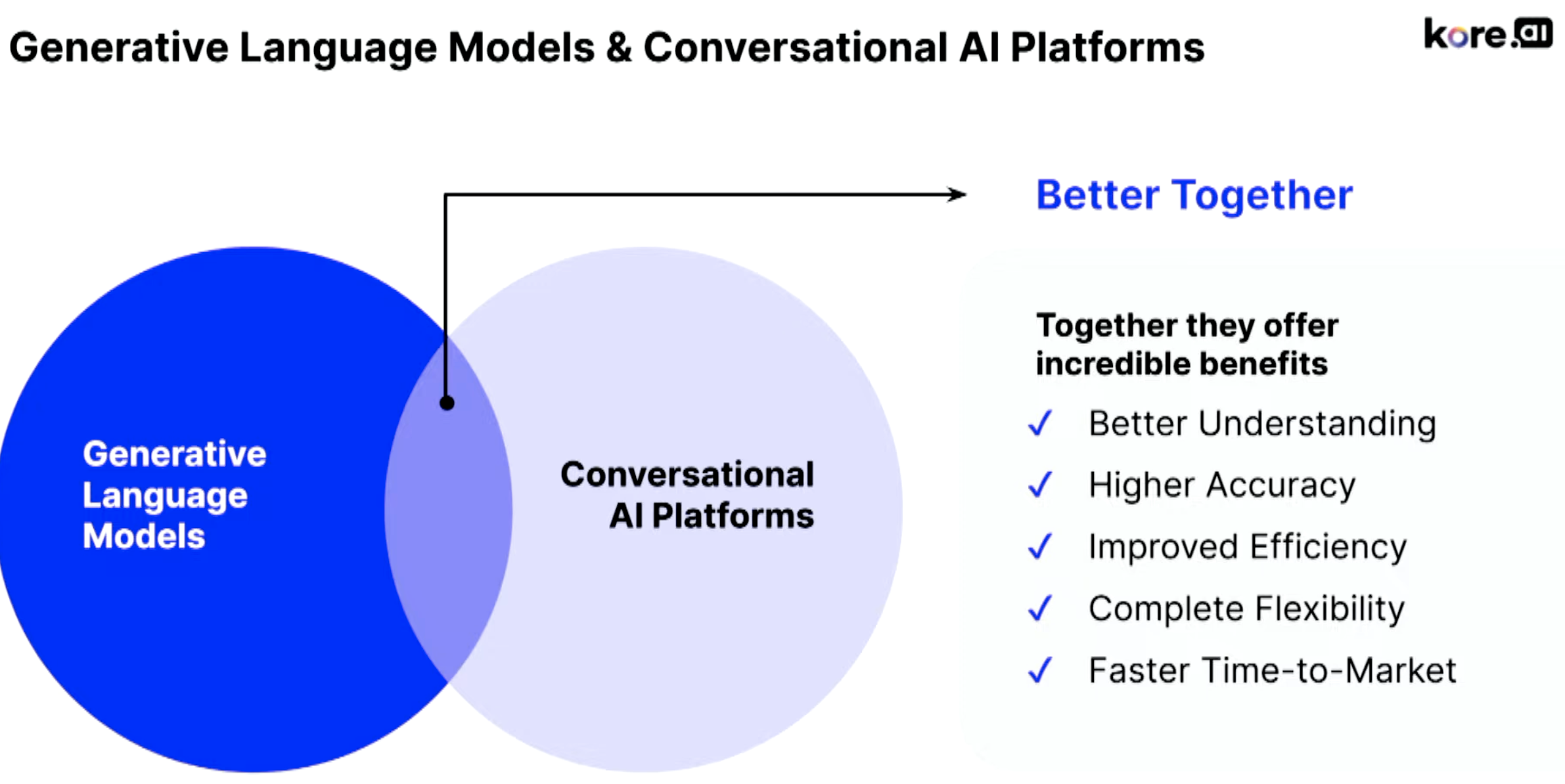

To guard against the possibility that large language models (LLMs) may generate inaccurate or even fabricated information, Koneru argues that generative AI models and conversational AI platforms (which is what Kore.ai provides to many large companies) are “better together,” as illustrated in the following slide.

Other companies have expressed similar positions. In his interview with NJ, Phillip Heltewig of Cognigy sketched out his perspective that one key use case for generative AI is in “helping [conversational flow] editors” and that “you need a conversational AI platform to make use of generative AI because otherwise you have no control of what goes in and what goes out.” (Cognigy also provides a “conversational AI” platform.)

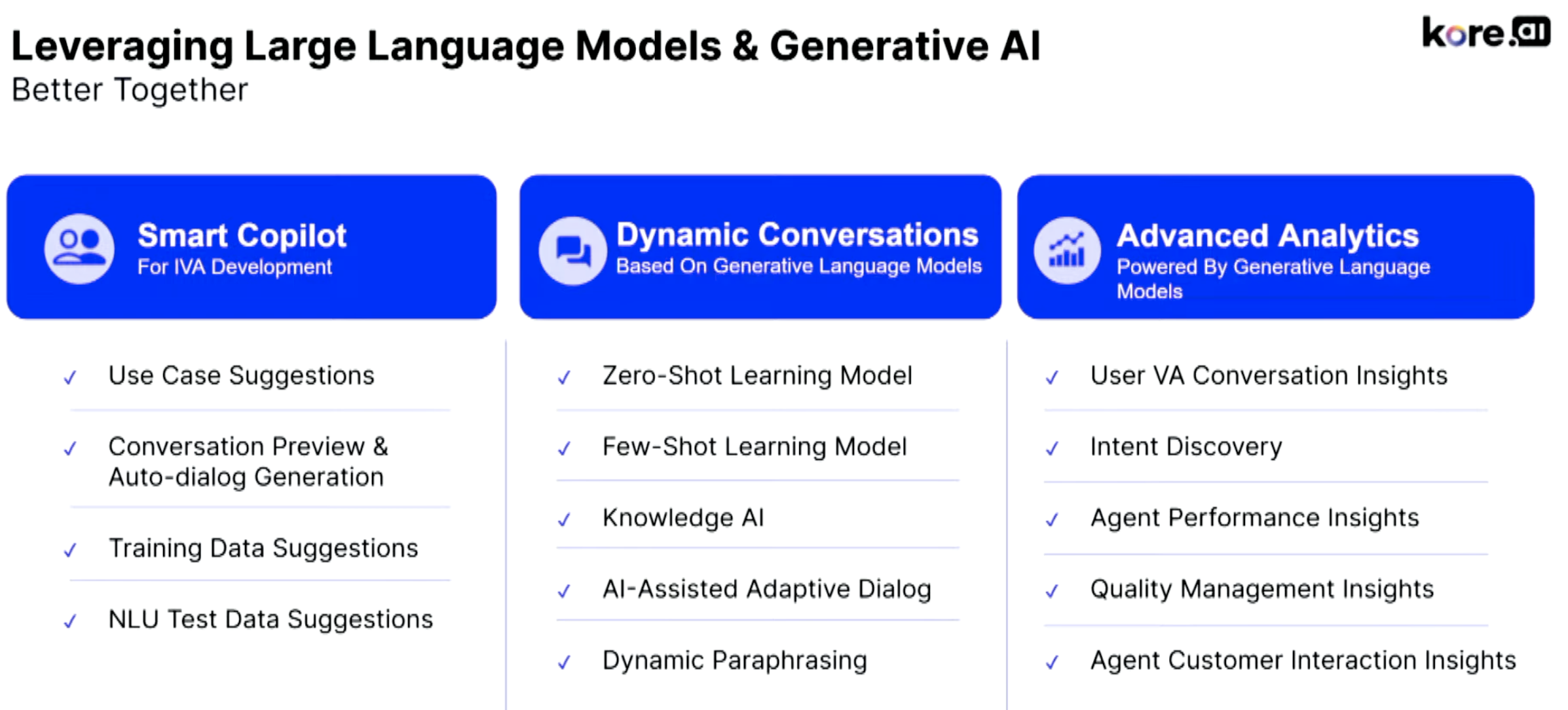

Koneru went on to describe several solutions Kore.ai has produced within the “better together” overlap shown above. One of its products, Smart Copilot, helps companies develop intelligent virtual agents (IVAs), which are typically deployed in front of the contact center, as Frank Fawzi of IntelePeer discussed in his interview with NJ. (Note: Virtual agents are a key arrow in IntelePeer’s product quiver.)

As Koneru described, his company’s customers provide Smart Copilot with a description of a business process (check account balance, transfer money, bill pay, etc.). Smart Copilot then generates example dialogues that imitate a chatbot interacting with a user. “That way you don't have to start from scratch – you can use what it generates and then modify that or pick the one that's closest to how your business functions and modify it.”

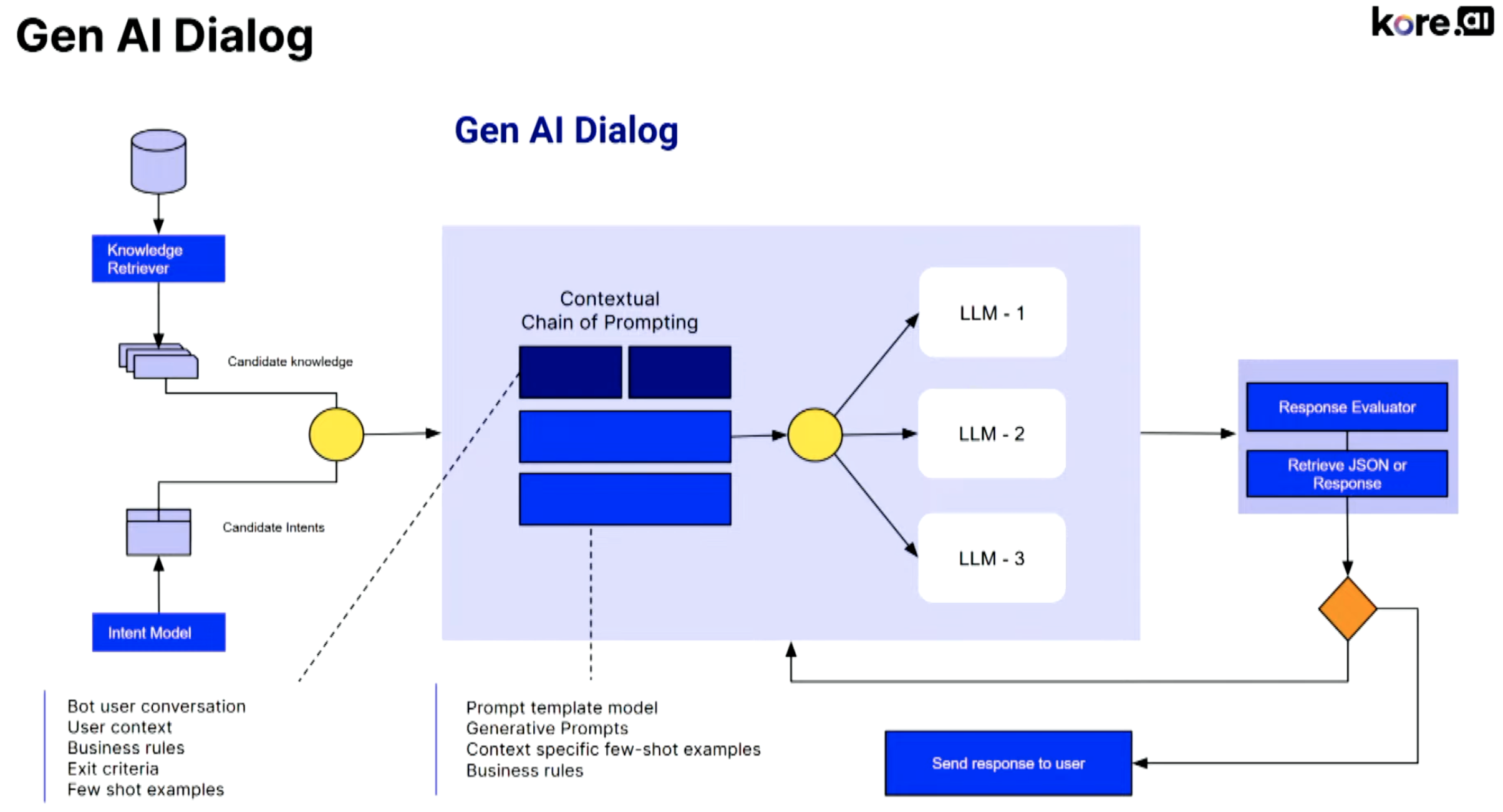

The next step for Kore.ai was to use generative AI to create dynamic conversations. This approach combines the Knowledge AI framework (listed in the previous slide) with the intents and entities the customer wants to collect, the business rules that must be followed, and the exit scenarios for the conversation flow, as illustrated in the following slide.

According to Koneru, the prompt templates are generated automatically within the platform, and the Kore.ai Experience Optimization (XO) platform uses the “chain of prompting” technique to generate the prompts so that “no one is writing prompts.” (This site provides a good overview of what context or chain-of-thought prompting is; essentially, it means designing a series of prompts that build on each other.)
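Kore.ai did not show how XO builds or chains its prompts. As a rough illustration of the general technique only, the Python sketch below chains three prompts so that each one builds on the previous output, following the order Koneru gives for the platform's LLMs (identify the intent, extract the entities, then generate the answer) and folding in the intents, entities, business rules and exit scenarios described above. Everything here is hypothetical: `FlowSpec`, `run_chain`, `PIZZA_FLOW` and the prompt wording are invented for illustration, and `fake_llm` stands in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical stand-in for any LLM call: takes a prompt string, returns text.
LLM = Callable[[str], str]


@dataclass
class FlowSpec:
    """Hypothetical description of one conversation flow."""
    intents: List[str]
    entities: List[str]
    business_rules: List[str]
    exit_scenarios: List[str]


def intent_prompt(spec: FlowSpec, user_text: str) -> str:
    # Step 1: classify the message into one of the flow's intents.
    return (f"Classify the message into one of: {', '.join(spec.intents)}.\n"
            f"Message: {user_text}\nIntent:")


def entity_prompt(spec: FlowSpec, intent: str, user_text: str) -> str:
    # Step 2: builds on step 1 by including the detected intent.
    return (f"The intent is '{intent}'. Extract values for: "
            f"{', '.join(spec.entities)}.\nMessage: {user_text}\nEntities:")


def answer_prompt(spec: FlowSpec, intent: str, entities: str, user_text: str) -> str:
    # Step 3: builds on steps 1 and 2, adding business rules and exit scenarios.
    rules = "\n".join(f"- {r}" for r in spec.business_rules)
    exits = "\n".join(f"- {e}" for e in spec.exit_scenarios)
    return (f"Intent: {intent}\nEntities: {entities}\n"
            f"Follow these business rules:\n{rules}\n"
            f"End the conversation if:\n{exits}\n"
            f"Customer: {user_text}\nBot:")


def run_chain(llm: LLM, spec: FlowSpec, user_text: str) -> str:
    # Each call's output becomes part of the next call's prompt.
    intent = llm(intent_prompt(spec, user_text)).strip()
    entities = llm(entity_prompt(spec, intent, user_text)).strip()
    return llm(answer_prompt(spec, intent, entities, user_text)).strip()


PIZZA_FLOW = FlowSpec(
    intents=["place_order", "check_order_status"],
    entities=["quantity", "size", "crust", "toppings"],
    business_rules=["No more than five pizzas per order",
                    "Medium pizzas allow at most three toppings"],
    exit_scenarios=["The customer confirms the order",
                    "The customer asks for a human agent"],
)


def fake_llm(prompt: str) -> str:
    # Canned replies keyed on which step of the chain is calling.
    if prompt.startswith("Classify"):
        return "place_order"
    if prompt.startswith("The intent"):
        return "quantity=3"
    return "Great choice. What size of pizzas would you like?"


# prints: Great choice. What size of pizzas would you like?
print(run_chain(fake_llm, PIZZA_FLOW, "I would like to place an order for three pizzas."))
```

Because each step sees only a narrow, templated prompt, the author of the flow (or, per Koneru, the platform itself) controls what the model is asked at every stage; whether XO structures its chain this way was not covered in the session.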

Koneru said that the platform uses multiple LLMs to “identify intents, extract the entities and then generate the answer.” He then launched a recorded demo to illustrate this process. In the demo, the bot sounded almost natural when speaking with the customer, though it was still somewhat robotic and some of what it said sounded “off” (e.g., the “great choice” and “sure thing” responses in the dialog below). But these are minor points; the goal was to show that the bot wasn’t programmed: the platform, powered by an LLM, generated the dialog responses on the fly.

Bot: Tony’s Pizza. How can I help you today?

Customer: Hi, I would like to place an order for three pizzas.

Bot: I'm glad to help you with that. Great choice. Three pizzas coming right up. What size of pizzas would you like to order?

Customer: I have four guests coming tonight. What size would you recommend?

Bot: Sure thing. Three medium pizzas would be perfect for four people. Would you like to go with thin crust, thick crust or cheese crust base?

Customer: Um, not sure. What did I order last time?

Bot: Let me check that for you. I see that your last order on the third of March was for two small thin crust pizzas with red onions, bell peppers and black olives. Would you like to go with thin crust again?

Customer: Yeah, for two of them. And for the third one I would like a large pizza with cheese sticks.

Bot: Perfect. So that's two medium thin crust pizzas and one large cheese crust pizza. What toppings would you like on your pizzas?

Customer: Let's add all the toppings from my last order. And also add mushrooms to one of the medium pizzas.

Bot: I got it. Just to let you know we only allow up to three toppings for medium pizzas. Shall I add mushrooms to the large pizza instead?

Customer: Yeah, that works for me.

Bot: Great. So your order will be two medium thin crust pizzas with red onions, bell peppers and black olives. And one large cheese crust pizza with red onions, bell peppers, black olives, and mushrooms. Would you like to confirm your order?

The dialog continued a bit longer and went into the ordering minutiae, as well, but the above is sufficient to illustrate how the Kore.ai platform “generated a dynamic dialogue which is controlled, stays within its parameters and produces an output which is very human like,” Koneru said.

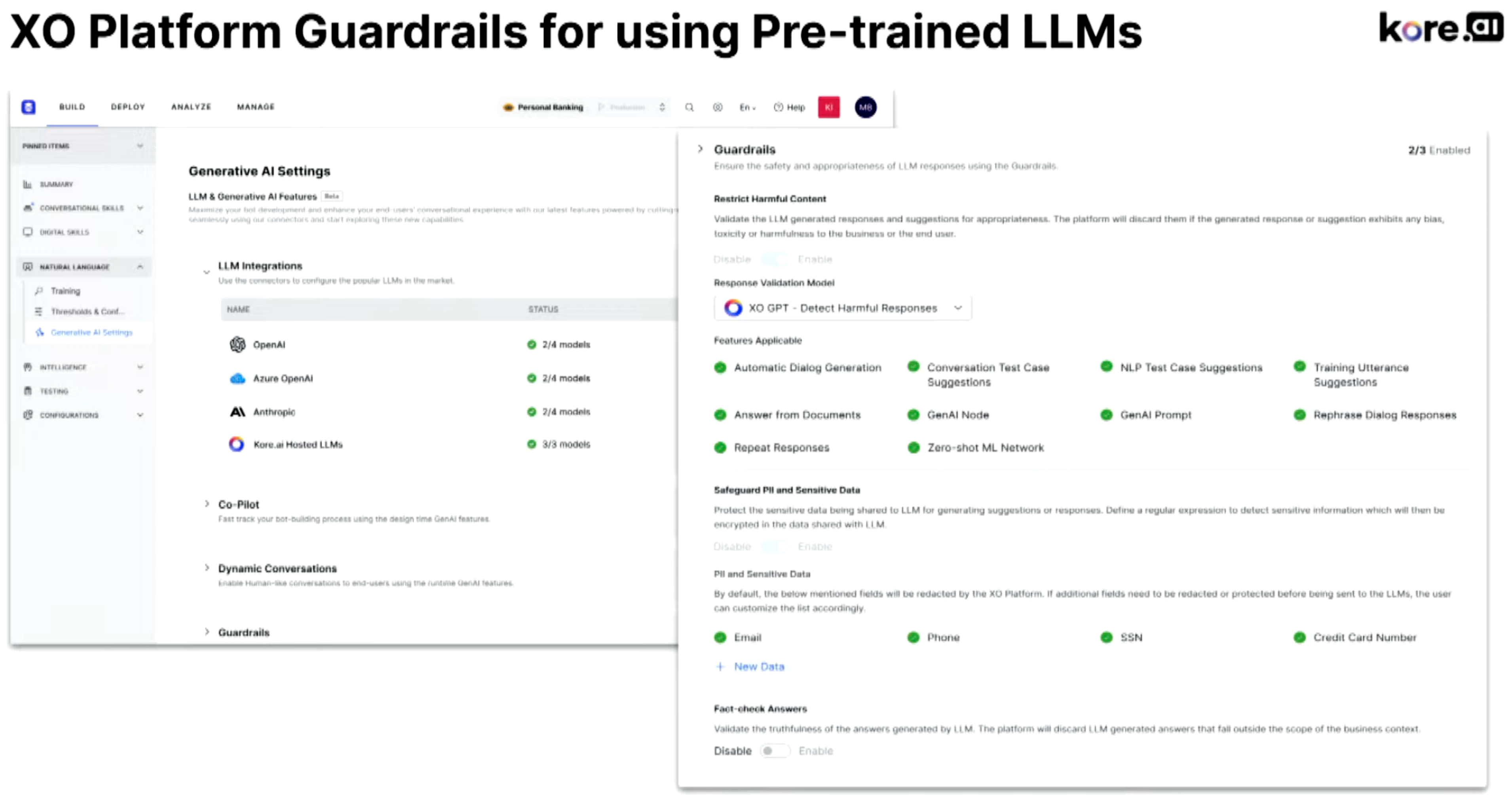

He then discussed how the concepts of “responsible AI” and “guardrails,” which typically arise whenever anyone talks about the use of generative AI, are implemented in the Kore.ai XO platform (see below). Essentially, the XO platform lets users control what the LLM can and cannot do through each of the limitations shown below.

For example, the XO platform can integrate with multiple LLMs (shown in the left-hand panel), while the guardrails are shown on the right. These include detecting harmful responses, fact-checking the answers generated by the LLMs, and redacting or obscuring personally identifiable information (PII) before it is sent to the LLMs. Guardrails can also include a company’s specific business processes or rules. So, in the pizza demo above, the pizza company might have a rule that prohibits ordering more than five pizzas. That rule would become a “guardrail” (a constraint) that would be passed to the LLM.
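Kore.ai did not describe how its redaction or rule-passing works internally. A minimal sketch of the two ideas in this paragraph, assuming simple regular expressions for PII (an illustrative choice, far weaker than production-grade PII detection) and assuming business rules reach the LLM as plain-text constraints in the prompt, might look like the following. `PII_PATTERNS`, `redact` and `guarded_prompt` are hypothetical names.

```python
import re
from typing import Dict, List

# Illustrative patterns only; real PII detection needs much more than regexes.
# Order matters: each pattern runs on the output of the previous one.
PII_PATTERNS: Dict[str, re.Pattern] = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits
    "PHONE": re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b"),  # e.g. 555-123-4567
}


def redact(text: str) -> str:
    """Replace each PII match with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def guarded_prompt(user_text: str, business_rules: List[str]) -> str:
    """Redact PII, then pass the business rules to the LLM as explicit constraints."""
    rules = "\n".join(f"- {rule}" for rule in business_rules)
    return (f"You must obey these business rules:\n{rules}\n\n"
            f"Customer: {redact(user_text)}\nBot:")


print(guarded_prompt("Six pizzas please, call me at 555-123-4567",
                     ["No more than five pizzas per order"]))
```

Redaction happens before the prompt is assembled, so the raw phone number or email address never leaves the platform; the business rule, by contrast, is only a request to the model, which is why a separate check on the model's output is still useful.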

Note that while each guardrail is defined, it is not clear from these slides, nor did Koneru state in this presentation, what happens after the platform detects and discards a “harmful response.” In a different session at Voice & AI (“The Power of Generative and Conversational AI for Large-Scale Success”), however, Koneru said that Kore.ai is putting in place a validation framework so that if the LLM generates an unexpected response, the platform will detect the guardrail violation and then ask the same LLM, or another one, to regenerate the response until it passes validation.
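The validation framework Koneru described can be sketched as a generate, check and retry loop. In this hypothetical Python version, each validator returns an error message or `None`; the loop cycles through the supplied LLMs (so a retry can go to the same model or another one) and stops as soon as a reply passes. The attempt cap, the feedback message, the stand-in models `eager_llm` and `careful_llm`, and the naive `max_pizzas_rule` check (based on the five-pizza example above) are all assumptions, not details Kore.ai disclosed.

```python
import re
from typing import Callable, List, Optional, Sequence

LLM = Callable[[str], str]
# A validator returns an error message, or None if the response passes.
Validator = Callable[[str], Optional[str]]

MAX_PIZZAS = 5  # the hypothetical rule from the pizza example


def max_pizzas_rule(response: str) -> Optional[str]:
    # Naive check: flag any "N pizza(s)" mention where N exceeds the limit.
    for match in re.finditer(r"\b(\d+) pizzas?\b", response):
        if int(match.group(1)) > MAX_PIZZAS:
            return f"mentions more than {MAX_PIZZAS} pizzas"
    return None


def generate_validated(prompt: str, llms: Sequence[LLM],
                       validators: Sequence[Validator],
                       max_attempts: int = 4) -> Optional[str]:
    """Regenerate, cycling through the given LLMs, until a reply passes every validator."""
    feedback = ""
    for attempt in range(max_attempts):
        llm = llms[attempt % len(llms)]  # same LLM again, or the next one
        response = llm(prompt + feedback)
        errors: List[str] = [e for e in (v(response) for v in validators) if e]
        if not errors:
            return response
        # Tell the model what went wrong before the next attempt.
        feedback = "\n\nYour previous reply was rejected: " + "; ".join(errors)
    return None  # caller decides the fallback, e.g. a scripted reply or a human agent


def eager_llm(prompt: str) -> str:
    return "Sure, 6 pizzas coming right up!"


def careful_llm(prompt: str) -> str:
    return "We can make up to 5 pizzas per order. Shall I make it 5?"


print(generate_validated("Customer: I want 6 pizzas.\nBot:",
                         [eager_llm, careful_llm], [max_pizzas_rule]))
```

The attempt cap is a deliberate design choice in this sketch: regenerating strictly "until it passes" could loop forever if every model keeps violating the rule, so the loop returns `None` and leaves the fallback to the caller.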

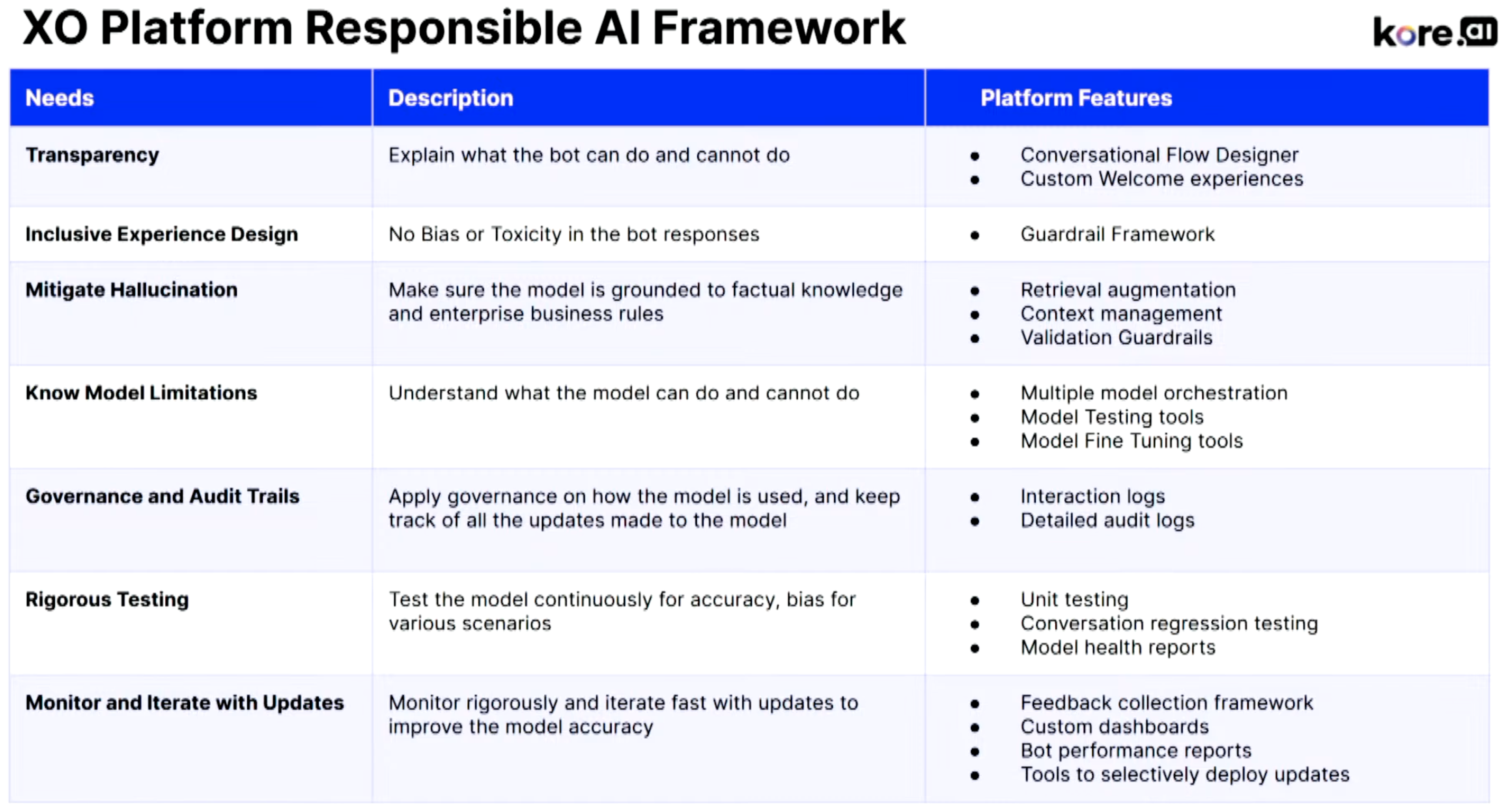

This next slide shows how individual features of Kore.ai’s platform address the need for security and responsible AI. “These are the choices we made within the platform to achieve responsible AI,” Koneru said. “Everybody talks about responsible AI, but you need to be able to implement and that implementation is the responsibility of the platform.”

Koneru described the ability to use LLMs to dynamically generate dialogues, a capability of Kore.ai’s platform that the industry in general is also pursuing, as the “holy grail” that many are trying to achieve. Interestingly, Cognigy’s Heltewig used the same phrase to describe that same goal in his Q&A with NJ.

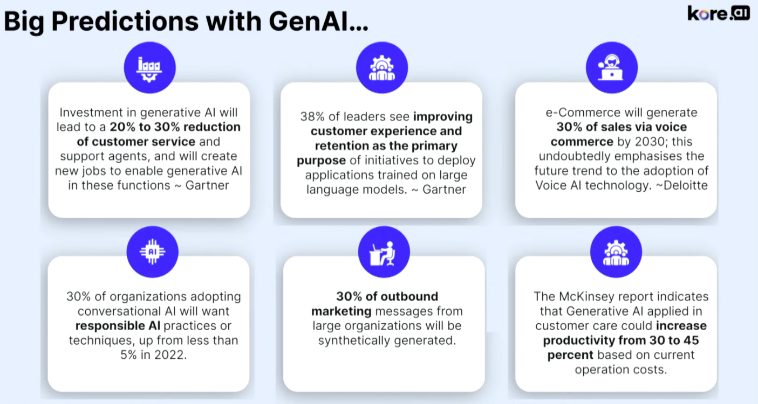

Koneru began his presentation with some cited predictions about generative AI, as shown in the following graphic. But it seems more appropriate to end with them. Chief among those predictions is a 20 to 30 percent reduction in customer service and support agents, not to mention predictions that 30% of outbound marketing messages will be synthetically generated, a 30% increase in e-commerce sales, and a potential increase in productivity.

How realistic are these predictions? That’s anyone’s guess, but perhaps this overview of what Kore.ai’s platform can do today sheds some light on what may be possible tomorrow.