Today at WebexOne, Cisco announced several components of its AI strategy – Webex AI Assistant, Real-Time Media Models (RMMs) and the Webex AI Codec – which the company will be making “pervasive” across its portfolio. No Jitter spoke with Javed Khan, SVP and GM of Cisco Collaboration, about the announcements.

Webex AI Assistant

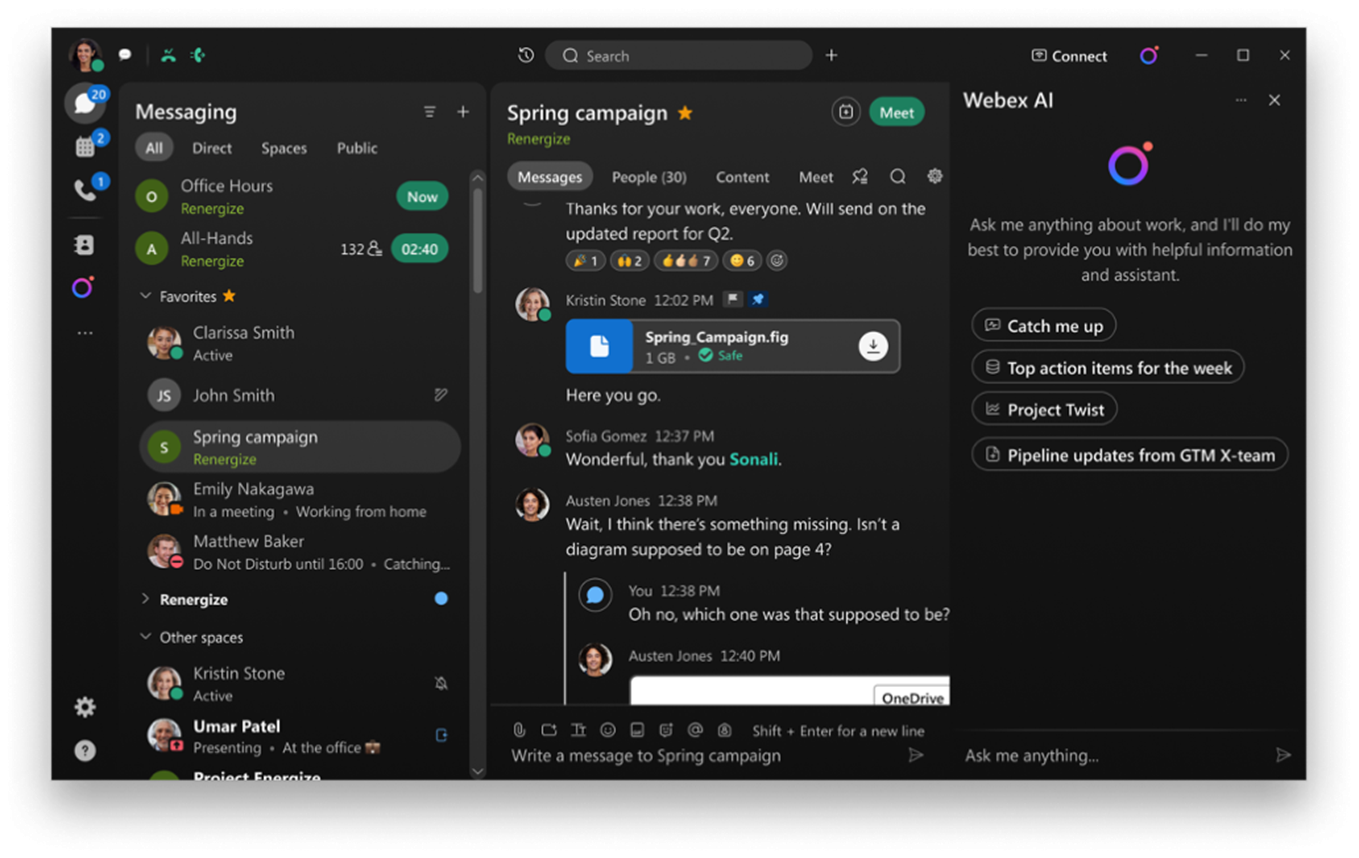

The new Webex AI Assistant provides generative AI-powered functionality such as recommended changes to tone, format, and phrasing within Webex Messaging and Slido. The Assistant will also suggest responses to messages and generate summaries of messages, meetings and Slido topics. (Slido is a Q&A and polling platform acquired by Cisco in 2021.) The following graphic shows the Webex AI Assistant in action.

Including this functionality brings Cisco Webex to parity with the other major unified communications and collaboration (UC&C) players, all of which offer variations on generative AI-based summarization and recommendations.

With respect to the large language models (LLMs) powering the AI Assistant, Khan said that Cisco has taken a federated approach. “We expect significant innovation and maturity in terms of cost, capabilities, and specialization in the space. We will use the best language model for that particular use case which implies multiple models, a combination of commercial models, [open source models] – and some we’ll home grow for our own use cases, especially on the RMM side.”

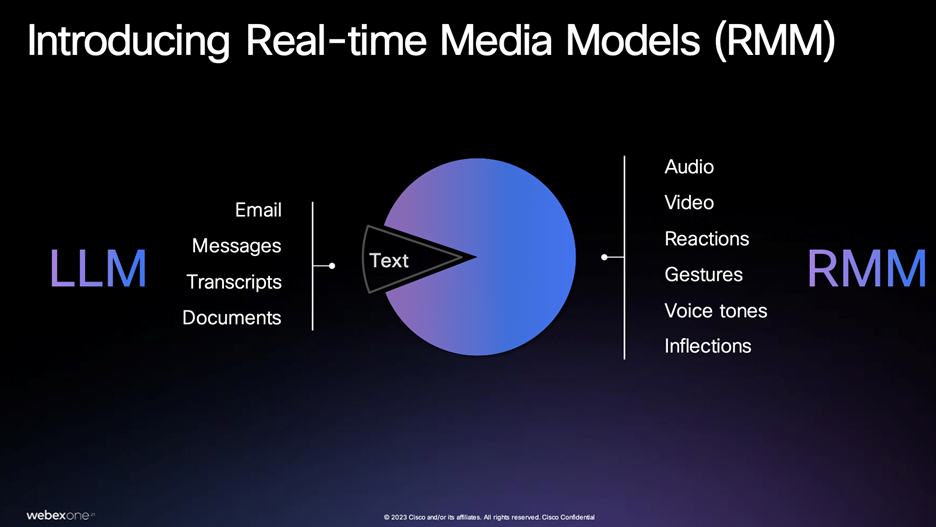

Real-Time Media Models (RMMs)

The RMMs are capable of enhancing audio and video quality (in conjunction with the Webex AI Codec) and can recognize people, objects, and actions such as movements and gestures. Khan said that the new RMMs are “capable of understanding audio and video signals – reactions, gestures, expressions – so when I say something with an elevated voice or I say something softly or nod, or give a thumbs up or thumbs down, it all may mean slightly different things even if [what I say or write] is the same. We feel that all of that can better inform the AI.” In other words, the RMMs are using body language and vocal tone to add more context to communication.

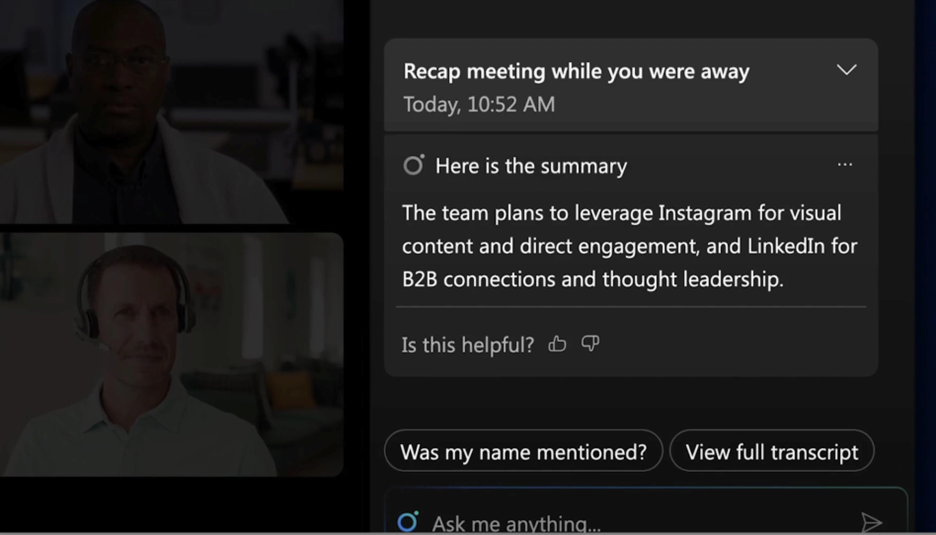

Cisco Webex appears to intend to combine LLMs and RMMs to derive additional insights and/or trigger actions. For example, Webex will soon be able to recognize when a user steps away (so, data for the RMM) and then capture meeting notes and/or a summary of what happened (an increasingly common use case for LLMs) – cued to when that user returns (see below). Khan said that the “walk away and return” feature is currently in beta and that Cisco expects to release it by the end of 2023 or in 2024.

Responsible AI, privacy concerns, etc., are all interwoven with the (generative) AI discussion and become even more pressing when the AI is not just capturing notes and summaries, but also end users’ facial expressions, gestures, and presence.

“We don’t train on customer data,” Khan said, “and all of this you can opt in at the administrator level – they can decide they don't want this feature – and at the end user level. So there are two levels of controls.”

Khan specified that Webex does not record or train on the fact that the user walked away. “AI has to deliver enough value that customers find that useful while we also address their privacy and persistence of data concerns.”

(So nobody has to worry that Webex is going to tell your boss when you had to get up for something mid-meeting.)

Next on the RMM feature list are gestures – detecting when someone is clapping or making a thumbs up/down – as well as tone inflection, which, simply put, detects increasing/decreasing decibel levels.
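To make “increasing/decreasing decibel levels” concrete, here is a minimal sketch of how a level change between two short audio frames could be measured. It is an illustration only, not Cisco's implementation: the function names, the 3 dB threshold and the assumption that samples are floats in the range -1.0 to 1.0 are all hypothetical.

```python
import math


def frame_db(samples, floor_db=-120.0):
    """Return the RMS level of one frame in dB relative to full scale (1.0).

    Assumes samples are floats in [-1.0, 1.0]; silence is clamped to floor_db.
    """
    if not samples:
        return floor_db
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms <= 0.0:
        return floor_db
    return max(floor_db, 20.0 * math.log10(rms))


def level_trend(frames, threshold_db=3.0):
    """Classify the change in level from the first frame to the last.

    threshold_db is an arbitrary illustrative value, not a Webex setting.
    """
    if len(frames) < 2:
        return "steady"
    delta = frame_db(frames[-1]) - frame_db(frames[0])
    if delta >= threshold_db:
        return "rising"
    if delta <= -threshold_db:
        return "falling"
    return "steady"


quiet = [0.01] * 100   # roughly -40 dB
loud = [0.1] * 100     # roughly -20 dB
print(level_trend([quiet, loud]))
```

A real system would presumably look at far more than raw loudness, but the sketch shows why a raised or lowered voice is a measurable signal rather than a subjective one.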

Webex AI Codec

According to Khan, one of the big pieces of innovation that allows Cisco to do generative AI on the audio side is a new, homegrown audio codec that uses machine learning to compress audio so that “we can maintain very high fidelity in very low bandwidth conditions.” He continued: “Because of its low network footprint, the codec allows the application it is powering to send redundant packets, retransmit lost packets and predict missed packets.” And per the release, the Webex AI Codec also has built-in speech enhancement functions like noise removal, de-reverberation and bandwidth extension.
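The “redundant packets” idea can be illustrated with a generic sketch: if each frame is small enough, every packet can carry a copy of the previous frame, so a single lost packet can be rebuilt from the one that follows it. This shows the general technique only; it is not a description of how the Webex AI Codec works, and the packet layout and function names are hypothetical.

```python
def packetize(frames):
    """Wrap each frame in a packet that also carries the previous frame."""
    packets = []
    previous = None
    for seq, frame in enumerate(frames):
        packets.append({"seq": seq, "primary": frame, "redundant": previous})
        previous = frame
    return packets


def receive(packets, total):
    """Rebuild the frame sequence from whichever packets arrived.

    A missing frame is filled from the redundant copy in the next packet
    when that packet made it through; otherwise it stays None.
    """
    frames = [None] * total
    for packet in packets:
        seq = packet["seq"]
        frames[seq] = packet["primary"]
        if seq > 0 and frames[seq - 1] is None and packet["redundant"] is not None:
            frames[seq - 1] = packet["redundant"]
    return frames


sent = packetize(["f0", "f1", "f2", "f3"])
arrived = [p for p in sent if p["seq"] != 1]   # packet 1 is lost in transit
print(receive(arrived, 4))
```

The trade-off is that every packet is larger, which is why a codec with a low network footprint leaves room for this kind of protection. Two consecutive losses would still leave a gap, which is where retransmission or prediction would have to take over.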

Khan said that the codec will eventually become a part of all of Cisco’s technology, but the company is starting with calling use cases. “Early next year, one-on-one phone calls will first get the codec, and then over time, meetings and the contact center, and then our devices,” he said.

Want to know more?

Stay tuned: Over the next several days NJ will cover the news around WebexOne.