In my previous Decoding Dialogflow article, I discussed intents and provided guidance about how to create intents so that a customer service virtual agent can understand what the person using the bot wants. Understanding the user’s intent is only part of the chatbot problem, however.

Equally important is extracting relevant information from a user’s input; this information includes dates, addresses, account numbers, times, amounts, and such. This extraction process is known as entity recognition. Entity recognition is Dialogflow’s mechanism for identifying and extracting useful data from what the user says or types. Entities add specificity to a user’s intent.

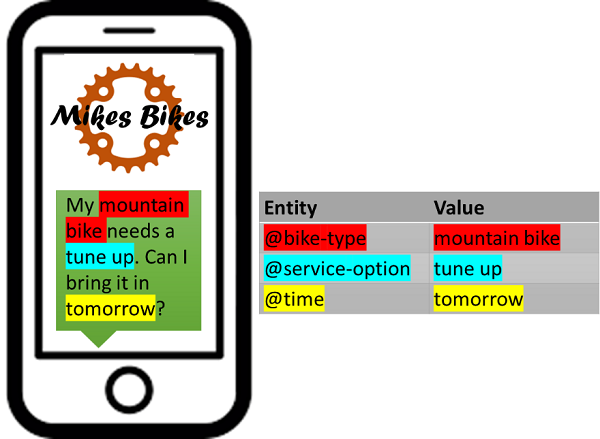

Conceptually, think of entities as objects or facts that are important to a conversation. Consider the following snippet of conversation in a cycling repair shop bot.

In this example, the end-user customer types in a request for service and the bot is supposed to schedule an appointment. When programming this agent in Dialogflow, the developer identifies the different entities that are important to a cycling service application. In the above conversation snippet, the entities are the bike-type, the service-option, and the time. These values make the conversation specific enough so that the bot could schedule a service appointment and reply to the customer telling her when to bring the bike in.

You might hear some people use the term “slot” instead of “entity.” Slots and entities are the same thing, but Google uses the term “entity” in its Dialogflow documentation.

Three Types of Entities

Dialogflow supports three types of entities: system entities, developer entities, and user or session entities. As shown in the example above, Dialogflow uses a preceding “@” sign in the name to identify a word or short phrase as an entity.

System Entities

Dialogflow has a number of built-in objects, or system entities, that it can distinguish and understand. Using these built-in system entities can significantly shorten development because they don’t require additional programming.

System entities cover many things customers commonly reference: time, date, numbers, speed, length, weight, percentage, temperature, currency, volume, addresses, ZIP codes, geographies, names, URLs, and colors. In a bot program, the @ sign and the prefix “sys” precede the names of these entities, as in @sys.date or @sys.geo-city.

Using @sys entities means developers don’t need to define common information themselves. A simple example is weather. Without the @sys.geo entities, a weather bot developer would need to program in every city and every state or province in the country where the bot is supposed to work. Because Dialogflow already knows cities, towns, states, provinces, and countries, developers don’t need to program these elements into their applications. The same is true for most names, places, numbers, currencies, airport codes, phone numbers, and so on; Dialogflow can identify many of these with no programming required. Thus, if the customer said, “I live in Salt Lake City and my mountain bike needs a tune up tomorrow,” the bot would identify “Salt Lake City” as a @sys.geo-city entity, “tune up” as a @service-option entity, and “mountain bike” as a @bike-type entity.

Developer Entities

Developer entities are application-specific entities created by the bot creator. In the bike example above, the developer created the @bike-type and @service-option entities. In a banking application, developer entities might include information like @account-number and @withdrawal-amount. A customer service “order status” bot application would have entities like @order-number and @ship-to-zipcode.

The point is, developers rely on this type of entity to support a specific application, using any naming convention they choose.

For large applications with many different entities involved, developers might upload all the various entities by importing a CSV file or using JSON code to set up the entities.
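As a rough illustration of the CSV route, the sketch below turns rows of text into a JSON-style entity definition. The row layout (reference value first, synonyms after it), the sample services, and the field names `displayName`, `entities`, `value`, and `synonyms` are assumptions made for this example; check the Dialogflow documentation for the exact import format before relying on them.

```python
import csv
import io
import json

# Hypothetical CSV content: the first column is the reference value and
# the remaining columns are synonyms (layout assumed for illustration).
CSV_TEXT = '''"tune up","tune up","tune","service","maintenance","adjustment"
"flat repair","flat repair","fix a flat","puncture"
'''

def csv_to_entries(text):
    """Turn CSV rows into a list of {"value", "synonyms"} dictionaries."""
    entries = []
    for row in csv.reader(io.StringIO(text)):
        cells = [cell.strip() for cell in row if cell.strip()]
        if not cells:
            continue  # skip blank lines
        entries.append({"value": cells[0], "synonyms": cells[1:]})
    return entries

# Hypothetical entity definition; the field names are assumptions,
# not a confirmed Dialogflow schema.
service_option = {
    "displayName": "service-option",
    "entities": csv_to_entries(CSV_TEXT),
}

print(json.dumps(service_option, indent=2))
```

Keeping the entries in a file like this makes it easier to review and version a long entity list than typing each value into the console by hand.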

Dialogflow provides a mechanism that lets developers use synonyms to identify an entity. In the bike shop example, a customer might say “tune up,” “service,” “tune,” “maintenance,” or “adjustment.” Developers must enter all synonyms into Dialogflow so the system is able to correlate a word or phrase with an entity.
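To make the synonym idea concrete, here is a minimal sketch of how a list of synonyms can map back to one reference value. It uses plain string matching, which is far simpler than Dialogflow’s own matching; the table and the function names `build_lookup` and `resolve` are hypothetical.

```python
import re

# Hypothetical synonym table for the bike shop's @service-option entity.
SERVICE_OPTION = {
    "tune up": ["tune up", "tune", "service", "maintenance", "adjustment"],
}

def build_lookup(entity):
    """Map every synonym (lowercased) to its reference value."""
    lookup = {}
    for value, synonyms in entity.items():
        for synonym in [value, *synonyms]:
            lookup[synonym.lower()] = value
    return lookup

def resolve(utterance, lookup):
    """Return the reference value for the longest synonym found, or None."""
    text = utterance.lower()
    for synonym in sorted(lookup, key=len, reverse=True):
        if re.search(r"\b" + re.escape(synonym) + r"\b", text):
            return lookup[synonym]
    return None

LOOKUP = build_lookup(SERVICE_OPTION)
print(resolve("My mountain bike needs some maintenance", LOOKUP))  # tune up
print(resolve("Do you sell helmets?", LOOKUP))                     # None
```

Whatever word the customer uses, the bot’s downstream logic only has to handle the single reference value.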

Session Entities

The third type of entity, a session entity, ties to a customer and a session. For example, a bot application may need a customer’s name and Social Security number or perhaps a list of previous orders; these are pieces of data relevant to a particular customer during a particular bot interaction session.

Session entities are created programmatically using Dialogflow APIs; these entities time out by default in 10 minutes. This means that if the customer hasn’t interacted with the bot for 10 minutes, the system “forgets” the values of the session entities.
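The timeout behavior can be pictured with a small in-memory model. This is not the Dialogflow API: the class `SessionEntityStore` and its methods are hypothetical, and the sketch assumes that any read or write counts as an interaction that restarts the 10-minute window described above.

```python
import time

SESSION_ENTITY_TTL_SECONDS = 10 * 60  # the 10-minute default described above

class SessionEntityStore:
    """Toy in-memory store; names here are hypothetical, not Dialogflow API."""

    def __init__(self, ttl=SESSION_ENTITY_TTL_SECONDS, clock=time.monotonic):
        self._ttl = ttl
        self._clock = clock
        self._sessions = {}  # session_id -> (last_activity, values)

    def _live_values(self, session_id):
        """Return the session's values, or None if missing or expired."""
        entry = self._sessions.get(session_id)
        if entry is None:
            return None
        last_activity, values = entry
        if self._clock() - last_activity > self._ttl:
            del self._sessions[session_id]  # idle too long: forget everything
            return None
        return values

    def set(self, session_id, name, value):
        values = self._live_values(session_id) or {}
        values[name] = value
        self._sessions[session_id] = (self._clock(), values)

    def get(self, session_id, name):
        values = self._live_values(session_id)
        if values is None:
            return None
        self._sessions[session_id] = (self._clock(), values)  # activity resets the timer
        return values.get(name)

# Usage with a fake clock so the example does not have to wait 10 minutes.
now = [0.0]
store = SessionEntityStore(clock=lambda: now[0])
store.set("session-1", "previous-orders", ["A100", "A101"])
now[0] = 9 * 60                                     # 9 minutes of silence
print(store.get("session-1", "previous-orders"))    # ['A100', 'A101']
now[0] = 20 * 60                                    # 11 more minutes of silence
print(store.get("session-1", "previous-orders"))    # None
```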

Requiring and Combining Entities

Dialogflow has mechanisms that help bot builders use entities in conversations and responses. One involves making entities required and another provides the ability to combine certain entities.

Required Entities

A bot developer can mark any of the three entity types as “required.” When required entities are in use, the customer must specify values for them. If the customer doesn’t specify values for required entities, the bot can’t fulfill the customer’s intent. For example, if a customer wants to transfer money between accounts, required entities would be the @from-account, the @to-account, and the @transfer-amount. Without these three pieces of information, transfers between an individual’s bank accounts can’t proceed.

The bot developer can program multiple ways to ask the user for the required information. Dialogflow will loop through these prompts, interacting with the customer until it has either received the required information or given up. In the latter case, the conversation moves to a fallback intent.
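The prompting loop for required entities can be sketched as a small decision function. This illustrates the idea only, not how Dialogflow implements it; the prompt wording, the `MAX_ATTEMPTS` limit, and the function `next_step` are assumptions made for the example.

```python
REQUIRED = ["from-account", "to-account", "transfer-amount"]

# Hypothetical prompt variations for each required entity.
PROMPTS = {
    "from-account": ["Which account should the money come from?",
                     "Please name the account to transfer from."],
    "to-account": ["Which account should receive the money?",
                   "Please name the account to transfer to."],
    "transfer-amount": ["How much would you like to transfer?",
                        "Please tell me the amount to transfer."],
}

MAX_ATTEMPTS = 3  # assumed limit; the article does not state one

def next_step(collected, attempts):
    """Decide what the bot does next: fulfill, prompt, or fall back.

    collected: dict of entity name -> value gathered so far
    attempts:  dict of entity name -> number of prompts already sent
    """
    for name in REQUIRED:
        if name in collected:
            continue
        asked = attempts.get(name, 0)
        if asked >= MAX_ATTEMPTS:
            return ("fallback", None)
        variations = PROMPTS[name]
        return ("prompt", variations[asked % len(variations)])
    return ("fulfill", None)

print(next_step({"from-account": "checking"}, {}))
print(next_step({"from-account": "checking"}, {"to-account": 3}))
print(next_step({"from-account": "checking", "to-account": "savings",
                 "transfer-amount": 50}, {}))
```

The first call asks for the missing @to-account, the second gives up and hands off to the fallback, and the third has everything it needs to fulfill the transfer.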

Composite Entities

On occasion, combining entities may be helpful. For example, a developer building a bot for pizza ordering may want to combine toppings into a single “combined entity,” such as @toppings-list. A music playlist is another example of a combined entity. Google has also defined several combinations for system entities; for example, an @sys.date-time system entity combines the @sys.date and @sys.time entities, and @sys.place-attraction combines location and event system entities, which can hold things like the location and name of a theme park (Orlando, Florida, USA, and Disney World, for example).
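One way to picture a combined entity is as a single value assembled from simpler ones. The sketch below joins a separately matched date and time into one value, loosely mirroring the date-time combination mentioned above. The input dictionary and the function `combine_date_time` are hypothetical, and the actual format Dialogflow returns may differ.

```python
from datetime import date, datetime, time

def combine_date_time(parts):
    """Build one datetime from separate ISO-formatted date and time strings."""
    day = date.fromisoformat(parts["date"])
    clock = time.fromisoformat(parts["time"])
    return datetime.combine(day, clock)

# Hypothetical values, as if a date entity and a time entity had each matched.
matched = {"date": "2024-05-01", "time": "15:30:00"}
print(combine_date_time(matched).isoformat())  # 2024-05-01T15:30:00
```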

Intents or Entities First?

Although bot builders will likely identify intents and entities simultaneously, they’ll need to input entities into Dialogflow before training a virtual agent with training phrases. This is so that the machine learning engine can properly identify the entities when it examines the training phrases.

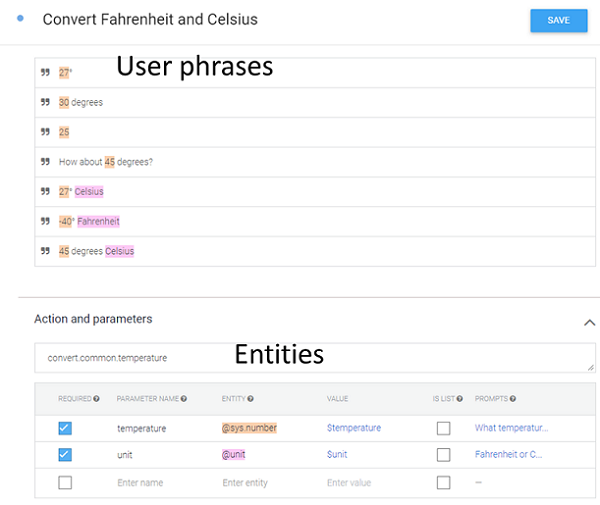

When you enter user intent phrases, Dialogflow immediately tries to identify words or strings of words, numbers, or names as entities. The system highlights entities found in the customer phrase with different colors to show which type of entity it has identified. This process is called “annotating.”

The purpose of the bot shown below is to convert between Celsius and Fahrenheit temperature scales. Note how the words in the user phrases at the top are annotated with colors that match the corresponding entities below.

Entity Creation Best Practices

- Use system entities when possible to ease the effort required to program a virtual agent.

- When creating training phrases, review each phrase carefully to make sure Dialogflow is correctly recognizing and annotating the entities.

- When you tell Dialogflow which words or phrases belong to a particular entity, be consistent in how you identify them. For example, if you’re using time in training phrases, and you have phrases like “Set the alarm for 6:00 a.m.” or “Wake me up at 7:00 a.m.,” make sure you help Dialogflow annotate only “6:00 a.m.” and “7:00 a.m.” You wouldn’t identify “for 6:00 a.m.” in one case and just “7:00 a.m.” in the other. Dialogflow needs only the numbers and the a.m./p.m. designator.

- Make multiple training phrases for each entity.

- Include all possible synonyms and variations for each entity. Dialogflow has some intelligence in identifying synonyms, and you can turn on a “Define Synonyms” switch to correlate synonyms with a particular entity.

- Make entities as specific as possible. Entities that are too general degrade Dialogflow’s machine learning performance.

- Avoid putting in meaningless text as part of an entity phrase. Dialogflow already takes care of filler words and phrases such as “Hmmmm,” “let’s see,” and “please.”

- Use a variety of examples for how people say things. For example, when using an @sys.time entity, provide examples that include “a.m.,” “p.m.,” and “o’clock” because people will use all three ways to express time.

- Make composite entities only one level deep. Combining entities into multiple levels, such as @animal.genus-family-order-class, goes too deep and degrades Dialogflow’s performance.
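The advice above about annotating time values consistently lends itself to a quick automated check. The sketch below flags annotations that begin with a leading word such as “for” or “at.” The sample data, the word list, and the function `inconsistent_annotations` are all hypothetical; a real check would read annotations from an exported agent.

```python
# Hypothetical training phrases, each paired with the text annotated
# as the time entity.
ANNOTATED = [
    ("Set the alarm for 6:00 a.m.", "for 6:00 a.m."),
    ("Wake me up at 7:00 a.m.", "7:00 a.m."),
]

# Words that should normally stay outside a time annotation (assumed list).
LEADING_WORDS = {"for", "at", "by", "around"}

def inconsistent_annotations(examples):
    """Return the (phrase, annotation) pairs whose annotation starts with a leading word."""
    flagged = []
    for phrase, annotation in examples:
        words = annotation.split()
        if words and words[0].lower() in LEADING_WORDS:
            flagged.append((phrase, annotation))
    return flagged

print(inconsistent_annotations(ANNOTATED))
# [('Set the alarm for 6:00 a.m.', 'for 6:00 a.m.')]
```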

In Summary

- Entities add specificity to conversations with a virtual agent.

- Dialogflow supports three types of entities: system, developer, and session.

- Enter entities into Dialogflow before intent phrases so that Dialogflow can properly identify the entities in the training phrases.

- Avoid making entities too complex so as not to degrade your bot’s performance.

- Identify all variations and synonyms on how to say things as you create your bot.

What’s Next

The next article in this series will appear in mid-September and will focus on how to enable Dialogflow’s speech understanding and voice synthesis capabilities so that you can use voice as the input and output interface for your bot.