With Google’s Contact Center AI (CCAI) coming up on its first birthday, Google today announced it’s putting some party clothes on the solution, introducing updates to two underlying elements: the Dialogflow suite for creating conversational interfaces, and Cloud Speech-to-Text technology.

Conversational AI, such as that enabled by Dialogflow, promises to have a considerable impact on how companies deliver individualized customer experiences at scale, as industry analyst Brent Kelly, of KelCor, has been exploring in his ongoing No Jitter series, Decoding Dialogflow. Messaging applications, speech-enabled assistants, intelligent virtual agents, and chatbots fall under the conversational AI umbrella, manifest in Google’s world as CCAI.

Google shared details on the five product updates in a blog post published this morning, reporting that the upgrades have the potential to boost speech recognition accuracy by more than 40% in some cases.

Contextual Understanding for Virtual Agents

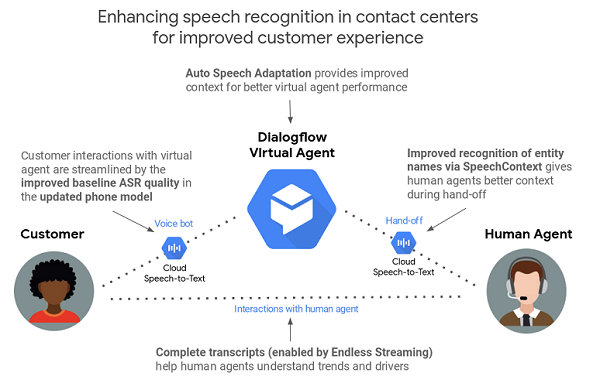

In Dialogflow, the focus is on improving speech recognition in virtual agents. Automated speech recognition (ASR), a necessity for virtual agents, is more difficult to do well on noisy phone lines than in a lab, with no guarantee of a positive customer experience even with near-perfect accuracy, as Dan Aharon, product manager, speech products, and Shantanu Misra, product manager, Dialogflow, noted in this morning’s post. To address these issues, Google is updating Dialogflow with Auto Speech Adaptation, the product managers wrote.

In essence, this update is about being able to deliver stronger contextual information to the virtual agent -- enabling it to better determine that a customer calling about a product return has said “mail” and not “male” or “nail,” they explained. Speech adaptation is the learning process involved in helping make virtual agents more contextually aware.

With Auto Speech Adaptation, Dialogflow will be able to help virtual agents understand context by accounting for all training phrases, entities, and other agent-specific information, the product managers wrote. Activating this feature, which is in beta, requires toggling the “Enable Auto Speech Adaptation” button from the default off setting to on.

As noted in the blog, Auto Speech Adaptation is proving a boon to Woolworths, the largest retailer in Australia with more than 100,000 employees. Woolworths, in conjunction with Google, has been building a virtual agent solution based on Dialogflow and CCAI, and has seen “market-leading performance right from the start,” said Nick Eshkenazi, chief digital technology officer for the retailer, in a prepared statement. As benefits, he cited accuracy of long sentences, recognition of brand names, “and even understanding of the format of complex entities, such as ‘150g’ for 150 grams.”

On top of that, Eshkenazi added, “Auto Speech Adaptation provided a significant improvement … and allowed us to properly answer even more customer queries. In the past, it used to take us months to create a high quality IVR experience. Now we can build very powerful experiences in weeks and make adjustments within minutes.”

Transcription Accuracy for Human Agents

Improving contextual information is also at the heart of a trio of enhancements for Cloud Speech-to-Text -- but here in support of human agents rather than virtual ones (although the enhancements will benefit customers using Dialogflow for voice-based virtual agents, too), the product managers wrote. The goal is to make manual tuning, which developers do through SpeechContext parameters, easier. The three SpeechContext updates are classes, boost, and an expanded phrase limit. All are in beta.

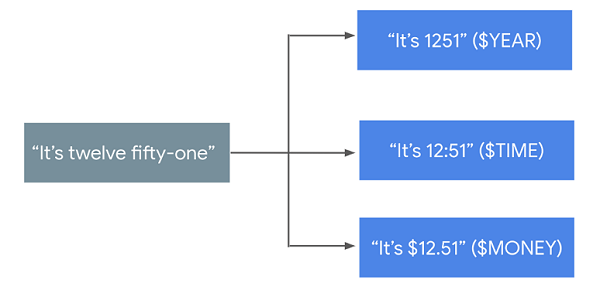

- Classes -- This feature delivers pre-built entities reflecting popular or common concepts, giving Cloud Speech-to-Text contextual information that helps it recognize and transcribe speech input more accurately. As an example, illustrated in the accompanying image, the product managers shared how use of classes could better refine a statement such as “It’s twelve fifty one” for transcription.

Google has created a number of classes for providing context around digit sequences, addresses, numbers, and money denominations, they wrote.

- Boost -- This feature will let developers set a speech adaptation strength for their use case, increasing the likelihood that Cloud Speech-to-Text captures certain phrases for transcription.

- Expanded phrase limit -- This update relates to the piece of the tuning process that allows developers to use phrase hints to increase the probability that the transcription engine will capture commonly used words or phrases associated with their businesses or vertical industries, the product managers wrote. With this update, Google has grown the maximum number of phrase hints per API request from 500 to 5,000. With the increase, developers will now be able to optimize transcription for the thousands of jargon words, including product names, that aren’t common in everyday conversation, they added.
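For developers wondering how the three SpeechContext updates fit together, the following is a minimal sketch, not Google’s own sample code. It assembles a recognition config as a plain Python dictionary and makes no API call. The field names (`speechContexts`, `phrases`, `boost`) follow the Cloud Speech-to-Text REST format as best understood at the time of writing, while the `$TIME` class token, the boost value of 10.0, the sample phrases, and the helper function names are illustrative assumptions that should be checked against Google’s current documentation.

```python
import json

# Expanded limit cited in the article (previously 500 phrase hints per request).
MAX_PHRASES_PER_REQUEST = 5000


def build_speech_context(phrases, boost=None):
    """Build one SpeechContext-style dict (hypothetical helper).

    phrases: phrase hints; entries may include class tokens such as "$TIME".
    boost:   optional adaptation strength; omitted from the dict when None.
    """
    phrases = list(phrases)
    if len(phrases) > MAX_PHRASES_PER_REQUEST:
        raise ValueError(
            f"{len(phrases)} phrase hints exceeds the limit of {MAX_PHRASES_PER_REQUEST}"
        )
    context = {"phrases": phrases}
    if boost is not None:
        context["boost"] = float(boost)
    return context


def build_recognition_config(contexts, language_code="en-US"):
    """Wrap SpeechContext dicts in a recognition config dict (hypothetical helper)."""
    total = sum(len(c["phrases"]) for c in contexts)
    if total > MAX_PHRASES_PER_REQUEST:
        raise ValueError(
            f"{total} phrase hints in total exceeds the limit of {MAX_PHRASES_PER_REQUEST}"
        )
    return {
        "languageCode": language_code,
        "speechContexts": list(contexts),
    }


# Classes: "$TIME" is assumed to be a valid class token that nudges the
# recognizer toward a time-of-day reading of "twelve fifty one".
time_context = build_speech_context(["$TIME"])

# Boost plus phrase hints: favor "mail" for a product-returns use case.
# The value 10.0 is an arbitrary illustrative strength.
returns_context = build_speech_context(["mail", "return label"], boost=10.0)

config = build_recognition_config([time_context, returns_context])
print(json.dumps(config, indent=2))
```

Keeping the limit check in the helper rather than relying on the service to reject the request is a design choice for this sketch; it simply surfaces an oversized hint list before any call is made.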

Additionally, Google announced Cloud Speech-to-Text baseline improvements for IVRs and phone-based virtual agents. Optimizing the phone model for the short utterances typical of such conversations has boosted model accuracy by 15% for U.S. English on a relative basis, on top of previously announced improvements, the product managers wrote. And, beyond the adaptation-related enhancements, Google has added support for endless streaming and the MP3 file format within Cloud Speech-to-Text. Both are in beta.