If there were a “term of the year” award for the communications, collaboration, and customer experience space, “generative AI” would have won 2023 in a landslide. This past year saw a slew of gen AI announcements from nearly every vendor in the market. But as the hype curve peaks, very real questions about gen AI remain heading into 2024, and the answers will determine whether the hype translates into actual adoption and customer benefit.

Will companies adopt it?

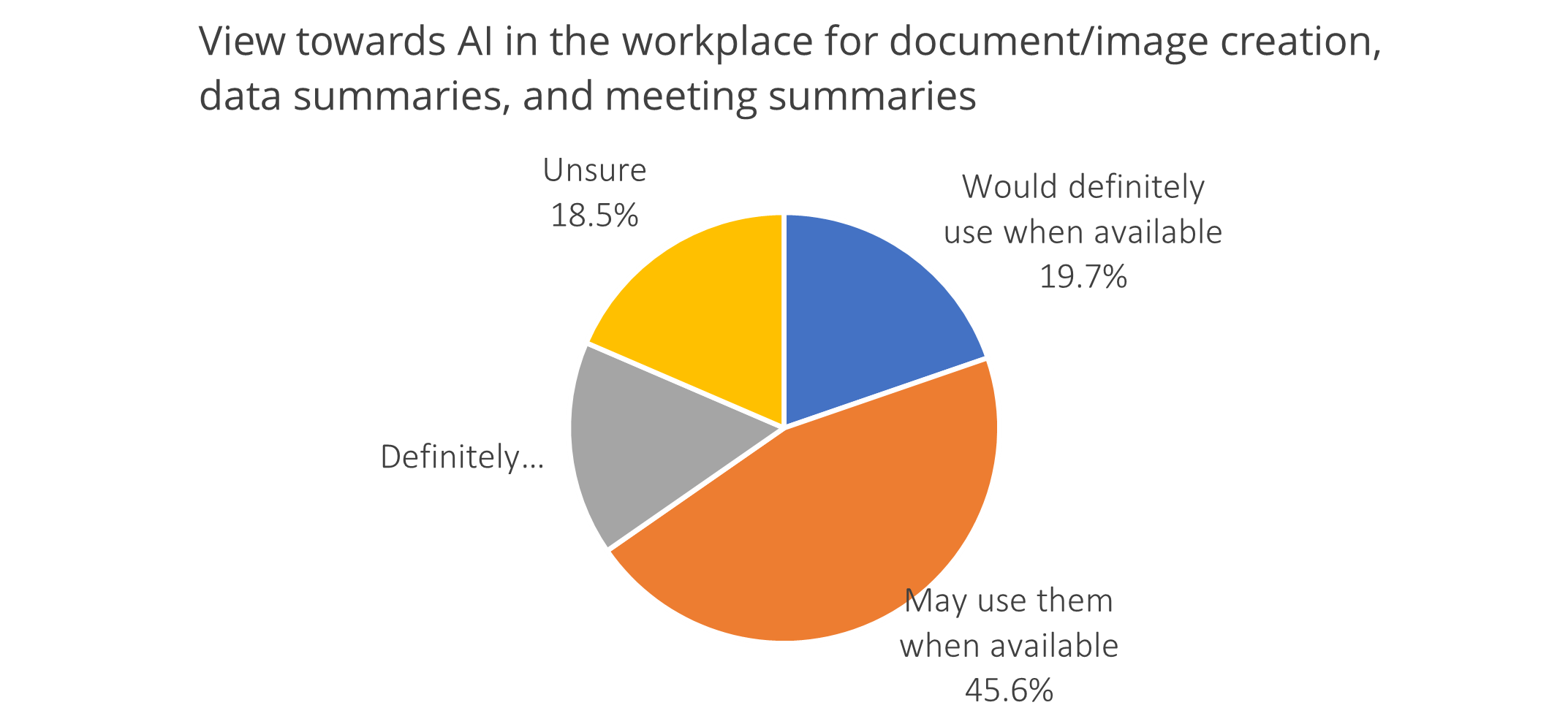

Metrigy’s Customer Experience Optimization: 2023-24 - Consumer Perspective study, conducted earlier this year among roughly 500 people in the United States, found widespread awareness of generative AI but significant concerns about privacy, security, and trust. Adoption plans were limited: just 19.7% said they would use generative AI for content creation and meeting/chat summarization if it were available, while 16.1% said they would not use it. Almost 41% of participants said that before they could trust gen AI, the technology would require human oversight of content creation as well as limits on how the tools collect data. Nearly 32% said they will never trust it.

Specific concerns include the accuracy of generative AI responses, the security of corporate information that may be available to large language models, and the privacy of information captured by generative AI tools, such as meeting transcripts and interaction critiques.

What’s the ROI?

As companies get their hands on generative AI assistants, they are beginning to focus on ROI, especially when evaluating vendors that charge for AI assistants. Our research over the years has shown that ROI for investments in collaboration applications and features is often difficult to quantify. Some companies track metrics such as average time spent on repeatable processes to see whether they can identify reductions. Other measures may include overall revenue, reductions in costs or staff time, or customer-interaction metrics such as first call resolution and customer satisfaction scores.

As generative AI assistants go beyond meeting and chat summarization and transcription, identifying ROI should get easier. For example, employees can point to improvements in data analysis and in the speed of content creation, and IT staff can measure reductions in mean time to repair (MTTR) and in time spent on administrative tasks when they use generative AI tools for application and network management.

Even companies adopting free tools will incur some associated costs: user and IT training, IT support and administration, and the work needed to meet security, governance, and compliance requirements for generated content such as meeting transcripts and chat summaries.

Does it work?

We’ve been using a generative AI meeting assistant at Metrigy for a few weeks now, and while the meeting summaries are extremely helpful and save time spent taking notes during a meeting, they do require some human intervention to fix errors in transcription.

For example, here’s a snippet of a summary of a recent meeting (names modified to protect the innocent):

The team also discussed creating templates specific emails. The team discussed the use of play-doh, toys, and color mixing.

Now, we have a lot of fun here at Metrigy, but we don’t typically use Play-Doh in our meetings (though some use it with their kids and grandkids!). It’s fun to share these kinds of errors internally, but obviously sharing meeting notes with clients requires some cleanup, reducing the time-savings value of the assistant.

Another issue we’ve identified involves meeting summarization for hybrid meetings, where some participants are remote and others are together in a meeting room. Most generative AI tools available today (Microsoft Copilot being the exception) cannot identify individual in-room speakers who share a microphone, so they attribute all conversation in the meeting room to a single person. Remote participants, by contrast, are identified individually because each joins the meeting as an individual. To address this problem, Microsoft has introduced a capability called Intelligent Speakers, developed in conjunction with microphone partners. The Microsoft approach requires capturing each person’s voice and associating it with their identity so that Copilot can distinguish active speakers within a room. However, capturing voice prints may raise security and privacy concerns.

How do we handle security and compliance?

The last major question to watch going into 2024 is how companies, especially those in regulated industries, will address generative AI content management. For example, if a meeting generates a transcript and summary, where is that stored? Who has access? How is it retained and made available for eDiscovery if required? How long is it retained? How does the company protect against data loss from inadvertent sharing of meeting information?

Beyond that, IT and business leaders continue to express concern about how models are trained and how company data is protected. We expect vendors to continue to emphasize, and differentiate on, their security, governance, and compliance capabilities and partnerships.

Conclusion

As we head into the second year of generative AI, it’s time to move from hype to benefit. Doing so will require ensuring that IT and business leaders understand the risks and rewards, have approaches for measuring benefit, and can deploy generative AI tools in a manner consistent with security and compliance requirements.

About Metrigy: Metrigy is an innovative research and advisory firm focusing on the rapidly changing areas of workplace collaboration, digital workplace, digital transformation, customer experience, and employee experience, along with several related technologies. Metrigy delivers strategic guidance and informative content, backed by primary research metrics and analysis, for technology providers and enterprise organizations.