Yellow.ai announced Orchestrator LLM, a generative AI agent model that can determine “the most suitable next step while engaging in personalized, contextually aware conversations.” Orchestrator LLM is a customer-facing chatbot that can analyze customer conversations, recognize multiple conversational intentions, and guide users toward resolving their service or support issue.

To do so, Orchestrator LLM accesses past interactions (customer history) and uses those previous queries to add context to the current customer conversation. Orchestrator LLM does not require prior training and can determine how best to respond to a customer’s requests, perhaps by retrieving information from a knowledge base, initiating a new conversational flow, or escalating to a live agent.

No Jitter (NJ) had a few questions regarding how Orchestrator LLM worked. Yellow.ai CEO Raghu Ravinutala responded via email.

NJ: The press release says that Orchestrator LLM needs “zero training” and has no requirement for “prior training.” What does that mean? When we spoke with Yellow.ai back in January, you said Yellow.ai's solutions use fine-tuned models trained on enterprise data…and then retrieval augmented generation (RAG) on top of that to ground the responses.

Raghu Ravinutala (RR): Orchestrator LLM is another in-house LLM by Yellow.ai trained on top of Llama 2.0, an LLM that is capable of intent identification based on a prompt or description. Hence, when added to a bot, it doesn’t require training, as this LLM takes care of intent identification, helping the bot understand user queries with higher accuracy. It can recognize intent, drive the conversation to a knowledge base question, manage casual conversation, including small talk, and smoothly transition from off-topic discussions back to the main subject while leading the conversation.

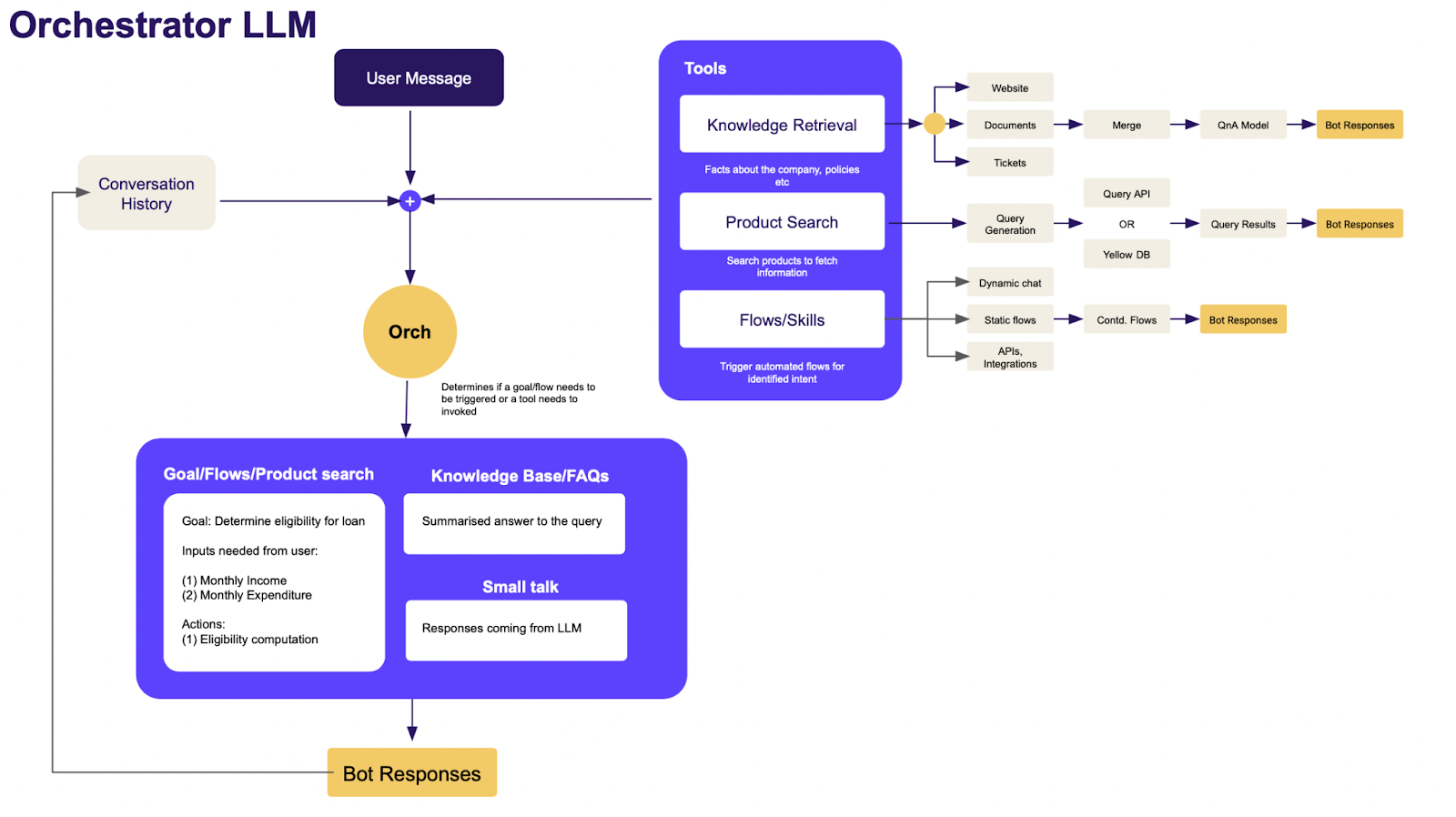

NJ: I’m looking at the architecture graphic on the Orchestrator page and I don’t understand what it’s illustrating. Is the Orchestrator LLM an LLM that acts like a person and directs/assigns other models to handle interactions? Or is the Orchestrator LLM itself the “AI agent” that handles different incoming interactions and then decides what tool (knowledge base, product search, live agent) to deploy to handle those interactions?

RR: When a user request is received, all the necessary information regarding tools and conversational flows, documents in the knowledge base, and past conversational history are provided in the form of a system prompt, which the AI agent model will process automatically. This is how Orchestrator LLM decides its action based on the intent in the user's message and responds accordingly.

The architecture [diagram] showcases the journey of a customer query giving multiple options depending on the intent. For instance, if the customer query is on a particular product, the Orchestrator LLM will identify that intent, trigger the ‘Product Search,’ fetch the appropriate response, and respond to the customer, all dynamically.
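As a rough illustration of the mechanism Ravinutala describes, the Python sketch below assembles tool descriptions, knowledge base document titles, and conversation history into a single system prompt. It is not Yellow.ai's implementation: the `Tool` and `BotContext` classes, the `build_system_prompt` function, and the prompt wording are all hypothetical, and conversational flows are treated here as just another kind of tool.

```python
from dataclasses import dataclass, field


@dataclass
class Tool:
    # Hypothetical description of a tool or conversational flow.
    name: str
    description: str


@dataclass
class BotContext:
    # Hypothetical container for everything the model is shown.
    tools: list[Tool]
    knowledge_docs: list[str]
    history: list[str] = field(default_factory=list)


def build_system_prompt(ctx: BotContext) -> str:
    """Combine tools, knowledge base titles, and history into one prompt."""
    tool_lines = [f"- {t.name}: {t.description}" for t in ctx.tools]
    doc_lines = [f"- {d}" for d in ctx.knowledge_docs]
    history_lines = ctx.history or ["(no prior messages)"]
    return "\n".join(
        [
            "You are a customer support agent. Pick one tool per message.",
            "Tools:",
            *tool_lines,
            "Knowledge base documents:",
            *doc_lines,
            "Conversation history:",
            *history_lines,
        ]
    )
```

In a setup like this, the resulting string would be sent to the model along with the newest user message, and the model's reply would name the tool to run.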

NJ: How does Orchestrator “decide”? What are those decisions based on?

RR: Each bot operates on a system prompt containing the tools’ description and the user's conversation history, enabling it to determine the appropriate tool to activate in response to incoming user messages. These decisions hinge upon user intent – e.g., cancel an order, connect with an agent, or retrieve an account ID.
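To make the idea of intent-driven tool selection concrete, here is a minimal Python sketch using the three example intents Ravinutala mentions. Everything in it is an assumption for illustration: the handler functions, the `TOOLS` table, and especially `classify_intent`, which uses simple keyword matching as a stand-in for the model call that Orchestrator LLM would actually make.

```python
from typing import Callable, Optional


def cancel_order(message: str) -> str:
    return "Your order has been cancelled."


def connect_agent(message: str) -> str:
    return "Connecting you with a live agent."


def get_account_id(message: str) -> str:
    return "Your account ID is on its way to your registered email."


# Hypothetical mapping from intent name to the tool that serves it.
TOOLS: dict[str, Callable[[str], str]] = {
    "cancel_order": cancel_order,
    "connect_agent": connect_agent,
    "get_account_id": get_account_id,
}


def classify_intent(message: str) -> Optional[str]:
    """Stand-in for the LLM: keyword matching, purely for illustration."""
    text = message.lower()
    if "cancel" in text:
        return "cancel_order"
    if "agent" in text:
        return "connect_agent"
    if "account id" in text:
        return "get_account_id"
    return None


def handle(message: str) -> Optional[str]:
    """Run the tool matching the detected intent, or return None if none matches."""
    intent = classify_intent(message)
    if intent is None:
        return None
    return TOOLS[intent](message)
```

For example, `handle("Please cancel my order")` runs the hypothetical `cancel_order` tool, while a message with no recognized intent returns `None` and leaves the next step to the bot designer.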

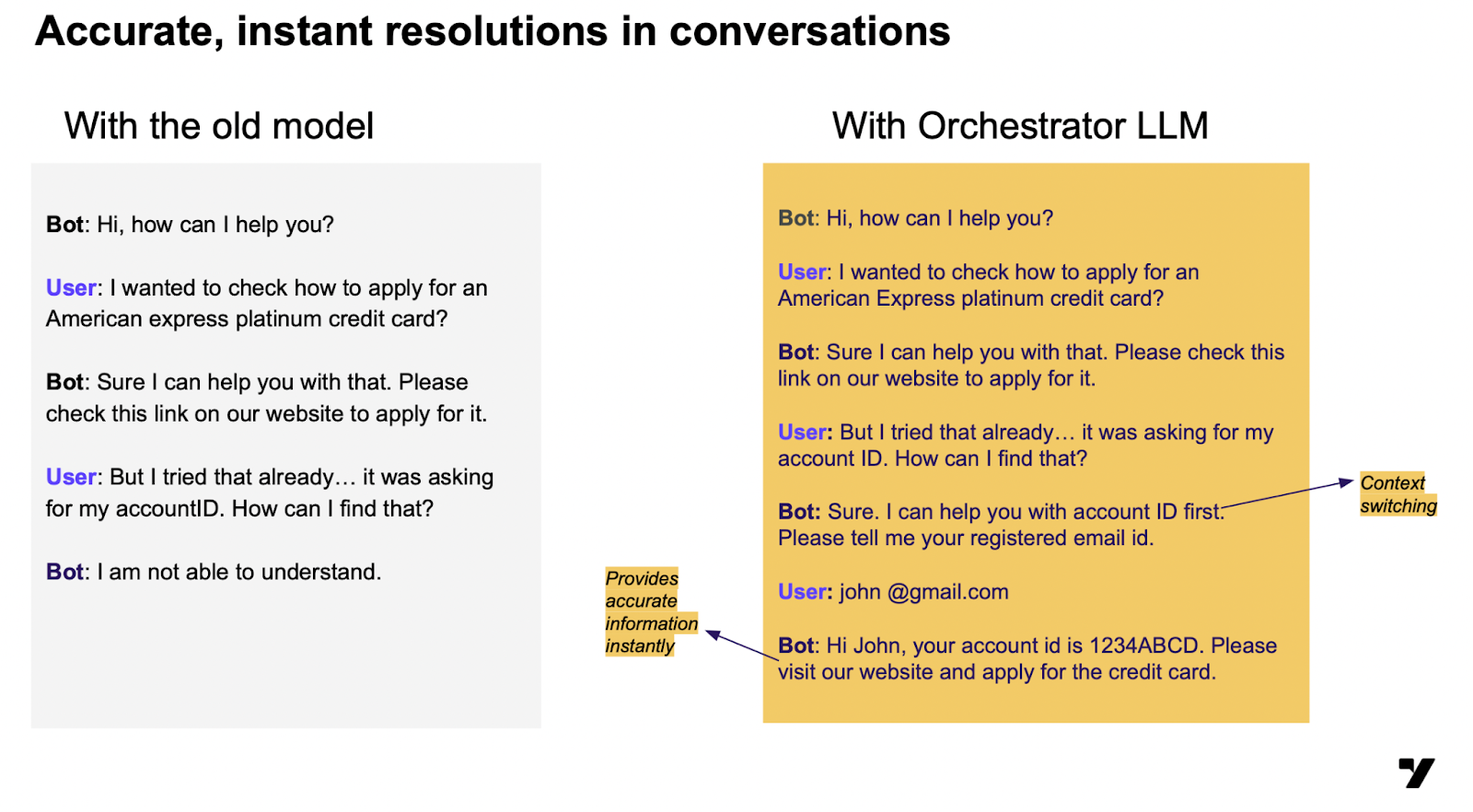

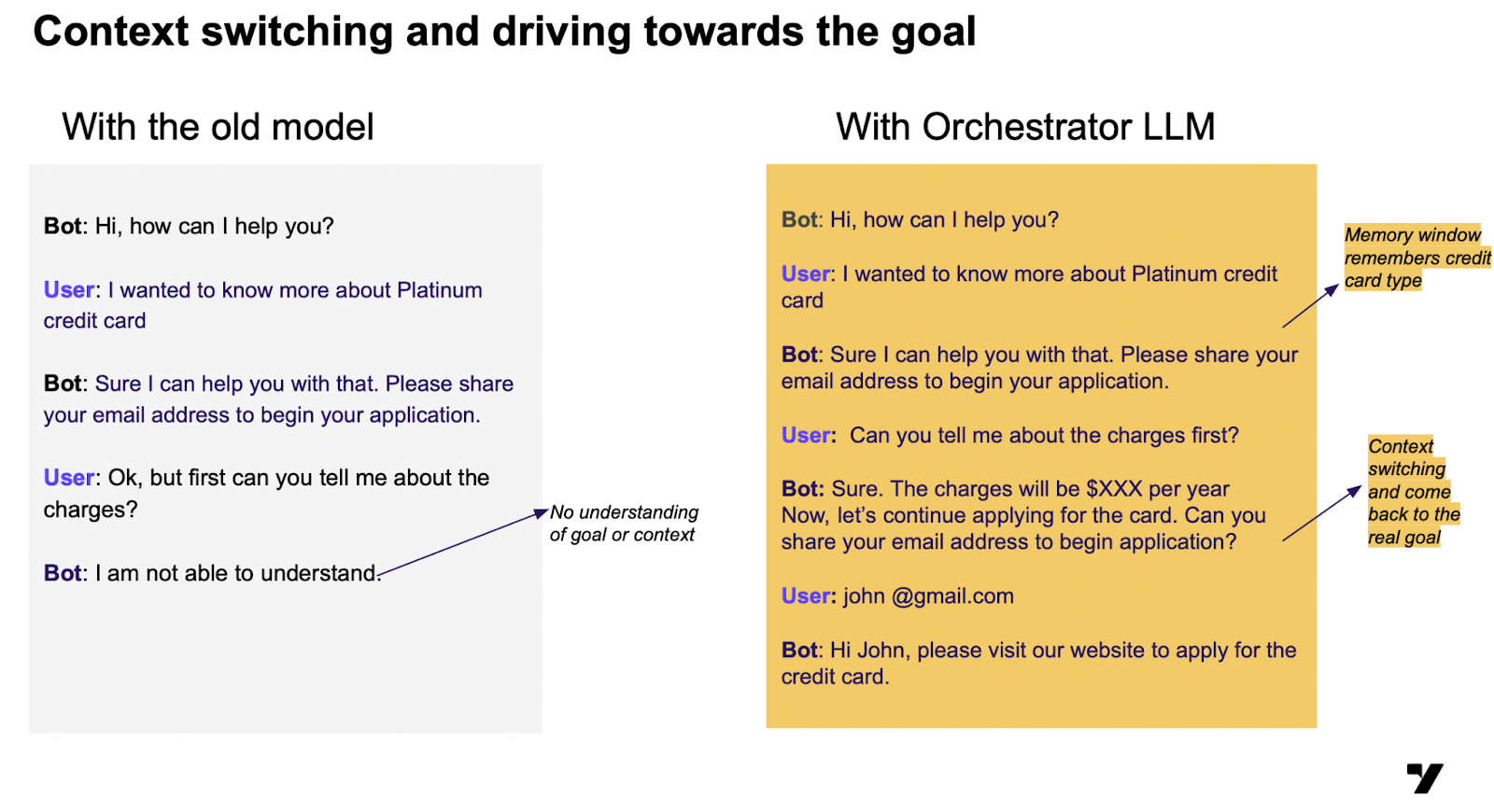

(NJ: The graphics above include sample conversations, provided by Yellow.ai, to help illustrate that process. They compare the capabilities of a traditional chatbot to the experience Orchestrator LLM can provide.)

NJ: What does ‘system prompt’ mean? And, how does the enterprise make sure Orchestrator doesn’t say something it shouldn’t?

RR: The term 'system prompt' is just a way to describe how things work. Orchestrator LLM can orchestrate across a number of goals at the user level, across multiple AI tools or models that could be from third party vendors or other in-house Yellow.ai LLMs, and across enterprise applications like payment, CRM etc.

Orchestrator LLM gathers information from existing tools, past conversations, and the latest message to create a prompt. This prompt is then used to generate a response, which makes the conversation much more dynamic and fluid. By leveraging Orchestrator LLM-enabled bots, enterprises or brands can handle many more customer queries than they could in the past, and can also handle contextual switching.

Orchestrator LLM allows enterprises complete control over the actions and answers that the bot can give. It identifies the intent of a particular user message from the actions and answers defined in the bot. Any answers not defined in the bot will still go to knowledge base retrieval or fallback, restricting the bot from answering anything unwanted.
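One way to picture that restriction is the Python sketch below, which only returns answers the bot designer has defined, then tries a knowledge base lookup, and otherwise gives a fixed fallback reply. This is an assumption about how such a guardrail could be arranged, not a description of Yellow.ai's code; `DEFINED_ANSWERS`, `KNOWLEDGE_BASE`, `FALLBACK`, and both functions are hypothetical, and the sample answers are made up.

```python
from typing import Optional

# Hypothetical answers the enterprise has explicitly defined in the bot.
DEFINED_ANSWERS = {
    "cancel_order": "Your order has been cancelled.",
    "connect_agent": "Connecting you with a live agent.",
}

# Hypothetical knowledge base, keyed by topic phrase (sample content only).
KNOWLEDGE_BASE = {
    "refund policy": "Refunds are issued to the original payment method.",
}

FALLBACK = "Sorry, I can't help with that. Could you rephrase your question?"


def search_knowledge_base(message: str) -> Optional[str]:
    """Naive substring lookup standing in for real retrieval."""
    text = message.lower()
    for topic, answer in KNOWLEDGE_BASE.items():
        if topic in text:
            return answer
    return None


def respond(proposed_intent: str, message: str) -> str:
    """Answer only from defined answers, then the knowledge base, then fallback."""
    if proposed_intent in DEFINED_ANSWERS:
        return DEFINED_ANSWERS[proposed_intent]
    kb_answer = search_knowledge_base(message)
    return kb_answer if kb_answer is not None else FALLBACK
```

Because every reply comes from one of three enterprise-controlled sources, a model that proposes an undefined intent (say, `tell_joke`) can never produce free-form output in this arrangement.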

Want to know more?

No Jitter spoke with Yellow.ai’s Ravinutala earlier this year.