The Voice & AI show kicked off this week in Washington, DC, and No Jitter (NJ) attended some of the conference sessions virtually. This article summarizes some of the key points made in two of the day one sessions. In his session, Ryan Steelberg emphasized the human factor in generative AI. In another session, Bret Kinsella showcased his idea that the large language model (LLM) “wars” are a proxy for the bigger battle among the major cloud providers.

Humans In, On and Out of the Loop

In his presentation, "Synthetic Media and the New Industrial Revolution," Ryan Steelberg, CEO and President of Veritone, talked in part about the need to work as an industry to develop AI, and generative AI solutions in particular, ethically and responsibly. “We are in the middle of helping set these standards,” he said. “It's not just up to the big hyperscalers.”

Steelberg also discussed how generative AI is likely to change the way people are involved in producing content – referencing the ongoing writers’ strike in Hollywood – but emphasized that generative AI is fundamentally about empowering humans, not replacing them. Not yet at least, and not for all roles, though Steelberg did lay that out as one likely result of the AI revolution, which he characterized as the “fifth” Industrial Revolution.

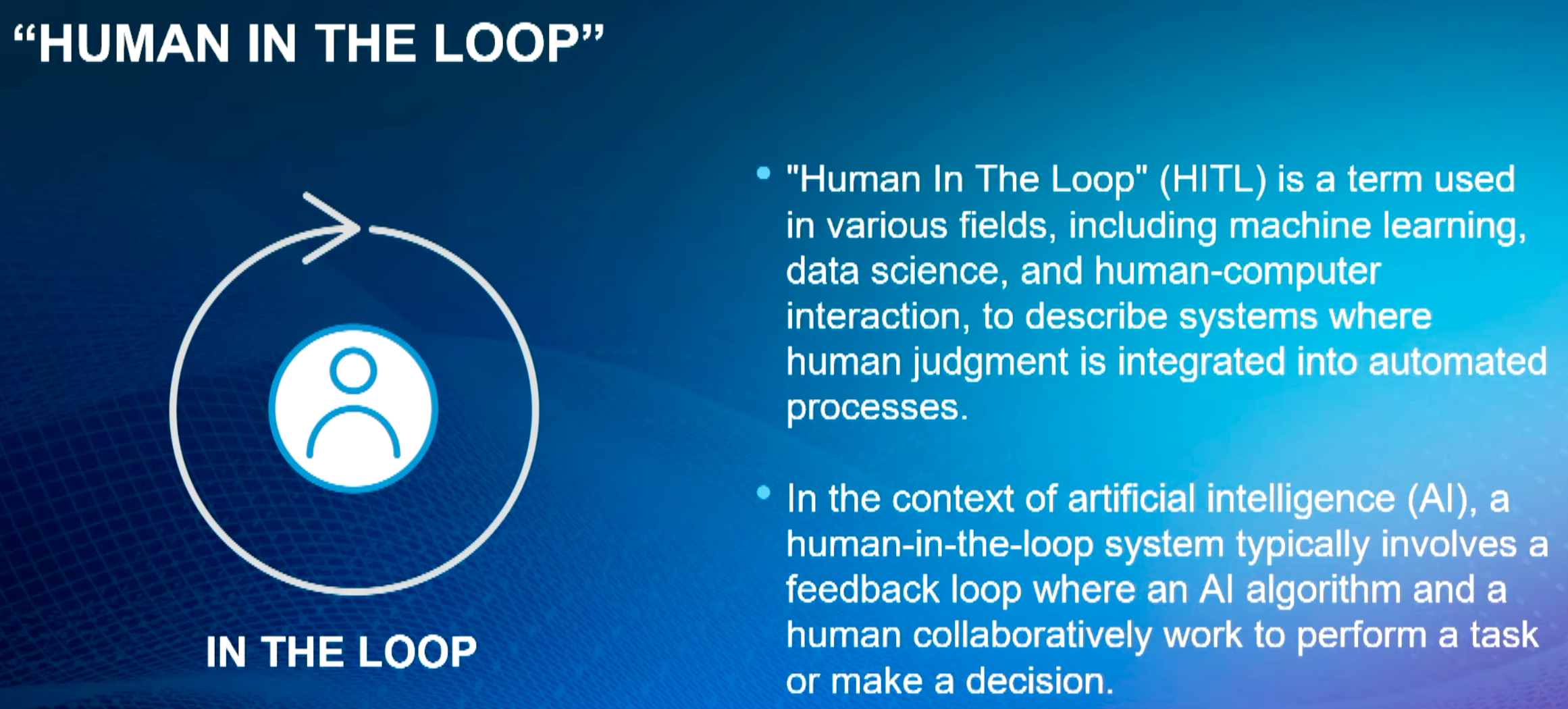

“For our entire history, humans have been ‘in the loop’ – we are part of the process,” he said. And what he meant by that, as illustrated in the following graphic, is that humans are “required or even demanded to be part of the equation.”

This is where many of the existing “agent assist” and summarization use cases of generative AI technology play a role, which NJ has discussed in its conversation with Genesys, to name just one example. In these cases, the agent is using (or not) what the generative AI-powered solution presents.

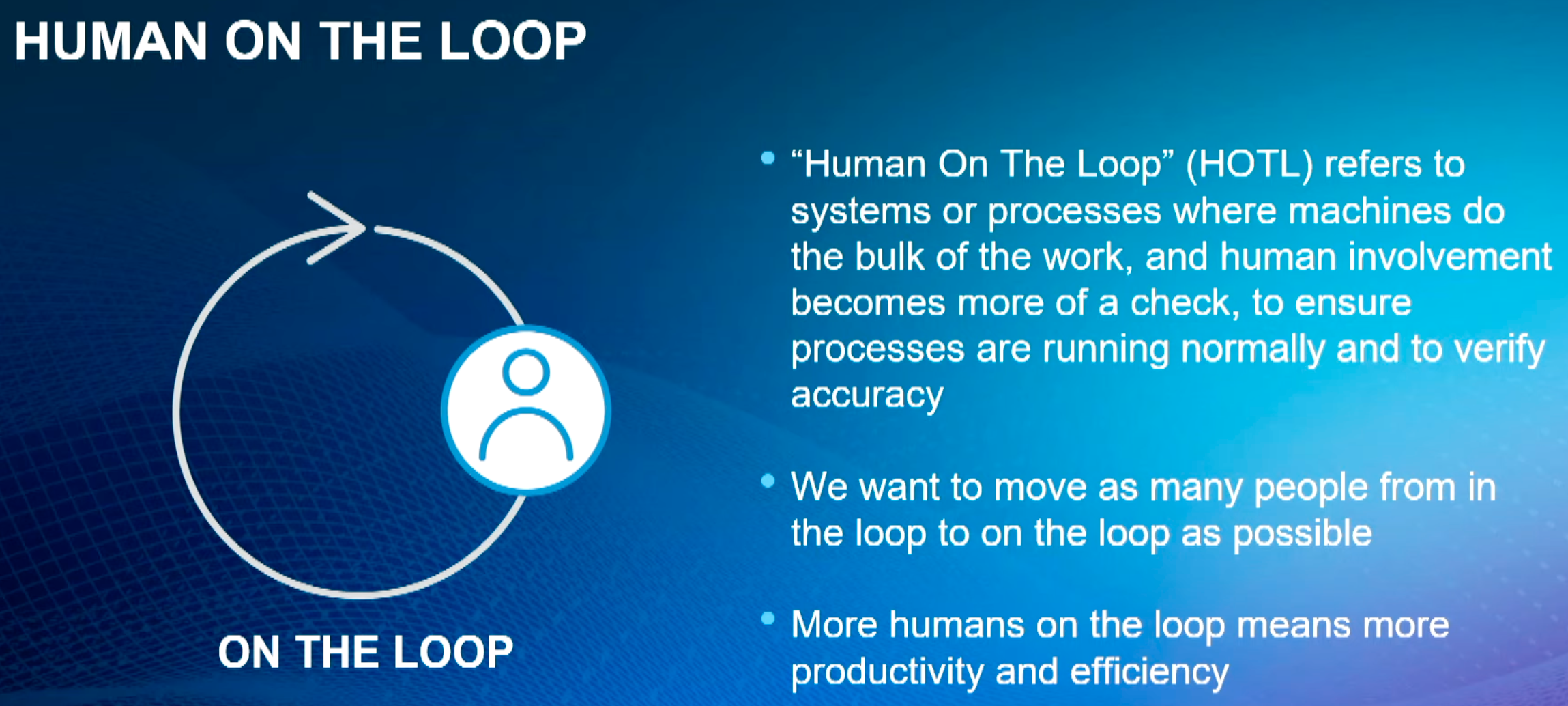

Steelberg argued that this approach can also incorporate what he calls “human on the loop,” where the machine does most of the work while the human involvement is a check against what the AI generates. Conceptually, this is similar to an assembly line which, incidentally, was part of what he defined as the second Industrial Revolution. Steelberg noted that “process automation has been doing this [human in the loop] for years.”

But with respect to AI, “people turn into supervisors, they're monitoring the [AI], they are a gateway – but it's a slow migration from being in the loop to being on the loop.”

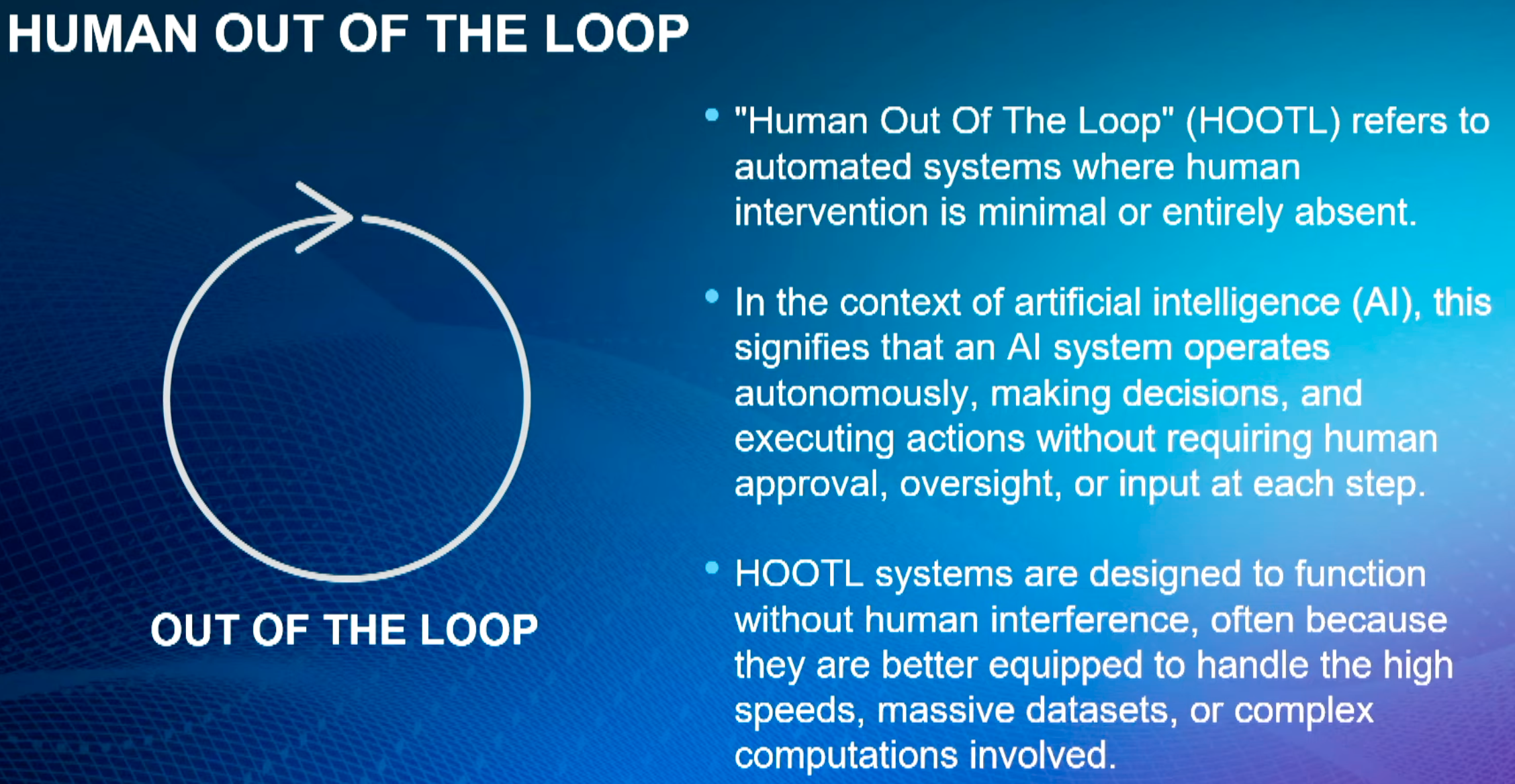

And there are use cases in which there is no need for the human to be in the process at all, which can be “scary to a lot of groups [when] there is no need for human effort relative to the process.”

When generative AI is layered on top of these concepts, Steelberg said, “we have to understand the impact these solutions are going to have in our businesses, on our staff, in our communities, and in our nation.”

But that awareness ties back to how Steelberg began his presentation by placing AI, and generative AI in particular, as the “fifth” Industrial Revolution. He made the point that what is so different today is the rapid pace at which technological changes occur relative to hundreds of years ago when the pace of innovation and adoption was much slower, yet we benefit from those foundations.

Similarly, the rapid innovation in and adoption of generative AI benefit from the pervasiveness of the Internet, cloud computing and hyperscalers, fiber and cellular networks, etc. But just as there were great benefits to the prior Industrial Revolutions, so too were there negative and, in many cases, unintended consequences.

“I guarantee that because of the speed at which we're throwing [generative AI solutions] out there, there's going to be carnage at some point,” Steelberg said. “I do believe passionately that ultimately, it'll be for the betterment of our communities, our companies and our society. But let's be clear, we are writing history together as a group.”

Generative AI and the Ongoing Cloud Provider War

In his presentation, "Natural Language is Eating the World - How Generative AI is Reshaping Customer Experience, Enterprise Operations, and Everything Else," Bret Kinsella, CEO and Founder of Voicebot.ai, summarized the key ways companies are putting generative AI to use: respond, resolve, discover and create/innovate.

Those broad trends include many of the uses covered by NJ: meeting summarization, agent assistance, transcription, help with writing emails, documents and presentations, and analysis of large amounts of data to identify trends and/or patterns.

“The people who actually get the most out of these products are the ones who are already skilled,” Kinsella said. “So [generative AI] upskills and brings the floor up for people who aren't skilled in certain areas, but people who are the most skilled will also do better.”

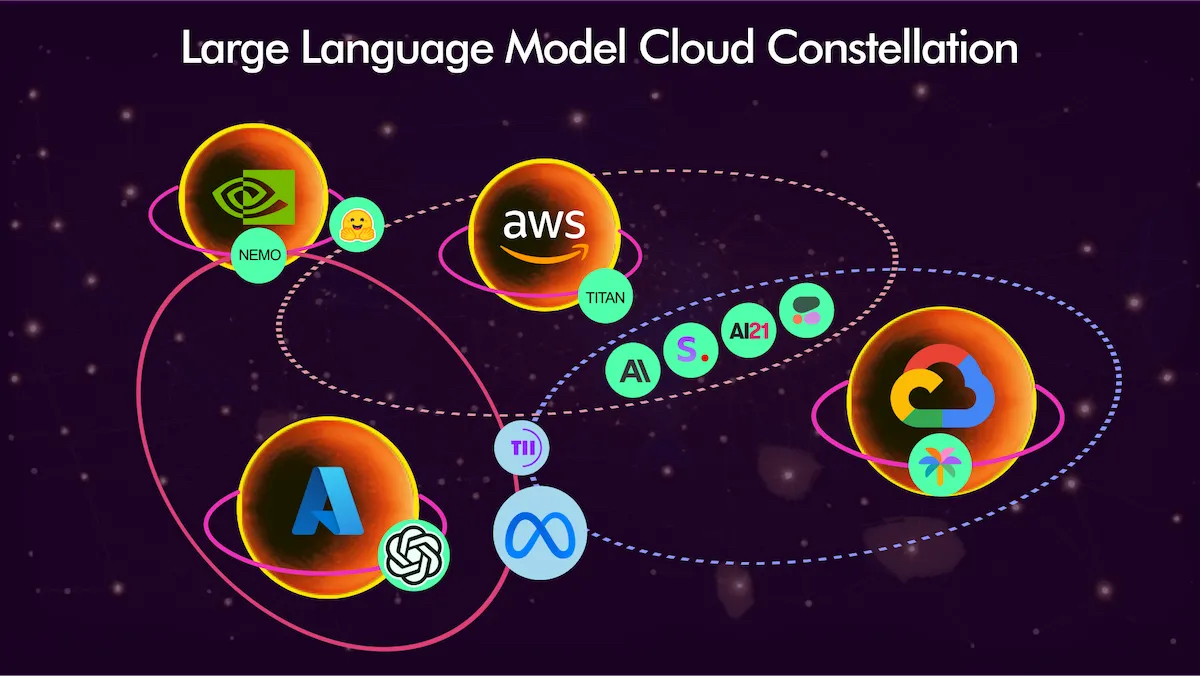

Perhaps one of his most insightful comments is illustrated in the “constellation” graphic below. Those are the big cloud hosting companies and the foundation models (also called large language models or LLMs) gravitationally captured, so to speak, by those hyperscalers.

“The most important companies in this space right now are [not] the foundation model providers,” Kinsella said. “They're actually the big cloud hosting companies and so these are the ecosystems they have.”

From left to right, there is Nvidia with its NeMo framework and Hugging Face, which is a platform that provides “tools that enable users to build, train and deploy machine learning models based on open-source code and technologies” (source).

The next “planet” is AWS with its own Titan models and its Bedrock approach which, among other things, facilitates enterprises choosing which LLMs they want to use (see this NJ article for more detail). Some of the other model providers illustrated include AI21 Labs, Stability.ai, Anthropic, and Cohere, and these are also tugged upon by Google Cloud.

The main satellite of Google Cloud is Google’s own PaLM 2; the more distant one is TII’s Falcon. Microsoft, of course, has its Azure OpenAI platform and is tightly tied with OpenAI itself.

Meta’s approach is a bit different with Llama 2, which is “open source” but there is some contention about how open it is: “few ostensibly open-source LLMs live up to the openness claim” (per the linked IEEE article). This article, too, disputes the open source claim.

“When we think about these ‘generative AI wars’ or the ‘LLM battles,’ they're really [at least in part] proxy wars [among] the cloud providers,” Kinsella said. “But the big dog here is actually Nvidia because they all rely on Nvidia GPUs.”

Want to know more?

- Bret Kinsella posted on his Substack, Synthedia, in much more detail regarding his idea that the “LLM wars exist in the larger context of the cloud computing wars.”

- Google Cloud has added Meta’s Llama 2 and Anthropic’s Claude 2 chatbot to its platform.