Welcome to the second of our Conversations in Collaboration. We began with Cisco's Lorrissa Horton, who talked about the company's approach to data security in AI.

This month, No Jitter (NJ) spoke with Behshad Behzadi, Vice President of Engineering, Generative Conversational AI, Google Cloud about the use of generative AI (Gen AI) at Google, in its Contact Center AI offering and, more broadly, across enterprises.

The Gen AI category has a small number of foundational models, also referred to as large language models (LLMs), which are being used in many applications and use cases such as generating text, images, and speech. One of the biggest categories for generative AI in the contact center space involves finding ways for the technology to make online customer interactions easier and more productive. “This could be on websites with chatbots helping you find your answers quicker,” Behzadi said.

(Editor's Note: Artificial intelligence has a lot of specific, descriptive terms. Download our handy guide to AI vocabulary.)

But he also drew attention to another area where Gen AI could provide value. “Or maybe you have an expert, virtual assistant inside a company helping employees with all types of everyday questions. Or when someone new joins a company, it’s typically several months before they’re productive, so that virtual assistant could help them when they get stuck.” (Editor’s Note: See the NEC news in No Jitter Roll.)

According to Behzadi, another category where Gen AI is proving useful is working with complex structured and unstructured datasets. The models can basically make “all those complex things searchable and accessible, which helps you get insights out of them and make decisions on that data,” he said.

A third category of Gen AI use cases involves leveraging Gen AI to generate content more quickly – everything from articles, summaries (meetings, etc.) and marketing materials to code. “As a coder and developer, you start typing parts of your code, and then you all of a sudden see the next several lines of code predicted – it just appears. It’s magical.”

Prepare for the Takeover of the Bots

This ties into another of Behzadi’s key points: “It's getting cheaper and faster to get to value for making a chatbot or a voice bot which can actually take over a non-small fraction of your calls.”

The key phrase there is “take over” because speech recognition and natural language understanding (NLU) – which are both types of AI – have been used for many years to triage contact center calls and/or provide self-service options. (Keep this point in mind because Behzadi circles back to it later in the conversation.)

In his keynote at Enterprise Connect 2023, Behzadi demonstrated a fully autonomous, AI-powered voice interacting with a human to provide real-time assistance over the phone. That AI used Google Cloud’s Universal Speech Model (Chirp) to speak, but it was Gen AI under the proverbial hood that figured out what the human was saying and then generated the responses.

While that was impressive as a demonstration of what may eventually be possible, Behzadi said in this conversation that there “are certain guardrails and certain business logic that must be exactly respected. That’s really where the bigger part of our work goes on and, of course, making sure it is safe. You don’t want it using toxic language or becoming racist.”

He continued. “This is not new work here. We have Google Assistant and much of the work done in really understanding all those, you know, types of responsible AI filters will apply also here to Gen AI.”

How to Ensure Responsible AI

NJ asked more specifically about the guardrails Behzadi mentioned. He first distinguished between the available consumer-focused, Gen AI products versus the enterprise-class, cloud-based AI products on offer from companies such as Google.

“People, when they talk Gen AI today, it’s around ‘my employees are using this, entering company information, etc.’ That’s obviously not good, but that’s a consumer product not a cloud product,” he said. “When a company builds and uses Google Cloud there is no [Google] usage of any customer relation interaction information, data is not being used for training the models nor is it being used for any other customer.”

He went on to describe how the base model (Google’s LLM) that is used by an enterprise is frozen across customers. If the customer wants to “optimize [their own instance] through fine tuning and adapt it to their own use cases, then they can do that, and it would only be for their brand.” (Keep this point in mind, too.)

In a follow-up email, Google further stated that for a given query the model first performs a search and retrieval on the enterprise’s relevant documents, and then the LLM tries to generate an answer grounded in those retrieved results. The enterprise controls which of its documents are accessible to the LLM.
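To make that retrieve-then-ground sequence concrete, here is a minimal Python sketch. It is not Google's implementation: the word-overlap scoring, the function names (`retrieve`, `build_grounded_prompt`), and the sample documents are all hypothetical, and no actual LLM is called. It only illustrates the two ideas in Google's description: retrieval is limited to documents the enterprise has allowed, and the prompt asks the model to answer from the retrieved passages.

```python
import re


def tokenize(text):
    """Lowercase a string and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, allowed_ids, k=2):
    """Return up to k allowed (doc_id, text) pairs, ranked by a toy
    relevance score: the number of words shared with the query."""
    query_tokens = tokenize(query)
    scored = []
    for doc_id, text in documents.items():
        if doc_id not in allowed_ids:
            continue  # the enterprise controls which documents are visible
        overlap = len(query_tokens & tokenize(text))
        if overlap > 0:
            scored.append((overlap, doc_id, text))
    # Highest overlap first; ties broken by document id for determinism.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for _, doc_id, text in scored[:k]]


def build_grounded_prompt(query, passages):
    """Assemble a prompt asking the model to answer only from the passages."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the passages below. "
        "If they do not contain the answer, say so.\n"
        f"{context}\n"
        f"Question: {query}"
    )


# Hypothetical enterprise documents; only two are exposed to the model.
DOCUMENTS = {
    "returns-policy": "Customers can return items within 30 days of delivery.",
    "shipping-faq": "Standard shipping takes five business days.",
    "internal-pricing": "Internal margin targets for items and returns.",
}
ALLOWED_IDS = {"returns-policy", "shipping-faq"}

passages = retrieve("How many days do I have to return items?", DOCUMENTS, ALLOWED_IDS)
prompt = build_grounded_prompt("How many days do I have to return items?", passages)
```

In a real deployment the overlap score would be replaced by a proper search index, and the assembled prompt would be sent to the model; the access check on `allowed_ids` is the part that corresponds to the enterprise deciding which documents the LLM can see.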

Google covers AI security and safety in its Security AI Framework (SAIF), which is inspired by the security best practices Google has applied to its own software development while also incorporating its understanding of some identified security mega-trends and risks and how they might relate to AI systems.

This document summarizes some of the threats related to AI and how Google recommends defending against them. For example (NJ is paraphrasing), organizations should monitor the inputs and outputs of generative AI systems to detect anomalies and create a personalized threat profile (Google has a solution for this) to anticipate attacks. One potential type of attack, prompt injection, resembles SQL injection, and organizations can adapt existing mitigations (input sanitization and limiting) to help defend against it. Google also notes that attackers will likely use AI to attack, so defenders must do likewise.
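As a rough illustration of what “input sanitization and limiting” can look like in front of a Gen AI system, consider the Python sketch below. It is an assumption-laden example rather than anything taken from SAIF: the 500-character cap, the `sanitize_input` function, and the `RateLimiter` class are hypothetical, and measures like these reduce exposure without preventing prompt injection on their own.

```python
from collections import defaultdict, deque

MAX_PROMPT_CHARS = 500  # hypothetical cap on user input length


def sanitize_input(text, max_chars=MAX_PROMPT_CHARS):
    """Replace non-printable characters, collapse whitespace, cap the length."""
    printable = "".join(ch if ch.isprintable() else " " for ch in text)
    collapsed = " ".join(printable.split())
    return collapsed[:max_chars]


class RateLimiter:
    """Allow at most max_requests per caller within a sliding time window."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # caller_id -> recent timestamps

    def allow(self, caller_id, now):
        """Return True and record the request if the caller is under the limit.

        `now` is a timestamp in seconds, passed in explicitly so the
        behavior is easy to test.
        """
        timestamps = self._history[caller_id]
        # Forget requests that have aged out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False
        timestamps.append(now)
        return True


limiter = RateLimiter(max_requests=2, window_seconds=60)
cleaned = sanitize_input("Hello\x00\n  world")
```

In practice a caller would pass `time.monotonic()` as `now`, and a rejected or truncated request would also be logged, which ties back to the recommendation to monitor inputs and outputs for anomalies.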

How Google Enables Business Logic Within Its Bots

Then, looping back to a question NJ asked about how the Gen AI system is made to adhere to safety, compliance and business logic requirements, Behzadi referenced Google CCAI’s existing Dialogflow, which enables the building of virtual agents to handle concurrent text/audio conversations with customers. And as that linked documentation states, “A Dialogflow agent is similar to a human call center agent. You train them both to handle expected conversation scenarios, and your training does not need to be overly explicit.”

During his keynote at EC23, Behzadi gave this example: when a human is hired to work in a contact center, you don't teach them common sense and English. You just tell them the role-based rules they need to follow, and they learn role-specific competencies via experience on the job. He made similar comments in this conversation.

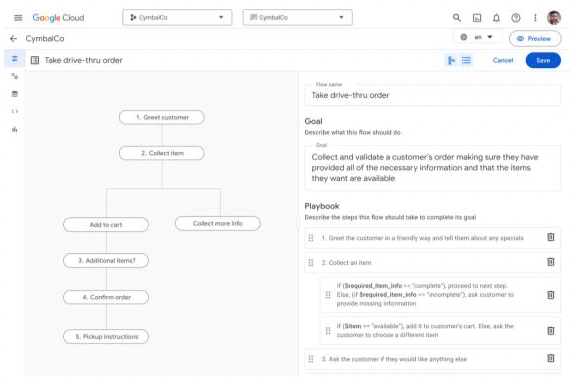

This graphic illustrates how a Gen AI bot can be created. The panel on the left shows the interaction flow. The panel on the right shows how natural language can be used to specify the logic while the AI dynamically handles the potential paths an end-user might take.

“These models have common sense and we have built a system where you can still say this is exactly the business logic you need to follow – not 98% but 100% follow,” Behzadi said. “And you can count on the brain of the model to handle exceptions without having to code for a super complex flow of all possibilities.”

He then expanded on that point with an example which NJ has paraphrased: A company creates a voice bot that asks, “What is your credit card number?” If the customer had anticipated that question as part of the workflow, they would input that information. But if the customer said, “I don’t have my wallet, let me go get it,” the voice bot might respond with, “I’m sorry, I don’t understand, can you say what your credit card number is again?”

The “old world” bot could be programmed to tell the customer to have their card handy, and then programmed to wait if the customer says “okay, let me get it,” etc. But that was Behzadi’s basic point. In that “old world,” every foreseeable contingency would have to be programmed, which would involve creating a complex flow of possibilities; that work might be easier or more difficult depending on the tool being used. Either way, it would take extra time to create that flow and then test it.

“In the new Gen AI world, the bot says, ‘take your time, I'm here when you’re ready,’” Behzadi said. “The point is you don’t need to encode all of that just like you wouldn’t have to with a person.”

How Google Sees Generative AI Changing the Contact Center

NJ also asked Behzadi about the impact of introducing Gen AI solutions into the contact center and what sentiment analysis could do. (Editor’s Note: See the links below.)

“Contact center automation is maybe the top application of conversational AI directly giving you important cost savings,” he said. “But beyond that, agents are under lots of pressure. They get lots of similar calls – angry people, very often. There are studies around the happiness of agents and many actually leave before one year mostly because of those pressures.”

Gen AI helps in that regard by shadowing agents and offering possible answers, analyzing sentiment, summarizing the interaction, and otherwise making suggestions – “it’s like having a senior, knowledgeable supervisory agent always available to you all the time,” Behzadi said.

Not only might this process improve agent productivity and possibly agent happiness (which might reduce turnover and lead to lower costs over time), but with the Gen AI technology and tools in place, other options become available – recall what Behzadi said earlier about generating insights from datasets.

“If you have a particular agent who is, for example, not very good at explaining a certain deal, you can imagine having these generative AI bots play the role of a trainer,” Behzadi said. “You have it call that agent 10 times in a row and have it ask the agent to explain that deal. It becomes a very personalized experience that helps them become more confident and productive.”

Those insights can be extended into other areas so that the company can begin to understand more broadly what’s going on, such as what topics are talked about and what business areas are getting better or could use more attention.

Behzadi stressed the idea that even if the Gen AI model iterates so that it gets more powerful over time by watching the agents and learning from them, the humans must remain fully in control.

“Maybe those improvements are happening, for example, through human feedback or automated learning, and the model has learned new things. You still want to run a ‘diff’ on a sample of 1,000 conversations or whatever so you can compare the before to the after,” Behzadi said. “That way you can say, okay, it looks better, the new responses can go into production. In my opinion, you would want to have those controls.”
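The kind of before-and-after “diff” Behzadi describes could be sketched in Python roughly as follows. This is only an illustration under stated assumptions: `diff_responses`, `old_bot`, and `new_bot` are hypothetical names, the two bots are stand-in functions rather than real models, and the similarity figure from the standard library's `difflib` is just one simple way to help a human reviewer sort the changes.

```python
import difflib


def diff_responses(prompts, old_respond, new_respond):
    """Run both model versions on the same prompts and collect the
    conversations whose responses changed, for human review."""
    changed = []
    for prompt in prompts:
        before = old_respond(prompt)
        after = new_respond(prompt)
        if before != after:
            # Ratio in [0, 1]; lower values mean a bigger textual change.
            similarity = difflib.SequenceMatcher(None, before, after).ratio()
            changed.append(
                {
                    "prompt": prompt,
                    "before": before,
                    "after": after,
                    "similarity": round(similarity, 2),
                }
            )
    return changed


# Stand-ins for the current and the updated bot (hypothetical).
def old_bot(prompt):
    if "wallet" in prompt:
        return "I'm sorry, I don't understand."
    return "Your order ships tomorrow."


def new_bot(prompt):
    if "wallet" in prompt:
        return "Take your time, I'm here when you're ready."
    return "Your order ships tomorrow."


sample = ["Where is my order?", "I need to get my wallet."]
report = diff_responses(sample, old_bot, new_bot)
```

Here `report` holds only the wallet conversation, since that is the one response that changed. A reviewer would look through such a report on a sample of around 1,000 conversations before approving the new responses for production, which keeps the human in control of what ships.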

Moving forward, Behzadi said that Google is working on ways to “watermark” Gen AI content so that end users, or customers, can understand which content is human generated or auto generated.

“It's important for every company and every customer to try and understand the implications of these models and how to be very careful about all these things,” Behzadi said. “That's another area where we’re working closely with our customers and really applying what we call being really bold, but responsible.”

Want to know more?

Check out these articles and resources: