Nvidia kicked off its all-digital GPU Technology Conference (GTC) yesterday, April 12, and true to form made a number of product-related announcements across the AI spectrum, including the news that its Jarvis conversational AI framework is now generally available.

For enterprise communications/collaboration professionals interested in conversational AI, it’s important to understand what Jarvis is and isn’t. Jarvis isn’t just a general-purpose speech-to-text or translation engine. Rather, it’s a complete set of pre-trained deep learning models and software tools developers across a wide range of industries can use to create interactive conversational AI services.

Nvidia has been working for years on a highly accurate voice AI system. The company’s CEO, Jensen Huang, has called conversational AI the “ultimate AI” because it is so difficult. Image classification and some aspects of video AI are easier by comparison, because their models can be trained relatively quickly: images are static, and an AI can quickly learn the difference between a cat and a dog. Voice, by contrast, requires an understanding of dialects, slang, accents, and more.

Many, many vendors have built conversational AI systems, and some have done a decent job. However, conversational AI systems often struggle with domain-specific jargon, such as legalese or medical terminology. Nvidia says Jarvis avoids that problem and achieves best-in-class accuracy because it was built using over a billion pages of text and tens of thousands of hours of speech data across different languages, accents, and vertical industries. The English model alone was trained on more than 60,000 hours of speech in 20 accents.

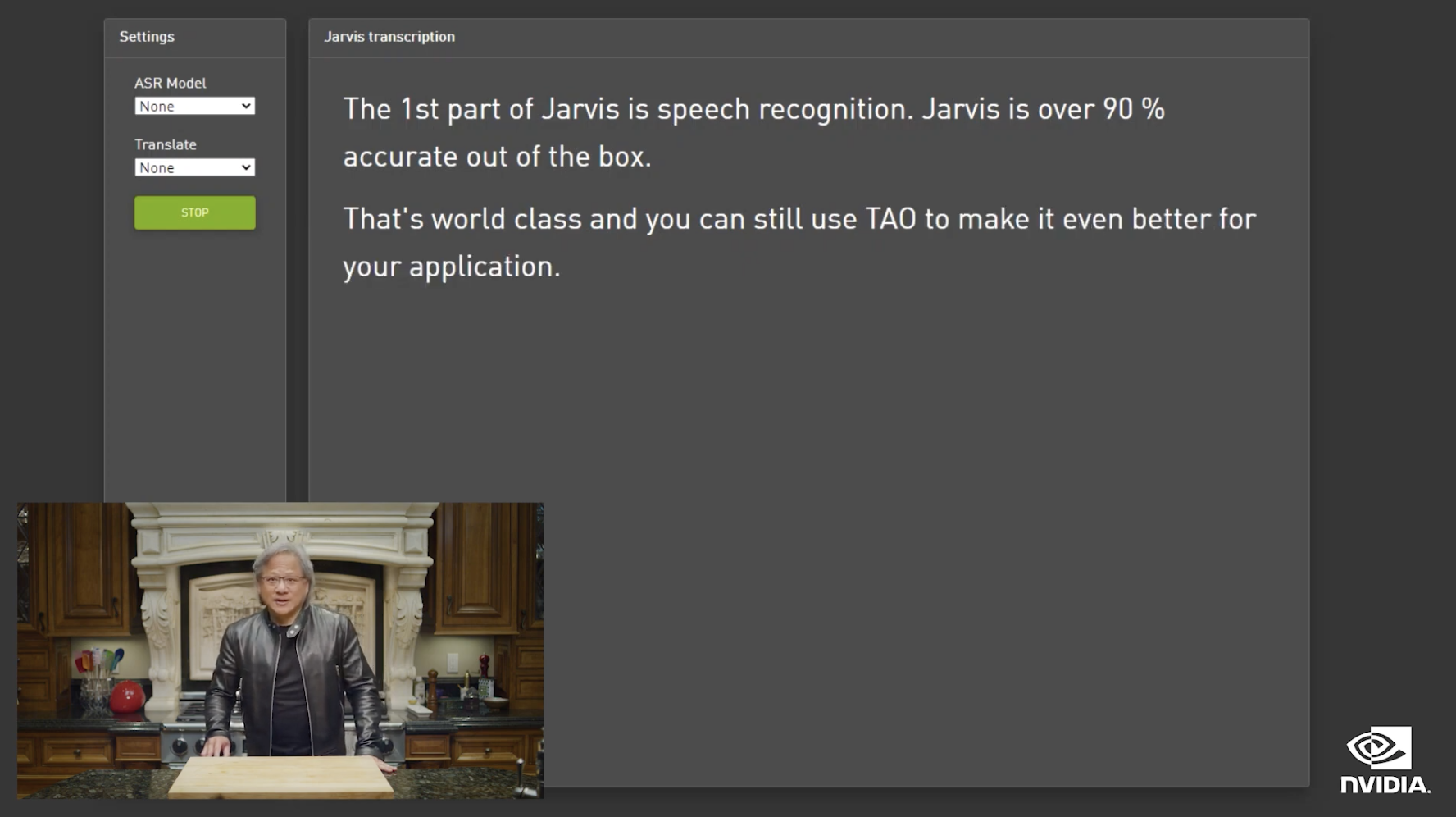

During his keynote, Huang highlighted data showing 90% accuracy for Jarvis on three data sets: customer call center validation sets; a range of podcasts covering topics like money, flights, Kubernetes, and architecture; and what Nvidia referred to as academic data from sources like The Wall Street Journal and LibriSpeech. However, a key point about AI is that it doesn’t have to be 100% accurate out of the box. In fact, developers can often use bad data as part of the training set to speed up the process. By understanding how the models were trained and what their word error rate is, including which words Jarvis came close to getting right and which it missed, Nvidia customers can retrain the model for a better experience.
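For readers who want to see what word error rate actually measures: it is conventionally the number of word substitutions (S), deletions (D), and insertions (I) needed to turn a system’s transcript into the reference transcript, divided by the number of words in the reference (N), so WER = (S + D + I) / N. The sketch below is a generic Python illustration of that standard metric using word-level edit distance. It is not Nvidia’s evaluation code, and the function name `word_error_rate` is hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # dp[i][j] = minimum word edits to turn ref[:i] into hyp[:j]
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution (or match if cost is 0)
            )
    return dp[len(ref)][len(hyp)] / len(ref)
```

For example, `word_error_rate("reset my kubernetes cluster now", "reset my cuba net ease cluster now")` returns 0.6: one substitution and two insertions measured against five reference words. Seeing which words were substituted, as with “kubernetes” here, is the kind of detail that points to where a model needs domain-specific retraining.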

With Jarvis, customers don’t have to build their models from the ground up. Nvidia offers zero-code tools that let them adapt Jarvis to their own domains without the burden of hiring deep learning engineers. A newly announced GUI-based framework called TAO (train, adapt, optimize) encapsulates the entire workflow of adapting and optimizing the AI models and facilitates transfer learning. TAO also incorporates federated learning, which lets multiple parties collectively train a shared global model on more diverse data while keeping each party’s data private.
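Federated learning is described only at a high level here. One widely used formulation is federated averaging (FedAvg): each party trains a copy of the global model on its own data, sends back only the updated weights, and a coordinator averages those weights, weighting each party by how many samples it holds. The Python sketch below illustrates that idea with a toy two-parameter linear model. It is an assumption-laden illustration of the general technique, not how TAO implements federated learning, and the function names are hypothetical.

```python
from typing import List, Tuple

Weights = List[float]
Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one party


def local_update(w: Weights, data: Dataset, lr: float = 0.01, epochs: int = 5) -> Weights:
    """Train a toy model y = b + m * x on one party's private data with gradient descent."""
    b, m = w
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to b and m
        grad_b = sum((b + m * x) - y for x, y in data) * 2 / n
        grad_m = sum(((b + m * x) - y) * x for x, y in data) * 2 / n
        b -= lr * grad_b
        m -= lr * grad_m
    return [b, m]


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average the parties' weights, weighting each by its number of local samples."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[k] * n for w, n in updates) / total for k in range(dim)]


def train_round(global_w: Weights, parties: List[Dataset]) -> Weights:
    """One round: every party trains locally; only weights reach the coordinator."""
    updates = [(local_update(global_w, data), len(data)) for data in parties]
    return federated_average(updates)
```

Calling `train_round` repeatedly for two parties whose private data both follow y = 2x + 1 (say, one holding x values 0 to 2 and the other 3 to 4) should move the global weights toward [1, 2], even though neither party ever shares its raw (x, y) pairs.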

In addition to automatic speech recognition, Jarvis provides translation between a number of languages, including English, French, German, Spanish, Russian, Mandarin, and Japanese, with more coming. One of Jarvis’s most interesting features is the controllability and expressiveness it brings to virtual agents and assistants. The latest version lets developers control the pitch (how high or low the voice is) and the tempo (the pace of speech), and even mix speakers, if enough data is available. This flexibility should vastly improve the quality of interactions between humans and digital assistants.

In early release for over a year, Jarvis has been downloaded more than 45,000 times. One of the first adopters is T-Mobile, which is using Jarvis to improve its customer service. “We are always looking to drive the greatest experience for our customers, and to do so, we realized a few years ago we had to reinvent how we were treating customers,” Matt Davis, VP of products and technology at T-Mobile, shared in a briefing. He went on to explain how Jarvis gives customer-facing employees “superpowers,” enabling them to provide better service while doing less work. Jarvis does much of the heavy lifting, such as translation and quickly triaging what the customer needs, along with other tasks, he added.

Nvidia also announced a partnership with Mozilla Common Voice, one of the world’s largest multi-language voice datasets, which is now available to everyone. During his keynote, Huang urged everyone to contribute recordings to Mozilla Common Voice to help make universal translation possible.

On a related note, Nvidia connected the dots between Jarvis and Maxine, its collaboration AI framework introduced last October. Maxine provides a range of functions to make video meetings better, including super resolution, body pose estimation, noise removal, translation, and transcription. Jarvis is the voice AI that powers Maxine, so customers should expect the same rich conversational experience in future video meetings.

Last year, Avaya announced it was using Maxine in its Spaces team collaboration product. At this year’s GTC, Pexip and Touchcast revealed they are adopting Maxine. Pexip will use Maxine much as Avaya does, with the Nvidia technology bringing advanced capabilities to its enterprise video solution. I expect that, in the very near future, these AI capabilities will be table stakes, and any vendor that doesn’t have them will be significantly behind.

Touchcast is using Maxine to create what it says is the world’s first AI-powered events platform. Given the shift from physical to at least partially virtual events, Touchcast could have a huge impact on making hybrid events better. The AI-powered Touchcast platform will let event presenters “beam” out of their home offices and into mixed reality sets without needing a green screen.

Watch the video in this news release for a peek at what Touchcast is working on for its event platform.

It’s safe to say that the AI era for communications is here, and it will forever change the way we collaborate and provide customer service. Nvidia’s “magic” lies in simplifying the technology and making it accessible to all developers and companies, effectively democratizing AI so that it becomes ubiquitous.