With only 25% of business leaders and 13% of consumers fully trusting generative AI, the Biden administration’s Executive Order (EO) governing the development and use of AI may appear to be a welcome development.

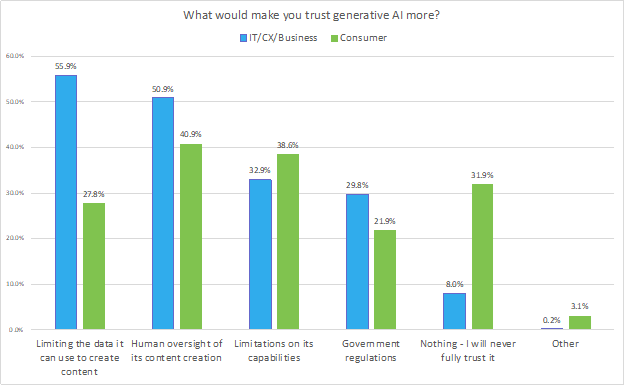

However, according to Metrigy’s Customer Experience Optimization 2023-24 global study of 641 companies, along with its Consumer Perspectives study of 502 consumers, most business leaders and consumers do not view government oversight as a panacea. In fact, only 30% of business leaders and 22% of consumers surveyed say government regulations would make them trust generative AI more. (Although the EO applies to all types of AI, the rapid pace of generative AI’s adoption and advances presumably prompted the administration to act.)

IT, customer experience (CX), and business unit leaders see other measures as more effective at increasing their trust in AI. These include limiting the data AI can use to create content (56%) and human oversight of content creation (51%). Consumers agree on the latter (41%), along with limits on AI’s capabilities (39%). But in the end, consumers are four times more likely than IT, CX, or business unit leaders to never fully trust AI, so efforts to win over consumers are paramount.

During our interviews with research participants, most said they would prefer that AI be governed by a standards body not run by the government. That body would bring together tech vendors, academic leaders, researchers, IT leaders, and government officials to jointly address areas of concern. They said a standards body would be more efficient than a government task force or agency.

Challenges of the EO

So does an executive order provide value? It may help, if executed properly, but it won’t eliminate risks. And it could do more harm than good by stifling U.S. innovation. Falling behind other countries in AI development because of government bureaucracy would put the country at a disadvantage in a variety of areas, including defense, healthcare, energy, and technology innovation.

Not surprisingly, the government must provide more detail on the EO’s provisions and how they will be applied, and agencies (including the National Institute of Standards and Technology, the Department of Homeland Security, and the Departments of Energy and Commerce) have been assigned those tasks. However, they won’t move quickly: the specifics behind the EO’s rules will take up to nine months to establish. And with AI driving new products, services, and applications by the minute, the landscape will change faster than regulations can keep up.

Even once the detailed guidelines are established, how enforceable will they be, given that they won’t be laws? In the meantime, tech vendors have agreed to some voluntary precautions in developing AI models. But the tech vendors aren’t necessarily the problem. The bad actors are, and bad actors don’t play by the rules.

AI for Customer Experience

In the CX world, 40% of companies cite content creation as the most valuable use of AI, according to Metrigy’s research. The EO calls for the Department of Commerce to develop guidance for content authentication and watermarking to label AI-generated content. Federal agencies will use these tools for their own communications to citizens, hoping to set an example for the private sector. Authentication is a reasonable measure to protect copyrighted materials or artists’ original work. But what about content generated within the guardrails of a company’s own knowledge base? Authentication seems unlikely to be needed there, but again, it raises the question of where the government will draw the line, and how much the EO could slow innovation.

In line with what our research participants want, the executive order does impose limits on AI’s capabilities by, for example, requiring developers of foundation models to share their safety testing results with the government, though it’s unclear what, specifically, will fall under these requirements. Safety testing in areas covered by the Defense Production Act, along with any regulations addressing homeland security, is a legitimate and reasonable use of government effort. But will AI platforms used for customer interactions or employee communications require security testing? And what about applications that ride on top of those platforms?

In our study, 35% of business leaders cited data privacy as a concern about generative AI. The EO calls on Congress to pass data privacy legislation. It also calls for protecting Americans’ privacy by preserving the privacy of AI training data, and for funding a Research Coordination Network to advance rapid breakthroughs while protecting privacy.

Generative AI’s Impact on Jobs

Where tech vendors and buyers will see some relief is in loosened requirements for foreign workers, which will make it easier for companies to hire experts in AI development. However, applying AI raises another problem: 25% of business leaders are concerned that generative AI will take jobs from people.

Here, the EO directs actions (but doesn’t specify who is responsible for executing them) to “develop principles and best practices to mitigate the harms and maximize the benefits of AI for workers by addressing job displacement; labor standards; workplace equity, health, and safety; and data collection. These principles and best practices will benefit workers by providing guidance to prevent employers from undercompensating workers, evaluating job applications unfairly, or impinging on workers’ ability to organize.” This is perhaps the least enforceable section of the EO, and its vagueness and lack of a responsible party merit skepticism.

AI has already displaced jobs and will continue to do so, but it is also creating them. Our research shows that companies not using AI in their customer engagement initiatives will have hired 2.3x more agents in 2023 than those using AI. However, the agents who remain are seeing their compensation rise, both because they are handling more complex customer issues and because 53% of companies now apply sales quotas to their service agents. The government can’t prevent employers from undercompensating workers; the free market will.

Government’s Example Is Lacking

The EO also pushes government agencies to leverage AI by issuing guidance on agencies’ use of AI, helping them acquire AI products and services faster and more affordably, and accelerating the hiring of AI professionals. The Biden administration issued a “Citizen CX Mandate” in late 2021 to accelerate digital-first strategies, gather and analyze customer feedback, and modernize the contact center. AI could go a long way toward achieving these mandates.

However, our research shows that the government is not leading by example. Only 42% of federal, state, and local governments increased their CX spending from 2022 to 2023, compared with 65% across all industries. Government agencies lag well behind other sectors in deploying virtual assistants to help citizens: only 16% are using or plan to use them in 2023, vs. 45% across all industries. Likewise, they lag on agent assist, with only 21% using the technology compared with 52% across all industries. And fewer than 30% of government agencies offer self-service channels, vs. half of all industries.

That consumers and industry practitioners would welcome standards and guidelines that make AI more trustworthy and reduce the likelihood of misuse is not in doubt. Whether a government that isn’t leading by example is the best authority to provide them is. So far, the order lacks the detail needed to establish responsible parties, enforceability, and accountability, and it is at odds with some of Metrigy’s key research data: it isn’t addressing what our research has identified as practitioners’ real concerns.