Pexip was among five companies selected as finalists in the “Overall” category for the 2020 Best of Enterprise Connect Award. You may have heard of Pexip, but you probably are not familiar with Adaptive Composition, the innovative video framing technology the company submitted for judging. In this post, I’ll explain what the technology does, and why it is compelling.

Pexip in Context

Pexip is a video platform and services provider established in 2012. The company’s founders had deep roots in Tandberg, a Norwegian-headquartered video communications solution provider acquired by Cisco in 2009 for $3 billion. Since its founding, Pexip has had a mission of empowering people to be seen and to engage with each other in a better way. The company’s motto is that any user on any platform should be able to connect with any other.

Pexip created video meeting software, branded Pexip Infinity, for installation on premises or by service providers that could then offer their own hosted video services. At the end of 2018, Pexip merged with Videxio, a Pexip partner that offered a hosted video communications and collaboration service to enterprises under the Videxio brand and to service providers under white-label branding agreements.

Today, Pexip provides video meeting products and services in three categories:

- An end-to-end video meeting solution that can be deployed on premises, as a service, or as a private instance in the Microsoft Azure, Amazon Web Services, or Google Cloud Platform clouds. This solution is based on the Infinity platform.

- Video infrastructure (i.e., video bridging and transcoding) as well as APIs that allow Pexip video solutions to be embedded into or integrated with third-party solutions

- Video gateways for interoperability with Microsoft Teams and Skype for Business, as well as Google Meet

Pexip targets large enterprise, government, and healthcare organizations, reporting that it has 3,400 customers whose headquarters are located in 73 different countries. Pexip users can be found in 190 countries. The company claims 10 of the Global Fortune 50 and 15% of Global 500 companies as customers. In 2019, Pexip had a 99% customer retention rate along with revenue of $42.1 million; 97% of Pexip’s revenue is recurring.

Earlier this month, on May 14, Pexip had a successful initial public offering of its stock, raising $100 million. The entire process, from the investor roadshow to meetings with bankers to the opening bell ringing, was conducted virtually using Pexip’s own meeting solution.

Pexip Adaptive Composition: Framing All Participants Properly

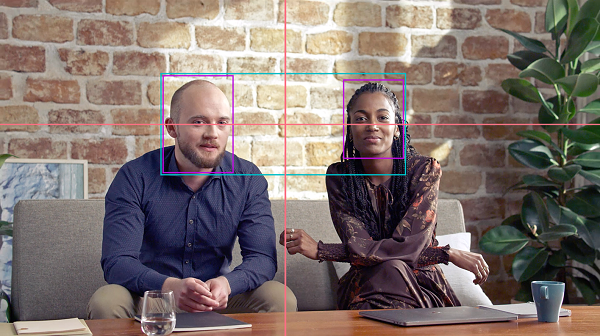

Adaptive Composition uses artificial intelligence (AI), transcoding, and audio levels to frame the faces of everyone in a video meeting so they appear at roughly the same size. Other video providers also do intelligent framing using facial recognition, but this is often done in the camera head or on the local endpoint so that while the person or group using that endpoint is framed properly, others in the meeting may not be.

Pexip’s Adaptive Composition is unique in that it frames everyone in the meeting properly regardless of the camera, client, or endpoint they use. Old systems work, systems without 4K cameras work, and even systems that have cameras with intelligent framing work with Adaptive Composition.

Pexip’s technology borrows concepts from compositional rule sets used in the film and TV industries to crop, zoom, pan, tilt, and center the people in a video image regardless of whether there is a single individual or a group and irrespective of which video device they use. Adaptive Composition auto-arranges the layout to position the largest groups in the meeting at the top of the combined image to ensure that every participant can be seen. More active groups and individuals rise to the top of their respective layout level.

The net result is that the people in a meeting room will appear to be at about the same size as an individual displayed in the gallery window. Of course, a caveat is that if the meeting room is deep, meaning that some people are close to the camera and others are far away, the image will be zoomed out to allow viewing of everyone in the image.

Under the Adaptive Composition Hood

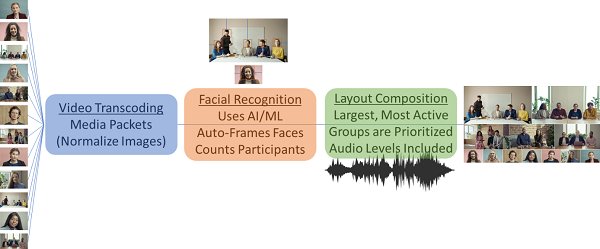

As mentioned above, Adaptive Composition combines AI, transcoding, and audio levels to place meeting participants in a visual layout that frames people properly. Here’s more detail on how it works.

The Infinity software features a neural network that examines every image sent to the Infinity server several times per second. The sampling frequency depends upon the motion detected in the image: If people are moving around a lot, it may sample more often. If the image is fairly stable, the neural network may examine the image less frequently.
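To make the motion-dependent sampling concrete, here is a minimal Python sketch. It is not Pexip’s implementation: the function name `next_sample_interval`, the 0-to-1 motion score, and the interval bounds of 0.1 and 0.5 seconds are all assumptions chosen only to match the “several times per second” description.

```python
def next_sample_interval(motion: float,
                         fastest: float = 0.1,
                         slowest: float = 0.5) -> float:
    """Return the number of seconds to wait before analysing a stream again.

    `motion` is a hypothetical score clamped to [0, 1]: 0 means a still
    image, 1 means heavy movement. More motion gives a shorter interval.
    """
    motion = min(max(motion, 0.0), 1.0)
    # Linear interpolation: still image -> slowest, heavy motion -> fastest.
    return fastest + (1.0 - motion) * (slowest - fastest)


for score in (0.0, 0.5, 1.0):
    print(f"motion={score:.1f} -> sample every {next_sample_interval(score):.2f}s")
```

A linear mapping is the simplest choice here; a real system could just as easily use steps or a curve.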

Based on where the faces are in each image and the motion in the image, the Infinity software chooses a layout for each image. If the system detects multiple faces in an image, it will zoom out so that all faces are visible. If a lot of motion is detected, it zooms out until the motion stabilizes. When all of the individual images from each participating endpoint are composed into a single meeting image, those images with more people are configured at the top of the combined layout; individuals are typically located in the smaller gallery views at the bottom of the image.
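The “zoom out so that all faces are visible” step can be pictured as computing one crop rectangle around every detected face. The sketch below assumes a face detector has already produced bounding boxes in normalised coordinates; the `Box` type, the `framing_box` function, and the 10% margin are hypothetical and purely illustrative.

```python
from typing import List, Tuple

# (left, top, right, bottom) in normalised image coordinates, 0.0 to 1.0.
Box = Tuple[float, float, float, float]


def framing_box(faces: List[Box], margin: float = 0.1) -> Box:
    """Return the smallest crop that contains every face plus a margin.

    With no faces detected, fall back to the full, uncropped image.
    """
    if not faces:
        return (0.0, 0.0, 1.0, 1.0)
    left = min(f[0] for f in faces) - margin
    top = min(f[1] for f in faces) - margin
    right = max(f[2] for f in faces) + margin
    bottom = max(f[3] for f in faces) + margin
    # Clamp so the crop never extends past the edge of the source image.
    return (max(left, 0.0), max(top, 0.0), min(right, 1.0), min(bottom, 1.0))


one_person = framing_box([(0.40, 0.30, 0.60, 0.60)])
three_people = framing_box([(0.10, 0.30, 0.20, 0.45),
                            (0.45, 0.25, 0.55, 0.40),
                            (0.80, 0.30, 0.90, 0.45)])
print(one_person)
print(three_people)
```

The crop for three people is much wider than the crop for one, which is the zoom-out behavior described above. This sketch ignores aspect ratio, which a production system would need to preserve.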

As the Adaptive Composition technology constructs the combined image, the Infinity software also looks at who is speaking. Images “bubble up” when a person is speaking. Individuals may not make it to the top, but there can be some movement of an individual image in the final layout based on who the active speaker is.
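One way to picture how group size and active speaker combine is a two-part sort: groups go in an upper tier, individuals in a lower one, and within each tier the most active speaker rises first. This is a sketch under assumptions, not Pexip’s algorithm; the `Stream` class, the `speech_level` score, and the two-tier split are invented for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Stream:
    name: str
    face_count: int      # faces detected in this endpoint's image
    speech_level: float  # hypothetical recent audio activity, 0.0 to 1.0


def layout_order(streams: List[Stream]) -> List[str]:
    """Order streams for the combined image, top of the layout first."""
    def key(s: Stream):
        tier = 0 if s.face_count > 1 else 1  # groups above individuals
        # Within a tier: more active speakers first, then larger groups.
        return (tier, -s.speech_level, -s.face_count)
    return [s.name for s in sorted(streams, key=key)]


meeting = [
    Stream("laptop-a", face_count=1, speech_level=0.9),
    Stream("boardroom", face_count=6, speech_level=0.1),
    Stream("huddle", face_count=3, speech_level=0.4),
    Stream("laptop-b", face_count=1, speech_level=0.0),
]
print(layout_order(meeting))
```

Here `laptop-a` is the loudest participant but still sits below both rooms, mirroring the point that individuals may not make it to the top even when they are speaking.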

There is some art and some heuristics involved when creating these layouts. For example, you wouldn’t want images in the layout to adjust and move around continually when the active speaker changes. That would be distracting. Likewise, you wouldn’t want an image in the layout continuously zooming in and out, based on someone’s movement, as that would annoy all the other participants. This is why Pexip looks at the motion in each image and zooms out, crops, or pans appropriately so that the images are not constantly shifting if there is some head wagging, lots of standing up and sitting down, or a significant amount of hand motion. As the motion in images decreases, the software zooms in appropriately. If motion in an image increases, the software slowly zooms out, but not so fast that it is distracting to the other attendees.
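The kind of damping described here is often built from two simple ingredients: a dead band that ignores small changes, and a cap on how far the zoom may move per frame. The sketch below illustrates that general technique; Pexip has not published its heuristics, and `step_zoom`, `dead_band`, and `max_step` are hypothetical names and values.

```python
def step_zoom(current: float, target: float,
              dead_band: float = 0.05, max_step: float = 0.02) -> float:
    """Move the zoom level one frame closer to the target, gently."""
    delta = target - current
    if abs(delta) <= dead_band:
        return current  # ignore small changes such as a nodding head
    # Limit how far the zoom may move in a single frame.
    return current + max(-max_step, min(max_step, delta))


zoom = 2.0
for _ in range(10):
    zoom = step_zoom(zoom, target=1.0)  # sudden motion asks for a wide shot
print(round(zoom, 2))
```

Even though the target jumps from 2.0 to 1.0 at once, the displayed zoom drifts toward it in small steps, so the other attendees see a slow pull-back rather than a jump.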

One complaint about other systems that have tried to do frame composition on the server side is the time they take to react and properly display the frame. I recently observed another solution that does this, and it took about 10 to 20 seconds for the image to frame properly. Pexip overcomes this time lag by continuously examining all the images with efficient neural network/machine learning algorithms. All this processing does put some additional load on the server, but the solution still performs very well.

Conclusion

Adaptive Composition automatically frames and sizes the images of individuals and groups attending a video meeting, then intelligently arranges the layout to create a more natural, engaging meeting experience. It is built into the Pexip Infinity software and works with on-premises deployments, private cloud installations, and video-as-a-service cloud solutions.

Pexip claims that Adaptive Composition benefits users because they no longer have to manipulate the camera or choose video layouts, saving them time and angst as they try to have good video meetings. It uses AI, transcoding, and active speaker detection to create a layout that will be pleasing to most users without any user intervention. Adaptive Composition works with video endpoints connected to the meeting regardless of the camera used or the age of the video endpoint.