With more people working from home because of COVID-19, collaboration traffic has surged, often bringing session quality issues with it. It goes without saying that poor sessions are annoying, take too long, and are just frustrating. To learn how an enterprise can measure and evaluate the quality of its collaboration sessions, I contacted Jonathan Sass, head of product management at Vyopta, for some answers. Vyopta generates operational and business insights to improve the user experience, increase adoption, and improve the ROI of an enterprise’s technology investments.

How has collaboration video traffic changed since this time last year?

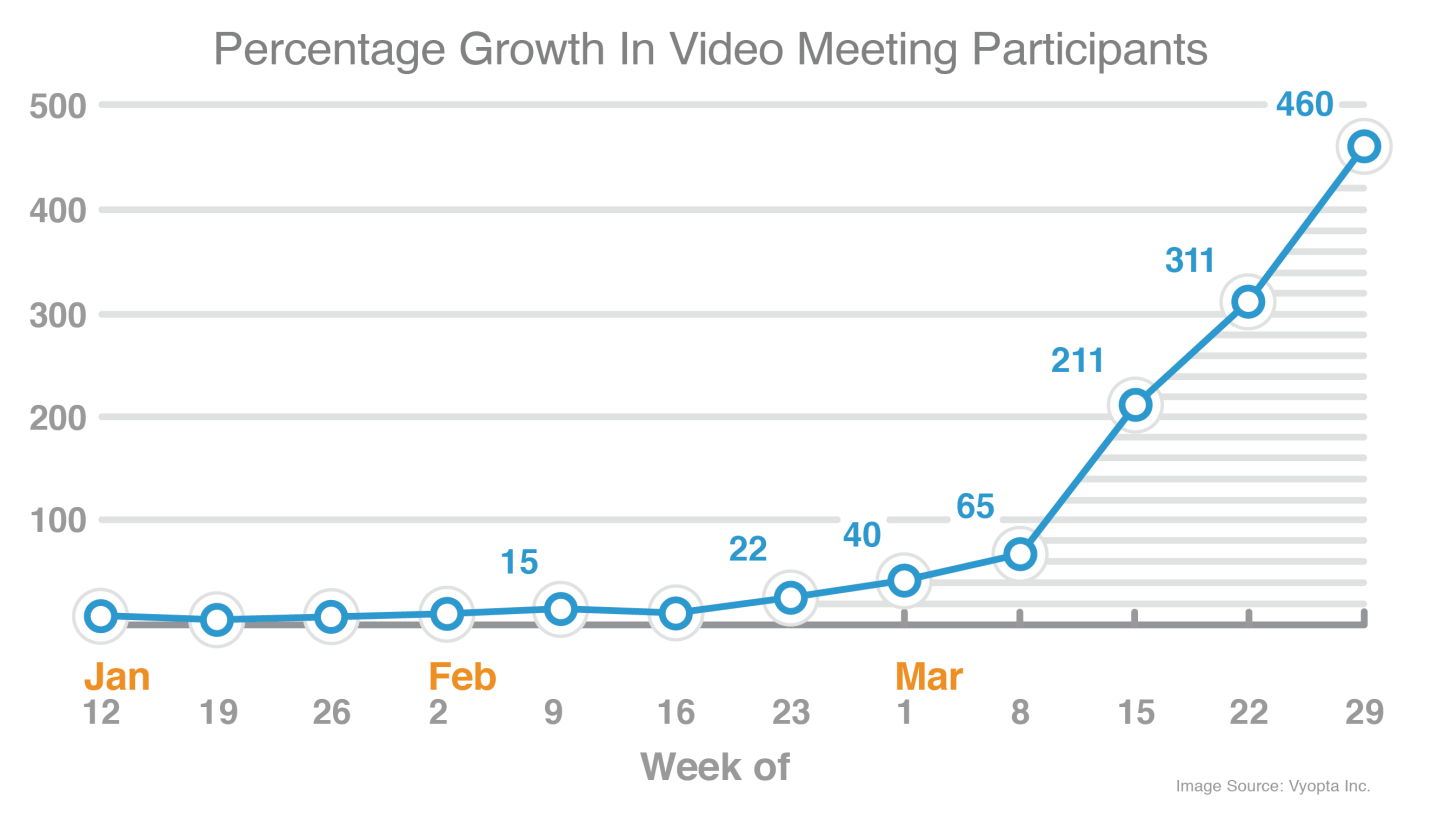

Previously, meeting usage at organizations was tripling every 24 months. Since the beginning of the pandemic, it has skyrocketed. Among our customer base, the total number of meeting participants has gone up by roughly 500% in a matter of weeks.

Are there any specific industries that are seeing a high level of collaboration traffic?

We have seen growth across all industries largely due to the fact that most employees have been forced to work remotely. However, two industries have seen fundamental changes in video meeting use cases, outside of remote work. Healthcare has been forced to accelerate telehealth programs to serve patients, introducing a large volume of video meetings that didn’t exist a year ago. Similarly, universities and K-12 organizations had to offer distance learning through video conferencing.

Has the growth in traffic impaired the user experience?

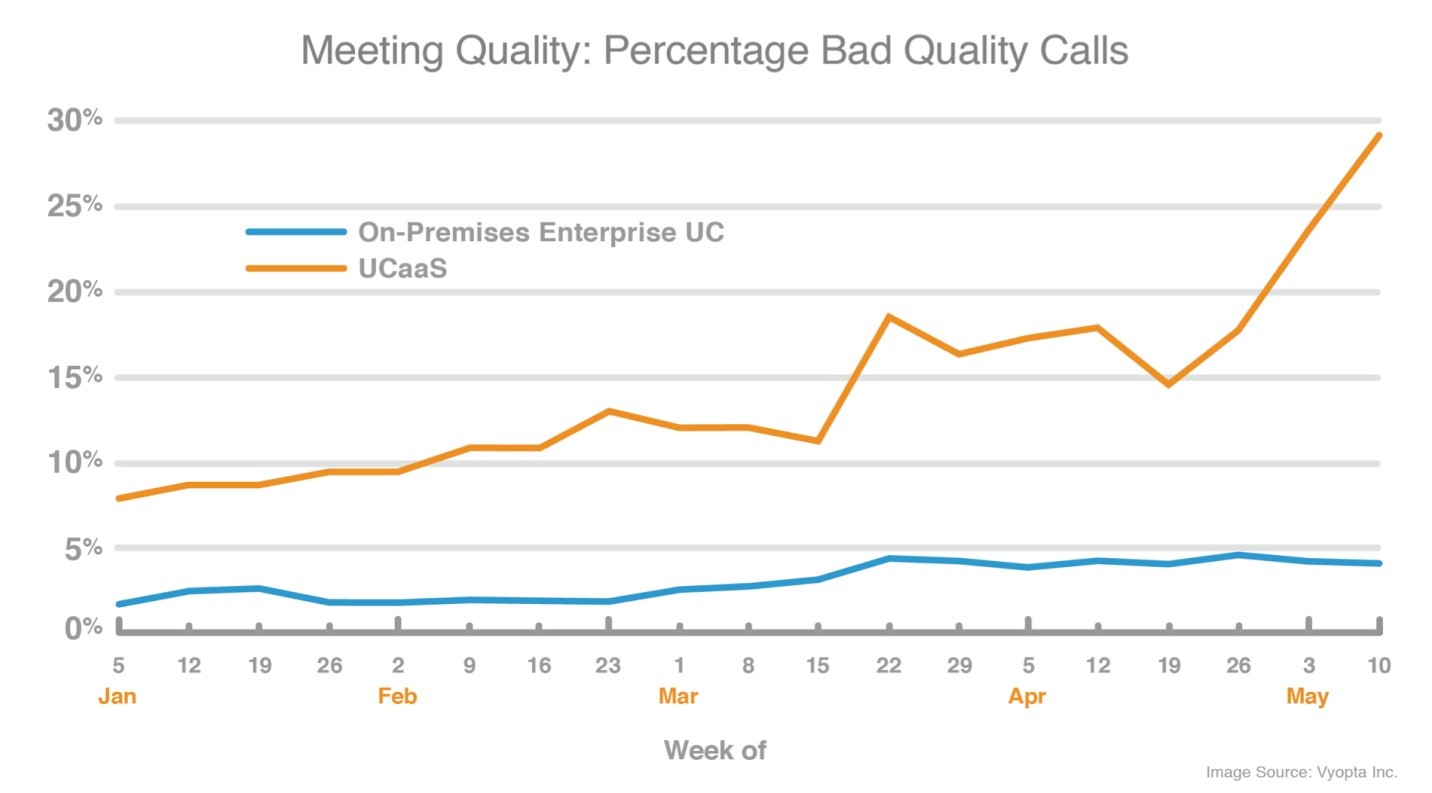

We have seen the quality of video meetings deteriorate during the pandemic period for users on both cloud and premises-based platforms. In addition, we are seeing evidence that call failures and abandonment (users having to dial back in) have increased over this timeframe as well. UCaaS experienced a larger proportion of bad-quality call experiences for conferencing/meetings than on-premises technologies did. The increase started in mid-March and has continued through mid-May. This UCaaS data is aggregated from multiple platforms, including Zoom, WebEx, and BlueJeans.

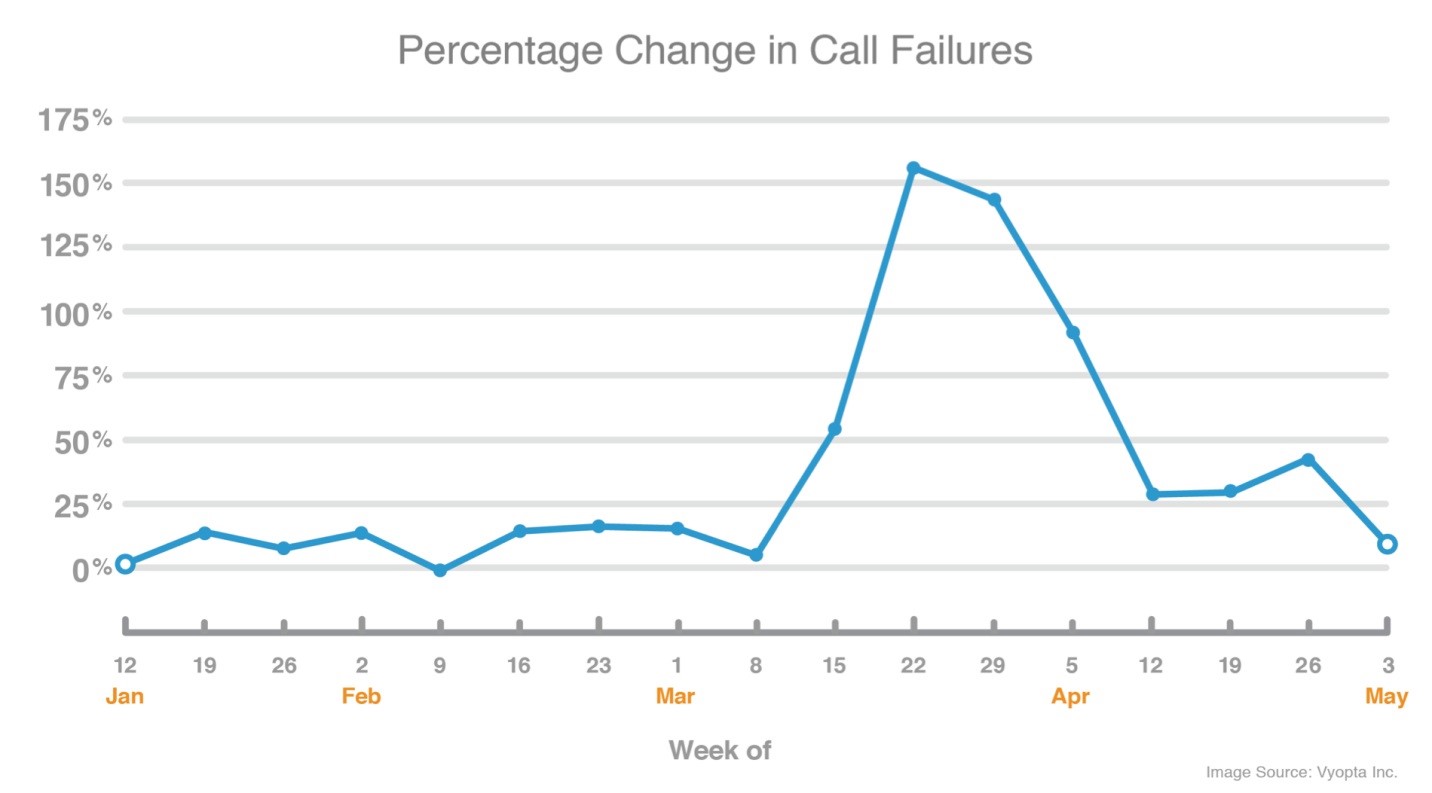

The call failure chart illustrates the growing proportion of call disconnects/failures compared to the increase in meeting participants since January. The higher rates of call abandonment indicate that a call was dropped or a user was disconnected and therefore had to call back into the conference.

The large increase in abandonment (failure) rates in March likely indicates that enterprises, UCaaS vendors, and telecom providers/services had trouble keeping up with the capacity needed to sustain the large surge in users. Organizations, vendors, and providers are taking steps to address these issues, but we are not in the all-clear yet.

What about the size of the meetings? Since more people are in remote collaboration, are there more participants in meetings, and does that change collaboration performance?

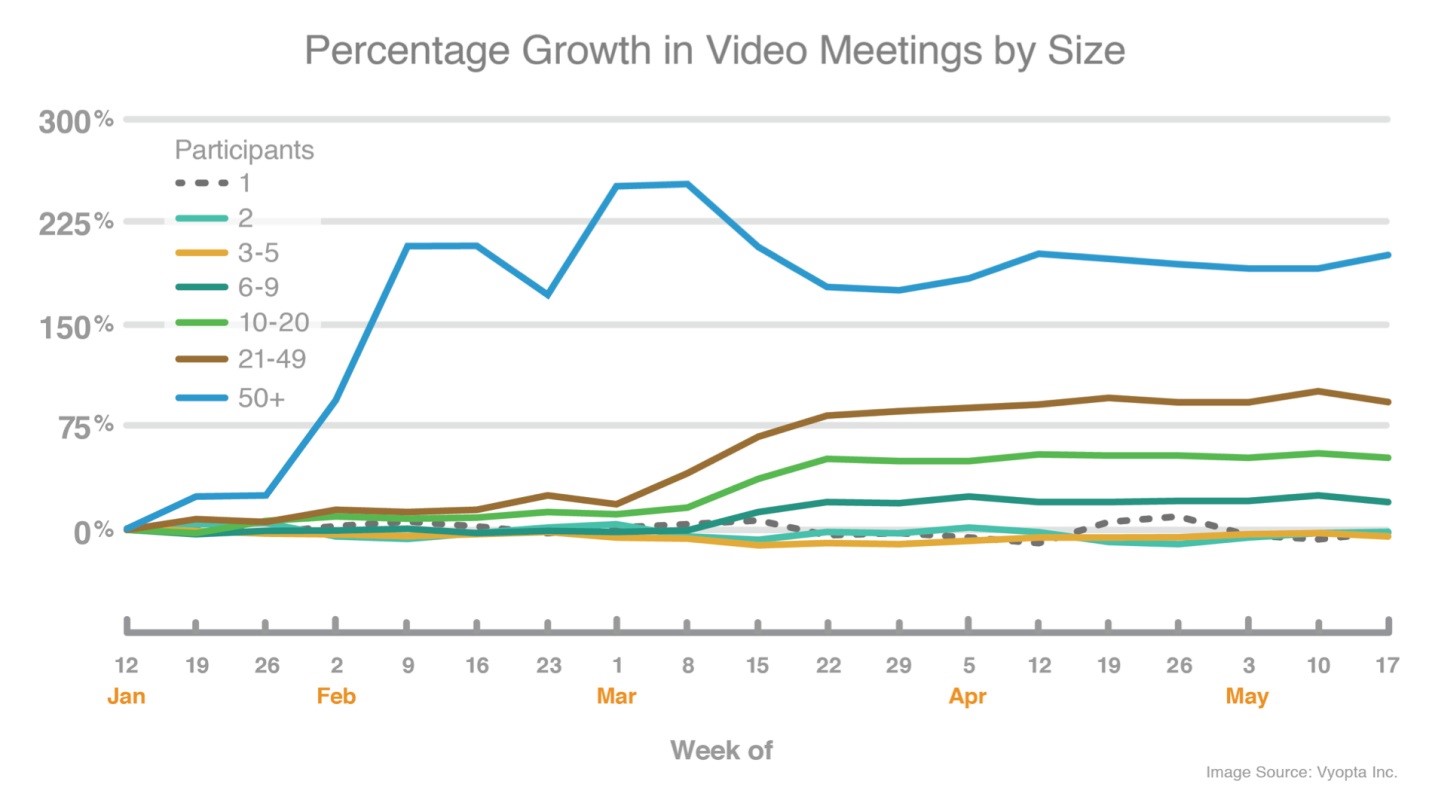

There has been a definite increase in large meetings. While the overall breakdown of meetings by size remains proportionally similar to before COVID-19, the number of larger meetings has grown significantly. Meetings with six or more people have risen in number, meetings above 10 even more, and meetings above 21 more still. The largest jump is for meetings above 50 participants, which indicates that large-scale events and departmental and company meetings are being held online.

Has the traffic growth been mostly over the Internet? Has this changed the performance?

There has certainly been growth of on-premises deployments where end users securely connect back into corporate infrastructure, but by far, the majority of growth has shifted to the Internet. It has most definitely impacted performance. Most companies have a hard time isolating whether issues and quality degradations are due to the enterprise network, cloud services, the telecom service provider, or the users’ home network and setup.

Is more bandwidth the only answer to performance issues?

Bandwidth is certainly a factor. Internet service is quite expensive in the U.S. compared to other parts of the world, and since there are only a few providers, there is less competition. You do not get much for the price you pay. Download speeds are usually decent, but upload speeds, which are very important for UC traffic, aren’t.

In addition to the bandwidth concerns, most family homes have several people accessing the Internet at the same time, for multiple jobs, distance learning lessons, streaming services, etc. Several people in a house can quickly use up the download and the upload speed.

Many workers don’t have their own home office setup for remote work and frequent virtual meetings. Many users might have access to better video and audio hardware at the office but are now left with whatever devices they have at home, which are likely not as good. Some headsets are better than others, and devices and peripherals have a tremendous impact on user experience and meeting productivity. Many people’s home Internet routers are years old and rarely upgraded. A router that is many years old often cannot handle increased traffic. I recently discovered that my old router was cutting my Internet speeds in half.

Can computers influence the session quality?

Computers can also impact performance. With much of the traffic moving to cloud providers, calls and meetings happen via software. Old PCs can have problems running video and audio calls due to CPU and memory constraints, which can impact the quality of the call. Cloud provider capacity is also impacting performance. The increased load on cloud UC providers has caused performance issues that affect everyone.

How can a performance tool be used?

A performance management solution can be used by IT teams in multiple ways and generally helps them scale to support a large collaboration environment and a large set of users.

First, it can be used to proactively detect quality issues, performance degradation, and call failure issues. Having a unified solution that acts as a single pane of glass across all the technologies in an organization’s collaboration environment helps to more accurately detect and diagnose issues. Also, IT teams can leverage such a tool to troubleshoot issues with live and past calls and bring real data to collaborators to help accelerate resolution.

Second, a good performance management tool can also provide historical analysis that yields business insights and helps improve operational planning. For example, such analytics can help to identify whether an issue is not isolated but rather systemic and recurring, and to identify causes for corrective action. Also, capacity metrics and information about total and peak concurrent usage needs can help IT teams intelligently throttle licensing, bridging, and trunk capacity. Information about how users are engaging with meetings and how they are joining audio across different departments and locations helps IT set the right standards and plan user education, so that participants are prepared to have the best possible experience.
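To make the idea of peak concurrent usage concrete, here is a minimal Python sketch of how it could be derived from participant join and leave times. The function name `peak_concurrency` and the sample sessions are hypothetical and purely illustrative; this is not a description of how Vyopta computes capacity metrics.

```python
from datetime import datetime

def peak_concurrency(sessions):
    """Return (peak_count, time_of_peak) for a list of (join, leave) pairs."""
    events = []
    for join, leave in sessions:
        events.append((join, 1))    # participant joins
        events.append((leave, -1))  # participant leaves
    # Sort by time; at equal timestamps, leaves (-1) are processed before joins (+1)
    events.sort(key=lambda e: (e[0], e[1]))
    current = peak = 0
    peak_time = None
    for ts, delta in events:
        current += delta
        if current > peak:
            peak, peak_time = current, ts
    return peak, peak_time

# Hypothetical sample data: four participant sessions on one morning
sessions = [
    (datetime(2020, 5, 1, 9, 0), datetime(2020, 5, 1, 9, 30)),
    (datetime(2020, 5, 1, 9, 15), datetime(2020, 5, 1, 10, 0)),
    (datetime(2020, 5, 1, 9, 20), datetime(2020, 5, 1, 9, 45)),
    (datetime(2020, 5, 1, 9, 30), datetime(2020, 5, 1, 10, 30)),
]
peak, when = peak_concurrency(sessions)
print(peak, when)
```

Comparing a peak like this against licensed or provisioned capacity is one simple way to see how much headroom remains.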

With regard to remote work scenarios for companies relying on premises-hosted UC, IT teams often have no visibility into quality metrics for remote users who join from their homes using software clients, phones, and endpoints that are registered to premises-based systems, making it difficult to diagnose whether issues lie with the telecom provider, the home network, or enterprise infrastructure. Increased traffic and concurrent usage also burden the edge of the enterprise-hosted UC network, which, if not managed adequately, can lead to capacity failures and users being unable to place calls. Vyopta provides complete visibility into the status, availability, and quality of traffic passing through edge nodes such as expressways and session border controllers (SBCs), ultimately helping IT teams to improve UC experience and adoption amongst remote workers.

How does Vyopta measure the performance of collaboration sessions? Are there metrics you measure and use to calculate performance, and if so, how are the metrics analyzed?

Vyopta collects data directly from multiple elements along end-to-end call and meeting paths, whether they are deployed on-premises or in the cloud. These elements span enterprise infrastructure, edge nodes, cloud-based services, software clients and devices, and even trunks to external telecom providers. By amalgamating this data along a call’s path into a single unified platform (for example from the premises-registered endpoint, the edge expressway, and the call control and bridging platforms), Vyopta gathers different perspectives on the quality for a given meeting and thus provides a richer environment for troubleshooting as well as identifying recurring, systemic issues.

For a given call leg, Vyopta tracks raw quality metrics including latency, bitrate, frame rate, jitter, and packet loss, each for the transmit and receive connections in audio, video, and presentation share modes. Vyopta also looks at the availability and status of devices. This data is normalized across the Vyopta interface, and a proprietary quality score is generated.

IT teams can then choose to be alerted on availability as well as performance degradations based on the quality score itself, the individual metrics, or a set of custom thresholds and parameters.
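As a rough illustration of alerting on custom thresholds, the Python sketch below flags call legs whose raw metrics exceed configured ceilings. The `LegMetrics` class, the `breaches` function, the threshold values, and the sample legs are all assumptions made for this example; they do not represent Vyopta's data model or its proprietary quality score.

```python
from dataclasses import dataclass

@dataclass
class LegMetrics:
    """Hypothetical per-call-leg measurements (one direction, one media type)."""
    leg_id: str
    latency_ms: float
    jitter_ms: float
    packet_loss_pct: float

# Illustrative ceilings only; these values are assumptions, not vendor defaults.
THRESHOLDS = {"latency_ms": 150.0, "jitter_ms": 30.0, "packet_loss_pct": 1.0}

def breaches(leg, thresholds=THRESHOLDS):
    """Return the names of the metrics on this leg that exceed their ceiling."""
    return [name for name, limit in thresholds.items() if getattr(leg, name) > limit]

# Hypothetical sample legs
legs = [
    LegMetrics("home-user-1", latency_ms=80.0, jitter_ms=12.0, packet_loss_pct=0.2),
    LegMetrics("home-user-2", latency_ms=210.0, jitter_ms=45.0, packet_loss_pct=3.5),
]

# Keep only the legs that breach at least one threshold
alerts = {leg.leg_id: breaches(leg) for leg in legs if breaches(leg)}
print(alerts)
```

In practice, a team would likely tune such ceilings per media type and per direction, since acceptable values for audio and video differ.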