Customer experience (CX) professionals should be wary when they hear promises like “your call center recordings can be transcribed in real time and processed through text analytics to derive insights.” CX platform vendors that rely exclusively on text analytics to process customer feedback tend to emphasize this refrain. Sounds reasonable, right?

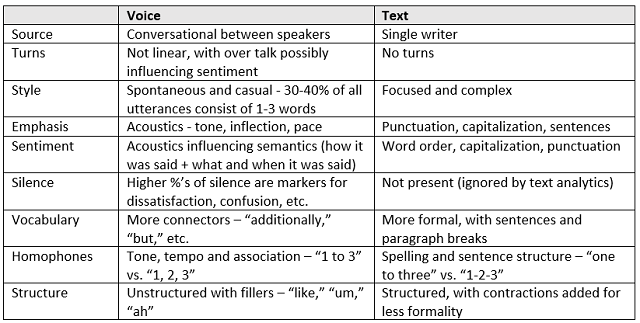

The problem is that voice communication differs dramatically from written communication. If you take the route of transcribing voice and feeding the transcripts to text analytics, your results will fall short. The primary reason is that meaning in speech depends not only on what is said, but on how and when it is said. Even what goes unsaid in a dialogue influences intent and sentiment!

The sections that follow explain why voice is fundamentally different from text. With that understanding, CX pros can make effective use of the voices of their customers and agents to drive CX enhancements.

We Don’t Talk and Write the Same Way

In 2015, cognitive scientists at Johns Hopkins University discovered that writing and speaking are supported by different parts of the brain. This affects not only the motor control used to write or to form spoken words, but also how words and phrases are constructed. Some examples of the differences include:

Text analytics engines, such as those frequently bundled with CX platforms, have been trained on written text. As a result, they were built exclusively around the structure of that data: punctuation, a single voice, formal third-person prose, and spell-checked text.

Speech analytics, on the other hand, has been trained on spoken dialogue, the product of the separate brain systems noted above. It is tuned for the unstructured nature of voice communication. Acoustics add a further dimension: cues such as pitch, loudness, and pacing reveal which words a speaker emphasizes, which improves contextual accuracy.
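To make the acoustic point concrete, here is a deliberately crude sketch that uses loudness alone as a proxy for emphasis. It assumes mono audio samples and word start/end times are available; the function names, the `(text, start, end)` word format, and the 1.5× threshold are hypothetical choices for illustration. Stress in real speech is also carried by pitch and duration, so a production system would not rely on energy alone.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a list of audio samples (0.0 if empty)."""
    return math.sqrt(sum(s * s for s in samples) / len(samples)) if samples else 0.0


def emphasized_words(samples, rate, words, ratio=1.5):
    """Flag words whose RMS energy exceeds `ratio` times the middle word energy.

    samples: mono amplitudes; rate: samples per second;
    words: hypothetical list of (text, start_s, end_s) from a timestamped transcript.
    """
    energies = []
    for text, start, end in words:
        segment = samples[int(start * rate):int(end * rate)]
        energies.append((text, rms(segment)))
    if not energies:
        return []
    levels = sorted(e for _, e in energies)
    middle = levels[len(levels) // 2]  # median (upper median for even counts)
    return [text for text, e in energies if middle > 0 and e > ratio * middle]


# Synthetic one-second-per-word signal: the middle word is spoken louder.
rate = 100
samples = [0.1] * rate + [0.5] * rate + [0.1] * rate
words = [("I", 0.0, 1.0), ("never", 1.0, 2.0), ("said", 2.0, 3.0)]
print(emphasized_words(samples, rate, words))  # ['never']
```

The transcript "I never said" is identical whether the stress falls on "I", "never", or "said", yet each reading means something different; only the audio distinguishes them.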

Speech analytics solutions are designed to handle the nuances of voice-based conversations. This has an enormous impact on the ability to surface the insights that matter from this unstructured data. For example, predefined or user-configured searches and classifications can more accurately identify topics, behaviors, procedures, and other events in spoken conversations. Speaking styles, connector words, fillers and silence, and disjointed flow are “meaning influencers” that text analytics systems relying on structure would likely miss.
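A minimal sketch of what a text-only pipeline throws away, assuming a word-level transcript with speaker labels and timestamps. The `Word` record, the filler list, and the 1.5-second pause threshold are hypothetical, not any vendor's format; `as_plain_text` simply mimics the kind of cleaned-up input text analytics expects.

```python
from dataclasses import dataclass

# Hypothetical word-level transcript record. Field names are illustrative
# assumptions, not the output format of any particular speech engine.
@dataclass
class Word:
    speaker: str  # e.g. "agent" or "customer"
    text: str
    start: float  # seconds from the start of the call
    end: float

# Illustrative filler list; a production system would use a richer inventory.
FILLERS = {"um", "uh", "er", "hmm"}


def meaning_influencers(words, min_pause=1.5):
    """Return long silences and fillers, ordered by time.

    Silences are gaps of at least `min_pause` seconds between consecutive words.
    """
    cues = []
    for prev, cur in zip(words, words[1:]):
        gap = cur.start - prev.end
        if gap >= min_pause:
            cues.append(("silence", prev.end, round(gap, 2)))
    for w in words:
        if w.text.lower() in FILLERS:
            cues.append(("filler", w.start, w.text))
    return sorted(cues, key=lambda cue: cue[1])


def as_plain_text(words):
    """Mimic a typical text-analytics input: fillers dropped, timing discarded."""
    return " ".join(w.text for w in words if w.text.lower() not in FILLERS)


call = [
    Word("agent", "Does", 0.0, 0.3),
    Word("agent", "that", 0.3, 0.5),
    Word("agent", "work", 0.5, 0.8),
    Word("customer", "um", 3.0, 3.4),
    Word("customer", "yes", 4.0, 4.3),
]
print(meaning_influencers(call))  # [('silence', 0.8, 2.2), ('filler', 3.0, 'um')]
print(as_plain_text(call))        # Does that work yes
```

The plain-text version reads as an unqualified "yes"; the 2.2-second pause and the filler before it, which a listener would hear as hesitation, are gone.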

Search parameters can also incorporate time, a dimension that plain text does not carry: for example, whether the agent gave a required disclosure within the first 30 seconds of a call. And classifications extend well beyond keyword spotting, accounting for the many ways that concepts such as escalation or dissatisfaction are expressed in speech.
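A simplified, rule-based sketch of both ideas, assuming a diarized transcript with utterance start times. The `Utterance` record, the function names, and the escalation patterns are all hypothetical; commercial classifiers are considerably more sophisticated, but even this version shows a search constrained by speaker and time window, and a category defined by several phrasings rather than a single keyword.

```python
import re
from dataclasses import dataclass

# Hypothetical utterance record from a diarized, timestamped transcript.
@dataclass
class Utterance:
    speaker: str  # e.g. "agent" or "customer"
    text: str
    start: float  # seconds from the start of the call

# Hypothetical escalation category: several phrasings of the same concept.
ESCALATION_PATTERNS = [
    r"\b(speak|talk) (to|with) (a|your) (manager|supervisor)\b",
    r"\bcancel my (account|service)\b",
    r"\bthis is (ridiculous|unacceptable)\b",
]


def said_within(utts, speaker, pattern, start=0.0, end=float("inf")):
    """True if `speaker` said something matching `pattern` in [start, end) seconds."""
    rx = re.compile(pattern, re.IGNORECASE)
    return any(
        u.speaker == speaker and start <= u.start < end and rx.search(u.text)
        for u in utts
    )


def classify_escalation(utts, after=0.0):
    """Customer utterances at or after `after` seconds matching any escalation phrasing."""
    return [
        u for u in utts
        if u.speaker == "customer" and u.start >= after
        and any(re.search(p, u.text, re.IGNORECASE) for p in ESCALATION_PATTERNS)
    ]


call = [
    Utterance("agent", "Thanks for calling, this call may be recorded.", 2.0),
    Utterance("customer", "I've been on hold forever.", 8.0),
    Utterance("customer", "Let me talk to your supervisor.", 95.0),
]
print(said_within(call, "agent", r"may be recorded", 0, 30))  # True
print([u.start for u in classify_escalation(call)])          # [95.0]
```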

The bottom line: a workflow that transcribes voice and then runs the transcripts through text analytics will not accurately capture the voice of your customers and the voice of your agents. Results will be far less contextually relevant, making it difficult to gauge loyalty or interpret sentiment. A more insidious concern is sending your root cause analysis in the wrong direction, either by completely missing a key element of unsolicited feedback or by being misdirected by a false positive.