High network latency is the number one performance problem when moving from a premises-based to a cloud-based solution. Enterprises should target a one-way network latency of less than 25ms for each leg of the call -- first, from the caller to the UC/CC platform, and second, from the platform to the second caller or agent. Distributing the hosting and eliminating network backhauling will keep the UC/CC cloud performing as desired. Keeping users within 25ms of their UC/CC cloud service is not always possible, but it should be the design objective.

Why less than 25ms? Here's the logic:

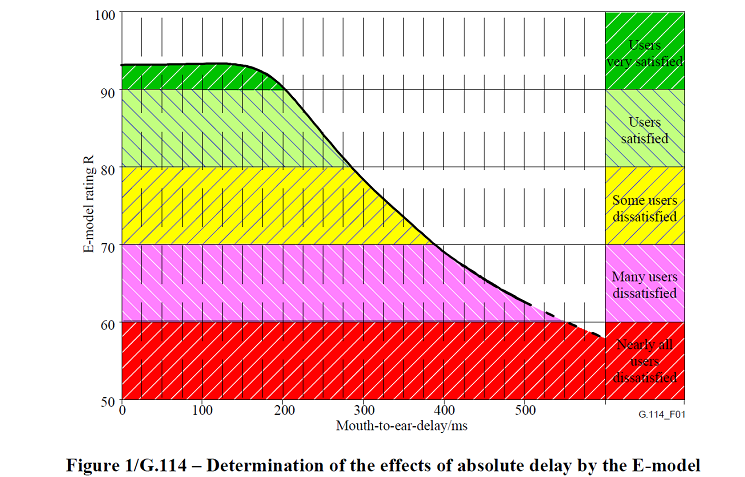

1) For a robust communications experience, ITU-T Recommendation G.114 calls for a one-way delay of 150ms or less, measured from the talker’s mouth to the listener’s ear. That budget covers the entire path, not just the network delay.

2) VoIP itself adds 60 to 80ms of delay (serialization and buffering).

3) Cellular phones add further latency, typically 40 to 80ms.

4) Security stacks add 20 to 60ms of delay. Worse, that delay can be variable -- jitter, which we at No Jitter know is evil.

5) Network delay depends on where the callers are relative to the UC/CC platform their calls pass through; it is typically 10 to 25ms within the lower 48 states.
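The budget arithmetic can be sketched as a simple sum against the 150ms G.114 target. This is a minimal illustration, not a planning tool: the component values are taken from the low end of the ranges listed above, the two network legs are set at the 25ms design target, and the names `G114_BUDGET_MS` and `headroom_ms` are hypothetical.

```python
# One-way mouth-to-ear target from ITU-T G.114, in milliseconds.
G114_BUDGET_MS = 150


def headroom_ms(components: dict[str, float], budget: float = G114_BUDGET_MS) -> float:
    """Return the milliseconds left in the budget (negative means over budget)."""
    return budget - sum(components.values())


# Desk or softphone call: low-end VoIP and security-stack figures,
# plus two network legs at the 25ms design target.
desk_call = {
    "voip_processing": 60,
    "security_stack": 20,
    "network_leg_1": 25,
    "network_leg_2": 25,
}

# The same call with a cellular phone on one end (low-end 40ms).
cell_call = {**desk_call, "cellular": 40}

print(headroom_ms(desk_call))  # 20
print(headroom_ms(cell_call))  # -20
```

Even with every component at the low end of its range, adding a cellular leg pushes this example past 150ms. That illustrates why the network's share of the budget has to be kept small.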

A network delay of 25ms equates to roughly 2,500 miles of fiber. (Fun fact of the day: Light travels approximately 31% slower in fiber than in a vacuum.) So a cloud UC/CC platform hosted in Omaha, Neb., can serve callers and agents across the lower 48 states well. But if the platform is in Miami, Fla., and the callers and agents are in Seattle, Wash., we exceed our latency budget.
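The Omaha-versus-Miami comparison can be checked with a great-circle (haversine) distance and the rule of thumb above: 2,500 miles per 25ms, or about 100 miles per millisecond. This is a sketch under stated assumptions. The city coordinates are approximate, and real fiber routes are longer than great-circle paths, so the result is a best-case estimate. The function names are hypothetical.

```python
import math

EARTH_RADIUS_MILES = 3958.8
# Rule of thumb from the text: 25ms one-way ~ 2,500 fiber miles (~100 miles per ms).
MILES_PER_MS = 2500 / 25
LEG_BUDGET_MS = 25


def great_circle_miles(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Haversine distance in miles between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(h))


def leg_latency_ms(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Best-case one-way leg estimate; actual fiber routes are longer."""
    return great_circle_miles(a, b) / MILES_PER_MS


# Approximate city-center coordinates (lat, lon).
OMAHA = (41.2565, -95.9345)
MIAMI = (25.7617, -80.1918)
SEATTLE = (47.6062, -122.3321)

for platform_name, platform in [("Omaha", OMAHA), ("Miami", MIAMI)]:
    ms = leg_latency_ms(SEATTLE, platform)
    verdict = "within" if ms <= LEG_BUDGET_MS else "over"
    print(f"Seattle -> {platform_name}: ~{ms:.1f} ms ({verdict} the {LEG_BUDGET_MS}ms budget)")
```

With these coordinates, Seattle to Omaha comes out at roughly 14ms and Seattle to Miami at roughly 27ms. That matches the point above: Miami is already over budget before any routing overhead is added.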

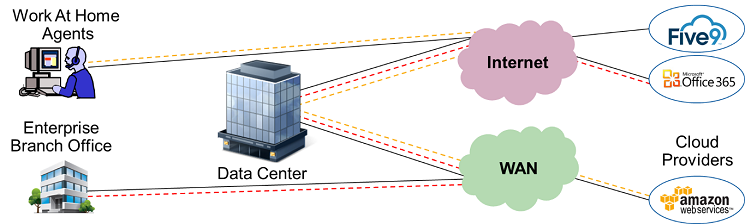

This gets especially problematic when callers, UC/CC platforms, and agents are on different continents, or when a lot of backhauling is going on within a network. Backhauling occurs when traffic follows a predefined logical path rather than the shortest physical path. It primarily occurs in enterprise networks for private-to-public internetworking, where Internet-destined traffic must be backhauled to an enterprise data center, passed through a security stack, and only then sent out to the Internet.
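The cost of backhauling can be illustrated by summing per-hop delays along a direct path and a backhauled path to the same cloud platform. The hop delays below are hypothetical round numbers chosen only for illustration. They cover network transit only, so the 20 to 60ms a data center security stack adds (item 4 above) would come on top of the backhauled total.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    name: str
    delay_ms: float  # one-way delay contributed by this hop (hypothetical values)


def path_delay_ms(hops: list[Hop]) -> float:
    """Total one-way delay along a path of hops."""
    return sum(h.delay_ms for h in hops)


LEG_BUDGET_MS = 25

# Hypothetical one-way transit delays for a branch user reaching a cloud UC/CC platform.
direct = [Hop("branch -> UC/CC cloud", 15)]
backhauled = [
    Hop("branch -> enterprise data center", 12),
    Hop("data center -> Internet -> UC/CC cloud", 18),
]

for label, path in [("direct", direct), ("backhauled", backhauled)]:
    total = path_delay_ms(path)
    print(f"{label}: {total} ms, within {LEG_BUDGET_MS}ms budget: {total <= LEG_BUDGET_MS}")
```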

My recommended best practices are as follows.

Best Practice #1: Regionalize the UC/CC hosting platform. This may sound obvious, but many cloud UC/CC providers require all traffic to go through a single data center, with a second site available only for disaster recovery. Conferencing services are particularly bad about this. Enterprises should specify the 25ms requirement in their requests for proposals to cloud providers. The market challenge here is that best-of-breed UC/CC solutions vary by region: the latest Gartner Magic Quadrants for contact center providers in North America and EMEA share few vendors.
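One way to apply the 25ms requirement during an RFP review is to check every agent site against its nearest hosting region in a provider's proposed footprint. The sketch below makes several assumptions: the provider footprints are hypothetical, the coordinates are approximate, and latency is estimated from great-circle distance at about 100 miles per millisecond (the 2,500-miles-per-25ms rule of thumb above), which understates real fiber routes.

```python
import math

EARTH_RADIUS_MILES = 3958.8
MILES_PER_MS = 100  # ~2,500 fiber miles per 25ms, per the rule of thumb above
LEG_BUDGET_MS = 25


def great_circle_miles(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Haversine distance in miles between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(h))


def sites_over_budget(sites: dict[str, tuple[float, float]],
                      regions: dict[str, tuple[float, float]]) -> list[str]:
    """Names of sites whose nearest hosting region is estimated beyond the leg budget."""
    over = []
    for name, loc in sites.items():
        nearest_ms = min(great_circle_miles(loc, r) / MILES_PER_MS for r in regions.values())
        if nearest_ms > LEG_BUDGET_MS:
            over.append(name)
    return over


# Hypothetical agent sites and provider footprints (approximate lat, lon).
agent_sites = {
    "Seattle": (47.6062, -122.3321),
    "Miami": (25.7617, -80.1918),
    "London": (51.5074, -0.1278),
}
single_center = {"Omaha": (41.2565, -95.9345)}
regionalized = {"Omaha": (41.2565, -95.9345), "London": (51.5074, -0.1278)}

print(sites_over_budget(agent_sites, single_center))  # ['London']
print(sites_over_budget(agent_sites, regionalized))   # []
```

The single-center footprint serves the U.S. sites but leaves London far outside the budget, while adding a European region brings every site within it.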

Best Practice #2: Directly connect agents to the cloud UC/CC provider. This can happen in two ways:

1) For remote users and work-at-home (WAH) agents, put in a next-generation router (SD-WAN light) and allow a split tunnel through which their traffic can go directly to the UC/CC cloud hosting network. The network security rules that determine whether traffic goes directly to the Internet from home or back to the data center via VPN are:

- Whitelist -- A set of sites that the WAH agent is allowed to access directly

- TLS -- Ensure all whitelisted applications are TLS-encrypted, and validate their certificates during session setup

- Directionality -- Ensure that TCP/UDP sessions are originated only by the WAH agent, never from the Internet

- VPN -- All traffic that doesn’t meet the three rules above goes back to a data center (public or private), and if it is destined for the Internet, it goes through a full security stack.

2) For UC/CC users at an enterprise site, use MPLS carrier cloud interconnects to connect directly to the UC/CC provider. The number of private interconnects offered by UC/CC cloud providers is growing, and redundancy is already built into them. If you’re with a very large organization, you can use carrier-neutral co-location facilities to build your own interconnects.
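The four split-tunnel rules for WAH agents amount to a small routing decision per session: go direct only if the destination is whitelisted, TLS certificate validation succeeded, and the agent originated the session; send everything else over the VPN. The sketch below is a hypothetical model of that logic, not the configuration syntax of any real SD-WAN product. The hostnames use the reserved `.example` domain as placeholders, and the `Session` fields are assumptions.

```python
from dataclasses import dataclass

# Hypothetical whitelist of UC/CC cloud hostnames (placeholders, not real provider domains).
WHITELIST = {"voice.uccc-provider.example", "agent.uccc-provider.example"}


@dataclass
class Session:
    dest_host: str
    tls_cert_valid: bool       # outcome of certificate validation at session setup
    originated_by_agent: bool  # True if the WAH agent's device opened the session


def route(session: Session) -> str:
    """Return 'direct' for the split tunnel, or 'vpn' to send traffic to the data center."""
    if (
        session.dest_host in WHITELIST     # Whitelist rule
        and session.tls_cert_valid         # TLS rule
        and session.originated_by_agent    # Directionality rule
    ):
        return "direct"
    # VPN rule: anything else goes back to the data center and its security stack.
    return "vpn"


print(route(Session("voice.uccc-provider.example", True, True)))   # direct
print(route(Session("voice.uccc-provider.example", True, False)))  # vpn
print(route(Session("news.example", True, True)))                  # vpn
```

Making "vpn" the default keeps the policy fail-safe: a session that fails any one check gets the full security treatment rather than a direct path.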

Personally, I find delays in communication very frustrating. Since I’m usually in a hurry, I tend to step on a conversation and not let the other person finish their sentence. Put another person like me on the phone, and we will step on one another: we will start talking at the same time, stop at the same time, and finally one of us will have to let the other start and finish. While this may be OK in my personal life, it is not OK in business communication.