How Dialogflow Works

Dialogflow, known as API.ai prior to its acquisition by Google in September 2016, is Google’s cloud-based natural language understanding (NLU) solution. It uses machine learning techniques to enable computers to “understand” the structure and meaning of human language.

Dialogflow is available as a standalone solution at www.dialogflow.com or as part of CCAI under the name of Virtual Agent. Virtual Agent is essentially Dialogflow with some special APIs developed for contact center-based call flows and interactions.

CCAI and/or Dialogflow include the following capabilities:

Dialogflow as a standalone product is generally available and supported in two product editions: standard and enterprise. The enterprise edition has a higher SLA and comes with more robust tech support than the standard edition. As announced at Google Cloud Next last month, CCAI is in beta release. Although the general release date has not been announced, industry watchers expect CCAI to be generally available in the second half of 2019. As the table above shows, several parts of CCAI, including Agent Assist and Conversational Topic Modeler, are currently in alpha release. Beta customers of CCAI partners currently have access to the special contact center-specific APIs, which remain subject to modification and tweaking until CCAI becomes generally available.

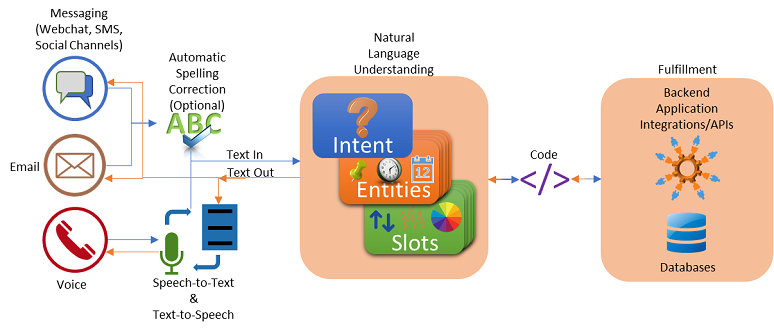

The figure above diagrams how Dialogflow works.

1. Inputs to Dialogflow can be text-based or human voice in 20 different languages, including some with local vernaculars such as English (U.S. and Great Britain) or Chinese (simplified and traditional). Text input comes from messaging channels, including SMS, Webchat, and email. Google has built Dialogflow integrations with Slack, Facebook Messenger, Google Assistant, Twitter, Microsoft Skype and Skype for Business, Cisco Webex Teams, Twilio, Viber, Line, Telegram, and Kik. SMS, email, and voice assistants from Amazon and Microsoft require additional coding or message massaging for input into Dialogflow.

2. Text-based messages can pass through an optional spell checker. When people type or use SMS, misspelling is common. Correcting misspellings will improve the accuracy of the natural language understanding engine.

4. Once voice has been converted to text or the input text has been corrected, the resulting text stream is passed to the natural language understanding engine within Dialogflow. This is really the core element that enables intelligent bot creation.

Dialogflow first examines the text stream and tries to figure out the user’s intent. The intent is what the user wants -- why he or she is interfacing with the bot in the first place. Getting the intent right is critical. (We’ll discuss how to create Dialogflow intents in a subsequent article.)

Examples of intents can be things like “I want to know the weather forecast,” “what’s my bank balance,” and “I want to make a reservation.”

Intents will typically have entities, such as name, date, and location, associated with them. If you want to know the weather, you need to tell the bot the location. If you want to know your bank balance, then the bot will need to get the name on the account. Some entities, like date, time, location, and currency, are available out of the box as part of Google’s technology stack.

Dialogflow supports the notion of contexts to manage conversation state, flow, and branching. Contexts are used to keep track of a conversation’s state, and they influence what intents are matched while directing the conversation based on a user’s previous responses. Contexts help make an interaction feel natural and real.
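To make the role of contexts more concrete, here is a minimal Python sketch of how an active context can change which intent is matched. It is a toy model, not Dialogflow's implementation: the `Intent` class, the `match_intent` function, the keyword-overlap matching, and the intent and context names are all assumptions made for illustration. Real Dialogflow matching is driven by machine learning rather than keywords.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    """Hypothetical, simplified intent definition (not a Dialogflow type)."""
    name: str
    keywords: frozenset
    input_contexts: frozenset = frozenset()   # must all be active to match
    output_contexts: frozenset = frozenset()  # become active after a match


def match_intent(utterance, intents, active_contexts):
    """Return the best candidate intent, or None if nothing matches.

    An intent is a candidate only if all of its input contexts are active
    and it shares at least one keyword with the utterance.
    """
    words = set(utterance.lower().split())
    candidates = [
        intent for intent in intents
        if intent.input_contexts <= active_contexts and words & intent.keywords
    ]
    if not candidates:
        return None
    # Prefer the intent that depends on the most contexts (the most specific).
    return max(candidates, key=lambda intent: len(intent.input_contexts))


INTENTS = [
    Intent(
        name="book_table",
        keywords=frozenset({"reservation", "reserve", "book"}),
        output_contexts=frozenset({"awaiting_confirmation"}),
    ),
    Intent(
        name="confirm_booking",
        keywords=frozenset({"yes", "sure", "ok"}),
        input_contexts=frozenset({"awaiting_confirmation"}),
    ),
]

# With no active context, a bare "yes" has nothing to confirm.
no_context = match_intent("yes", INTENTS, frozenset())

# After the booking intent fires, its output context makes "yes" meaningful.
first = match_intent("I want to make a reservation", INTENTS, frozenset())
second = match_intent("yes", INTENTS, first.output_contexts)
```

In this sketch the same one-word reply is ignored at the start of a conversation but resolves to `confirm_booking` once the booking intent has set its output context, which is the branching behavior described above.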

Slots are parameters associated with the entity. In the bank balance intent example, the entity is the person’s name. A slot might be the specific account number under the person’s name for which the balance is being sought. If you’re reserving an airline ticket, your seat preferences (window or aisle) are slot values.

Creating intelligent bots using Dialogflow requires developers to consider all the intents the bot is expected to handle and all the different ways users will articulate each intent. Then, for each intent, developers must identify the entities associated with that intent, and any slots related to each entity. If an entity and/or slot of an entity is missing from a query, the bot needs to figure out how to ask the user for it.
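The "ask for what is missing" logic can be sketched in a few lines of Python. This is an illustrative assumption about how such a loop might look, not Dialogflow code: the `REQUIRED_PARAMETERS` table, the `next_prompt` function, and the intent, parameter, and prompt texts are all hypothetical.

```python
# Hypothetical table: for each intent, the values the bot needs and the
# question it should ask when a value is missing. Order matters: the bot
# asks for missing values in the order listed.
REQUIRED_PARAMETERS = {
    "check_weather": {
        "location": "Which city would you like the forecast for?",
    },
    "check_balance": {
        "account_name": "What is the name on the account?",
        "account_number": "Which account number would you like to check?",
    },
}


def next_prompt(intent_name, collected):
    """Return the question for the first missing value, or None if complete.

    `collected` maps parameter names to the values already extracted
    from the conversation so far.
    """
    for parameter, prompt in REQUIRED_PARAMETERS.get(intent_name, {}).items():
        if not collected.get(parameter):
            return prompt
    return None


# The user gave a name but no account number, so the bot asks for it.
follow_up = next_prompt("check_balance", {"account_name": "Pat Lee"})

# Everything is present, so the intent is ready to be fulfilled.
ready = next_prompt(
    "check_balance",
    {"account_name": "Pat Lee", "account_number": "12345"},
)
```

Here `follow_up` holds the account-number question and `ready` is `None`, signaling that the bot has everything it needs to move on to fulfillment.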

5. Once Dialogflow has identified the intent, entities, and slot values, it hands off this information to software code that will fulfill the intent. Fulfilling the intent might include doing a database retrieval to find the information the user is looking for or invoking some kind of API to a backend or cloud-based system. For example, if the user asks for banking information, this code will interface to a banking application. If the intent is for HR policy information, the code triggers a database search to retrieve the requested information.

6. Once it is retrieved, the necessary information is passed back through Dialogflow and returned to the user. If the interaction was text-based, a text response is sent back to the user in the same channel that was invoked to send the message. If it was a voice request, the text is converted to speech and a voice responds to the user with the requested information.
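Steps 5 and 6 can be illustrated with a small fulfillment handler. The sketch below assumes the JSON shape used by Dialogflow's v2 webhook format, where the matched intent appears under `queryResult.intent.displayName`, extracted values under `queryResult.parameters`, and the reply is returned as `fulfillmentText`. The `handle_webhook` function, the `check_balance` intent, the `account_number` parameter, and the in-memory `FAKE_BALANCES` table are hypothetical stand-ins for a real banking backend.

```python
# Hypothetical stand-in for a banking system; a real bot would call an API
# or query a database here.
FAKE_BALANCES = {"12345": 250.75}


def handle_webhook(request_body):
    """Build a fulfillment response for one webhook request (a sketch).

    Reads the matched intent and its parameters from the request,
    looks up the requested information, and returns the reply text
    that would be passed back to the user.
    """
    query_result = request_body.get("queryResult", {})
    intent_name = query_result.get("intent", {}).get("displayName", "")
    parameters = query_result.get("parameters", {})

    if intent_name == "check_balance":
        account = str(parameters.get("account_number", ""))
        balance = FAKE_BALANCES.get(account)
        if balance is None:
            text = "I could not find that account."
        else:
            text = f"The balance on account {account} is ${balance:.2f}."
    else:
        text = "Sorry, I can't help with that yet."

    return {"fulfillmentText": text}


# Example request, trimmed to the fields this sketch reads.
example_request = {
    "queryResult": {
        "queryText": "what's my bank balance",
        "intent": {"displayName": "check_balance"},
        "parameters": {"account_number": "12345"},
    }
}
example_response = handle_webhook(example_request)
```

In practice a function like this would sit behind an HTTPS endpoint. For a text conversation the reply goes back over the originating channel, and for a voice conversation it is converted to speech, as described in step 6.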

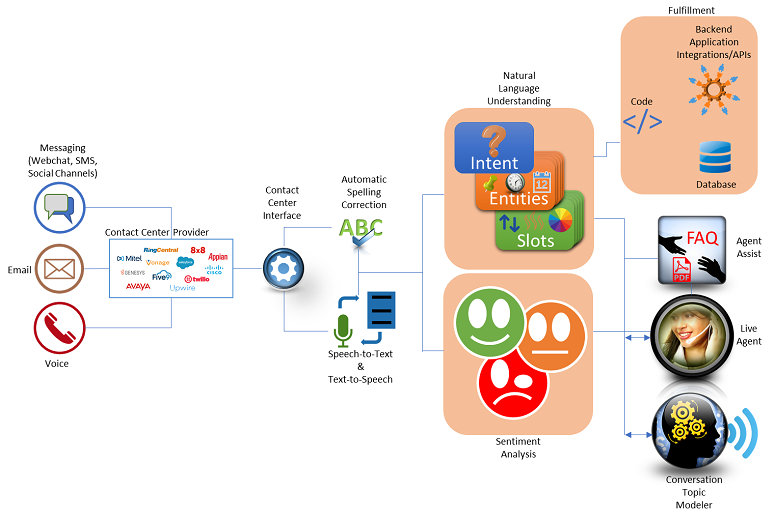

When Dialogflow is integrated as part of CCAI through one of the contact center partners, the flows become a bit more complex but even more powerful.

CCAI adds several notable enhancements:

- Escalation of a query to a contact center agent

- Real-time sentiment analysis -- positive, negative, neutral -- of the text stream that can be surfaced in the agent’s desktop

- Agent Assist, surfacing FAQs, PDFs, Web pages, and other documents to a live agent

- Conversation topic modeling, which helps improve the quality of interactions (meaning that more inquiries are handled by the intelligent bot) and can detect whether intents are changing over time, so that additional work can be done to keep the bot current
