Conversational AI lets humans talk to computers through a website chatbot, a voice assistant, or another messaging-enabled interface. The technology is evolving constantly, helping industries from healthcare to retail to financial services automate customer support and offer supplemental services. As it advances to communicate with humans through facial expressions and contextual awareness, conversational AI is finding even more use cases.

Contact centers are among the biggest users of conversational AI, because the technology lets them give consumers an experience similar to talking or chatting with a human, even though they’re conversing with a machine. Used correctly, it can take a tremendous load off human agents. Contact centers typically use conversational AI for high-frequency, low-complexity interactions, such as requests for store hours or password resets. The COVID-19 pandemic has flooded many contact centers with inbound calls and put pressure on organizations to accelerate their conversational AI plans.

Market Momentum

The global conversational AI platform market could exceed $17 billion by 2025, growing at a compound annual growth rate (CAGR) of approximately 30% from 2020 to 2025, according to a forecast published by Adroit Market Research in February, before the mass COVID-19 shutdowns. According to the same forecast, North America led the global conversational AI platform market in 2019 and is expected to dominate it from 2020 to 2025.
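As a rough sanity check on the forecast, the standard compound-growth relationship, final value = starting value × (1 + rate)^years, can be solved for the 2020 market size the two quoted figures imply. The minimal sketch below does that arithmetic. It assumes exactly 30% growth over five full years, whereas the forecast itself says "approximately", so the result is only an estimate. The function names `implied_base` and `cagr` are illustrative, not taken from the report.

```python
def implied_base(final_value: float, rate: float, years: int) -> float:
    """Solve final = base * (1 + rate) ** years for the starting value."""
    return final_value / (1 + rate) ** years


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Quoted figures: about $17B by 2025, ~30% CAGR from 2020 (assumed exact here).
    base_2020 = implied_base(17.0, 0.30, 5)
    print(f"Implied 2020 market size: ${base_2020:.2f}B")
    # Round trip: growing the implied base back to $17B recovers the 30% rate.
    print(f"Recovered CAGR: {cagr(base_2020, 17.0, 5):.1%}")
```

Under these assumptions the forecast implies a 2020 market of roughly $4.6 billion, so the market would grow by a factor of about 3.7 over the five years.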

COVID-19 is likely to push these forecast numbers higher. Conversational AI-based chatbots are providing customer support while call centers can’t operate at full capacity due to social distancing, allowing companies to keep serving their customers without interruption. Some reports indicate that call centers have been able to scale down from 300 to 50 agents with the help of chatbots, which can handle routine customer inquiries as effectively as human agents. Alternatively, organizations can keep their contact center agents in place and use them to provide more personalized customer service. The key point is that conversational AI lets businesses tune their contact centers to whatever support model they want.

COVID-19 has also exposed challenges in industries such as healthcare, creating further demand for conversational AI. From delivering healthcare services to answering pressing questions about the pandemic, the technology is proving invaluable in situations where people can’t be physically present to solve problems.

Jarvis at Your Service

Beyond the current crisis, conversational AI could transform customer relationship management (CRM). Whether in business-to-consumer, business-to-business, or consumer-to-business use cases, the need for human-like interaction coupled with a personalized, data-driven experience is growing.

One of the companies working to enable more data-rich, human-like interactions is Nvidia, known primarily as a maker of graphics processing units (GPUs). Nvidia recently announced Jarvis, a GPU-accelerated application framework that lets companies use video and speech data to build conversational AI services, such as 3D chatbots, customized for their specific industry requirements. As a turnkey solution, Jarvis spares companies from building, training, and refining conversational AI models themselves, the heavy lifting that has often dissuaded them from moving ahead with the technology.

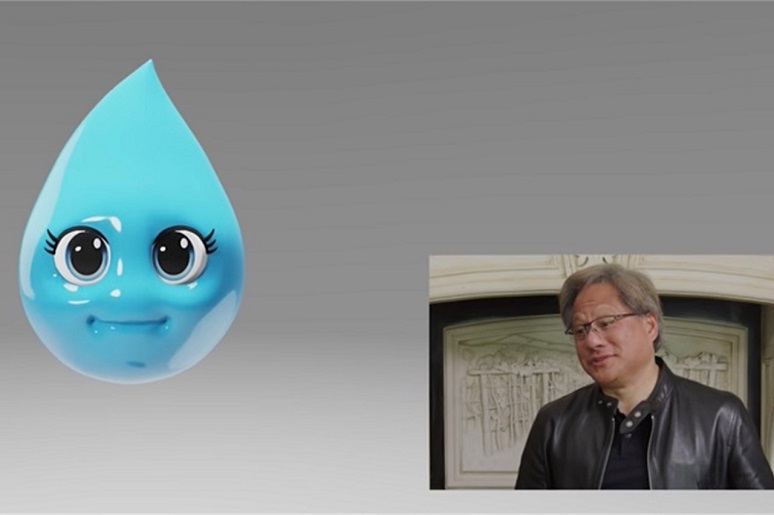

Conversational AI has come a long way, with recent breakthroughs in automatic speech recognition (ASR), natural language understanding (NLU), and text-to-speech (TTS) synthesis. Jarvis makes it possible to build interactive, low-latency conversational AI services that combine models trained on large amounts of data with complex computation and graphics, all generated in just a few hundred milliseconds. All of those elements are necessary for people to feel they are having an interactive conversation with a chatbot like Misty, an animated raindrop with realistic facial expressions that Nvidia CEO Jensen Huang showcased during his virtual keynote at the company’s annual GPU Technology Conference, which was held online this year.
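The services described above chain speech recognition, language understanding, and speech synthesis, and the whole chain has to finish within a few hundred milliseconds to feel conversational. The minimal Python sketch below shows that shape and times each stage against a latency budget. The stage functions (`fake_asr`, `fake_nlu`, `fake_tts`) and `run_turn` are hypothetical placeholders, not Jarvis APIs; a real service would call trained models, typically running on a GPU, in their place. The 300-millisecond default budget is an assumption chosen to match the latency target cited for Jarvis.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple


# Hypothetical stand-ins for trained models; these are NOT Jarvis APIs.
def fake_asr(audio: bytes) -> str:
    """Placeholder speech recognition: treat the 'audio' bytes as UTF-8 text."""
    return audio.decode("utf-8")


def fake_nlu(text: str) -> str:
    """Placeholder intent classifier based on a single keyword rule."""
    return "cancel_appointment" if "cancel" in text.lower() else "book_appointment"


def fake_tts(intent: str) -> bytes:
    """Placeholder synthesis: return reply text as bytes instead of audio."""
    replies = {
        "cancel_appointment": "Your appointment has been cancelled.",
        "book_appointment": "Your appointment is booked.",
    }
    return replies[intent].encode("utf-8")


@dataclass
class TurnResult:
    reply: bytes
    stage_ms: Dict[str, float] = field(default_factory=dict)

    @property
    def total_ms(self) -> float:
        return sum(self.stage_ms.values())


def run_turn(audio: bytes, budget_ms: float = 300.0) -> Tuple[TurnResult, bool]:
    """Run ASR -> NLU -> TTS, timing each stage and checking the total budget."""
    stages: List[Tuple[str, Callable[[Any], Any]]] = [
        ("asr", fake_asr),
        ("nlu", fake_nlu),
        ("tts", fake_tts),
    ]
    result = TurnResult(reply=b"")
    value: Any = audio
    for name, stage in stages:
        start = time.perf_counter()
        value = stage(value)
        result.stage_ms[name] = (time.perf_counter() - start) * 1000.0
    result.reply = value
    return result, result.total_ms <= budget_ms


if __name__ == "__main__":
    result, within_budget = run_turn(b"I need to cancel my appointment")
    print(result.reply.decode("utf-8"))
    print({name: round(ms, 3) for name, ms in result.stage_ms.items()}, within_budget)
```

Timing each stage separately, rather than only the whole turn, shows which part of the pipeline consumes the latency budget, which is where GPU acceleration and inference optimizations would matter most.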

Getting to Misty

Nvidia created Misty with Jarvis, which runs on the company’s new A100 Tensor Core GPU for AI computing and uses optimizations in TensorRT, an Nvidia SDK for high-performance deep learning inference. By fusing vision, audio, and other sensor inputs simultaneously, Misty can hold multi-context conversations with people. Some early adopters of Jarvis have developed apps that can understand the full intent of a customer’s spoken conversation, recognize industry-specific speech, and help customers book and cancel appointments.

Any company can use the Jarvis GPU-accelerated software stack and tools to create, deploy, and run conversational AI apps or services. Instead of building from scratch, developers fine-tune Jarvis models on their own data to build services that respond in under 300 milliseconds, compared with more than 20 seconds on CPU-only platforms.

Developers can customize conversational AI models and train them with Nvidia Neural Modules (NeMo), an open source toolkit. The models can be trained on data (and vocabulary) unique to a product, customer base, or business. Deploying Jarvis then takes a single command that downloads, sets up, and runs the entire application. Alternatively, individual services, such as speech recognition and NLU, can be deployed into Kubernetes clusters as Helm charts, the standard packaging format for Kubernetes applications.

Advancements in conversational AI are making it possible for companies to build custom services across different industries, not just to automate customer support. Huang said he envisions a next-generation videoconferencing app in which multiple people can speak at the same time, with closed captions and a transcript of the conversation generated in real time. Such an app is not far off, Huang said.