In my Decoding Dialogflow series on intelligent virtual assistants (IVAs), I have occasionally referenced the technical solutions created by Interactions, a conversational AI company that leads the market in the development and deployment of commercially successful IVAs. Interactions generates over $100 million in annual revenues through a combination of rich technology, turnkey IVA development services, and success-based pricing. This article details why Interactions’ technology works so well, and it provides a proof point from Constant Contact, an Interactions customer with a high-velocity contact center.

Who Is Interactions?

Interactions was founded in 2004. Since then, the company has grown to 500 employees and, according to Crunchbase, has had 11 funding rounds generating a total of approximately $163 million. In 2014, Interactions acquired the AT&T Watson speech and language engine and its data science and AI support team, to drive a new wave of speech- and text-enabled conversational solutions for enterprises. In this transaction, AT&T received an equity stake in Interactions. (Note: AT&T Watson has no relationship with IBM Watson.)

Combining the AT&T Watson AI technology with its own patented Human Assisted Understanding technology, Interactions has created an advanced cloud-based customer care solution that provides IVAs to mid-sized and large enterprises. Interactions’ platform also can deliver voice biometrics as an add-on to an IVA.

Bespoke IVAs with Success-Based Pricing

Interactions employs conversational AI designers and developers who design, train, deploy, and maintain its clients’ IVAs. Consequently, Interactions clients do not need to employ or contract with any AI specialists or programmers. Rather, Interactions’ Client Success team will help design an optimized customer experience and monitor model performance and customer outcomes.

Success-based pricing is a key differentiator between Interactions and many of its competitors. The company charges a professional services fee for initial IVA development, but once the IVA is deployed, it does not charge clients for ongoing maintenance, platform upgrades, reporting, etc. All of Interactions’ revenue following initial development comes only when an IVA successfully completes a customer engagement task. The nature and granularity of these tasks will vary from industry to industry and from client to client, based on each individual client’s business objectives and desired outcomes. Examples of successfully completed IVA tasks may include things like providing directions, answering questions, qualifying prospects, or obtaining information for a subsequent fulfillment process.
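To make the pricing model concrete, here is a minimal Python sketch of success-based billing. The task names and per-task prices are hypothetical (the article does not disclose Interactions’ rates, which vary by client); the only point it illustrates is that engagements which do not complete a task contribute nothing to the bill.

```python
# Hypothetical task types and per-task prices in dollars; real rates vary by client.
PRICE_PER_COMPLETED_TASK = {
    "qualify_prospect": 1.00,
    "answer_question": 0.25,
    "provide_directions": 0.25,
}


def success_based_charge(engagements: list[tuple[str, bool]]) -> float:
    """Sum the price of every engagement whose task was completed.

    Each engagement is a (task_type, completed) pair; incomplete
    engagements are not billed at all under success-based pricing.
    """
    return sum(
        PRICE_PER_COMPLETED_TASK[task]
        for task, completed in engagements
        if completed
    )


calls = [
    ("qualify_prospect", True),
    ("qualify_prospect", False),  # caller hung up before qualification: no charge
    ("answer_question", True),
]
print(success_based_charge(calls))  # 1.25
```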

How Interactions’ Adaptive Understanding Works

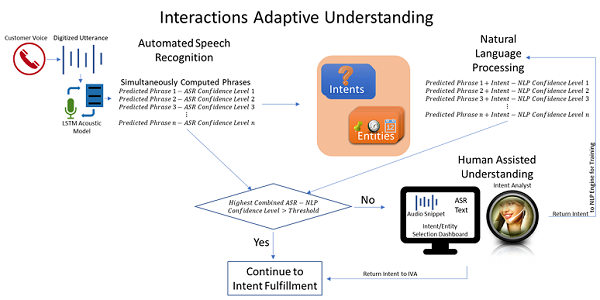

Interactions developed and commercialized a patented and branded technique called Adaptive Understanding. Adaptive Understanding blends conversational AI with human understanding in real time to deliver truly conversational experiences across all voice- and text-based customer care channels. I will describe how Adaptive Understanding works by breaking the IVA process down into four steps.

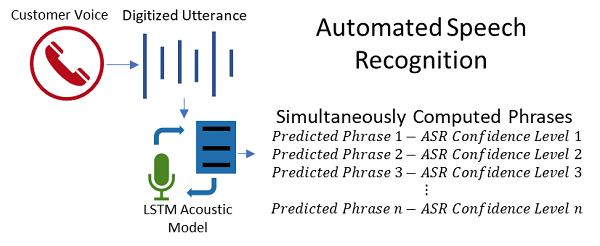

Step 1: Understanding the speech via automated speech recognition (ASR)

When a customer calls into a contact center, the first major AI function that comes into play is automated speech recognition, also called speech-to-text (STT) transcription. ASR applies only to voice calls; it is not needed for text-based customer engagement such as SMS, webchat, and messaging.

The Interactions ASR engine uses neural network-based acoustic modeling techniques, specifically feedforward and long short-term memory (LSTM) networks, to convert speech to text. In machine learning, LSTM is a recurrent neural network architecture that works well for sequential data. Speech is one such sequential data type, because words and phrases at a particular point in an utterance relate to and depend upon what preceded them and what may follow them. In general terms, feedforward layers map short windows of audio to likely speech sounds, while an LSTM’s recurrent connections let the network carry context from earlier in the utterance (and, in bidirectional variants, from later in it), which helps it more accurately predict the text of what a person has said.

The output of the ASR engine is not a single phrase, per se. Rather, it simultaneously generates a list of possible phrases (often called an N-best list), each ranked by a numerical confidence level that indicates how well the ASR believes it has converted the speech to text.
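A ranked list of candidate transcriptions can be pictured as a simple data structure. The sketch below is hypothetical: the `AsrHypothesis` class, the example utterances, and the confidence values are illustrative only and are not Interactions’ actual output format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AsrHypothesis:
    """One candidate transcription from an ASR engine (hypothetical structure)."""
    text: str
    confidence: float  # assumed to lie in [0.0, 1.0]


def rank_hypotheses(hypotheses: list[AsrHypothesis], n: int = 3) -> list[AsrHypothesis]:
    """Return the n most confident hypotheses, best first (an N-best list)."""
    return sorted(hypotheses, key=lambda h: h.confidence, reverse=True)[:n]


raw = [
    AsrHypothesis("I want to cancel my count", 0.21),
    AsrHypothesis("eye want to cancel my account", 0.05),
    AsrHypothesis("I want to cancel my account", 0.62),
    AsrHypothesis("I want to canceled my account", 0.12),
]

for h in rank_hypotheses(raw):
    print(f"{h.confidence:.2f}  {h.text}")
```

Keeping several hypotheses rather than only the top one is what lets the downstream NLP step recover when the single best transcription is slightly wrong.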

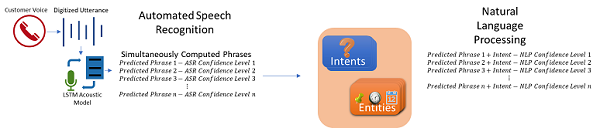

Step 2: Natural language processing

I’ve gone to this level of detail describing how ASR works because the multiple phrases the ASR engine generates are key inputs to the next major IVA functional block: natural language processing (NLP).

As discussed in previous No Jitter articles, IVAs are constructed in part using a series of training phrases associated with the reasons why a person needing customer service may call into the contact center. These training phrases reflect specific customer needs, or “intents.” Customer intents have associated qualifiers, or “entities,” that provide specific information about an intent. An entity, for example, may be a bank account number, a street name, an email address, a date, a time, a list of ingredients, or any other discrete piece of information that qualifies and makes the customer’s intent more specific.
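The relationship between intents, training phrases, and entities can be sketched in Python as follows. The intent names, phrases, and the regex-based entity extractors are hypothetical illustrations; production IVAs typically use trained entity recognizers rather than simple patterns.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Intent:
    """A customer need, its example phrasings, and the entities that qualify it."""
    name: str
    training_phrases: list[str]
    entity_types: list[str] = field(default_factory=list)


# Hypothetical entity extractors: each entity type maps to a simple regex.
ENTITY_PATTERNS = {
    "account_number": re.compile(r"\b\d{8,12}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),
}

INTENTS = [
    Intent("check_balance", ["what is my balance", "how much is in my account"], ["account_number"]),
    Intent("update_email", ["change my email address", "update my email"], ["email"]),
]


def extract_entities(text: str, entity_types: list[str]) -> dict[str, str]:
    """Return the first match for each requested entity type found in the text."""
    found = {}
    for etype in entity_types:
        match = ENTITY_PATTERNS[etype].search(text)
        if match:
            found[etype] = match.group(0)
    return found


print(extract_entities("update my email to jane.doe@example.com", INTENTS[1].entity_types))
```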

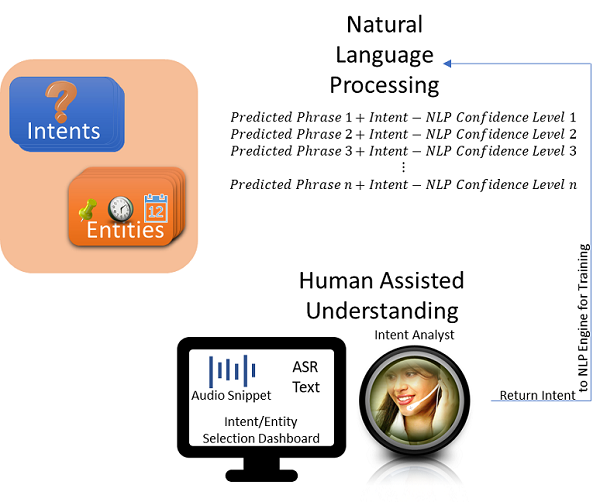

The Interactions ASR generates and sends multiple text phrases simultaneously to the NLP engine. Each phrase represents a possible utterance by the customer. The NLP engine examines each phrase, associates it with one or more possible intents, and gives each phrase-intent pair a confidence level that indicates how strongly it believes the phrase matches a particular intent.
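As a rough illustration of scoring every phrase-intent pair, the sketch below uses token-overlap (Jaccard) similarity against each intent’s training phrases. This is a deliberately simple stand-in for a trained NLP classifier, not Interactions’ method; the intents and phrases are hypothetical.

```python
def tokens(text: str) -> set[str]:
    """Lowercase whitespace tokenization (a simplification)."""
    return set(text.lower().split())


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of tokens two phrases have in common, from 0.0 to 1.0."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical training phrases per intent.
TRAINING_PHRASES = {
    "cancel_account": ["cancel my account", "close my account"],
    "check_balance": ["what is my balance", "check my account balance"],
}


def score_pairs(phrases: list[str]) -> list[tuple[str, str, float]]:
    """Score every (ASR phrase, intent) pair; an intent scores its best-matching example."""
    pairs = []
    for phrase in phrases:
        for intent, examples in TRAINING_PHRASES.items():
            best = max(jaccard(tokens(phrase), tokens(ex)) for ex in examples)
            pairs.append((phrase, intent, round(best, 2)))
    return pairs


for phrase, intent, score in score_pairs(["I want to cancel my account"]):
    print(intent, score)
```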

Step 3: Adaptive Understanding: Seamlessly combining conversational AI with human understanding

When determining a final overall confidence level, the IVA combines the confidence level of a phrase’s text, obtained from the ASR engine, with the confidence level of the intent prediction, obtained from the NLP engine. When these combined ASR/NLP confidence levels for the customer’s utterance surpass a predetermined threshold, the system chooses the intent with the highest confidence level and moves on to fulfilling that intent.
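Here is a minimal sketch of the threshold decision, assuming the two scores are combined by multiplication (treating them as independent probabilities). Both the product rule and the 0.40 threshold are hypothetical; the article does not say how Interactions combines the scores.

```python
from typing import Iterable, Optional

CONFIDENCE_THRESHOLD = 0.40  # hypothetical; real thresholds are tuned per deployment


def choose_intent(
    candidates: Iterable[tuple[float, str, float]],
    threshold: float = CONFIDENCE_THRESHOLD,
) -> Optional[str]:
    """Pick the best intent from (asr_confidence, intent, nlp_confidence) triples.

    Returns None when no combined score surpasses the threshold, which is
    the signal for the IVA's no-match handling to take over.
    """
    best_intent, best_score = None, 0.0
    for asr_conf, intent, nlp_conf in candidates:
        combined = asr_conf * nlp_conf  # assumption: product of the two confidences
        if combined > best_score:
            best_intent, best_score = intent, combined
    return best_intent if best_score > threshold else None


print(choose_intent([
    (0.62, "cancel_account", 0.90),  # combined 0.558
    (0.21, "cancel_account", 0.70),  # combined 0.147
    (0.62, "check_balance", 0.10),   # combined 0.062
]))
```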

In most IVAs, there are four possible outcomes should none of the confidence levels surpass the threshold:

- The IVA can repeat a prompt, possibly using different words, inviting the customer to repeat what they want.

- The IVA can transfer the user to a live agent based on business rules.

- The IVA can simply start over (this is called the fallback intent).

- The IVA may just say it is unable to help the person and disconnect the call (which is a pretty lousy IVA, frankly).
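The choice among these outcomes is usually governed by business rules. The sketch below shows one hypothetical rule set: reprompt a limited number of times, then transfer to an agent if one is available, otherwise fall back and start over. The enum names and the two-reprompt limit are assumptions for illustration.

```python
from enum import Enum


class NoMatchAction(Enum):
    REPROMPT = "reprompt"           # ask again, possibly with different wording
    TRANSFER_TO_AGENT = "transfer"  # hand off to a live agent per business rules
    START_OVER = "fallback"         # restart via a fallback intent
    DISCONNECT = "disconnect"       # the "lousy IVA" option; never chosen below


def no_match_policy(failed_attempts: int, max_reprompts: int = 2,
                    agents_available: bool = True) -> NoMatchAction:
    """Hypothetical rule for when no intent clears the confidence threshold."""
    if failed_attempts < max_reprompts:
        return NoMatchAction.REPROMPT
    if agents_available:
        return NoMatchAction.TRANSFER_TO_AGENT
    return NoMatchAction.START_OVER


print(no_match_policy(0), no_match_policy(2))
```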

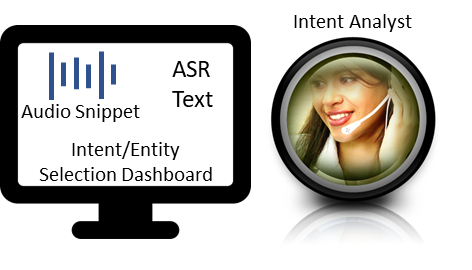

With an Interactions IVA, when the NLP engine is not sufficiently confident that it has identified the right intent, or when it cannot accurately determine the value of a particular entity, the IVA automatically routes a short snippet of audio and the ASR text to an “intent analyst”: a real person who listens to the audio, reviews the text, and determines the caller’s intent in real time.
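The escalation path can be sketched as a function that either accepts the machine’s answer or asks a human and hands the result back to the IVA. The `Snippet` and `Resolution` types and the `ask_analyst` callback are hypothetical; `machine_intent` is assumed to be `None` whenever no intent cleared the confidence threshold.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Snippet:
    audio: bytes   # short audio clip of the caller's utterance
    asr_text: str  # the ASR engine's best transcription


@dataclass
class Resolution:
    intent: str
    source: str  # "machine" or "analyst"


def resolve_intent(snippet: Snippet,
                   machine_intent: Optional[str],
                   ask_analyst: Callable[[Snippet], str]) -> Resolution:
    """Use the model's intent when it is confident; otherwise escalate.

    The analyst never talks to the caller: their answer is simply returned
    to the IVA, which continues executing its business logic.
    """
    if machine_intent is not None:
        return Resolution(machine_intent, "machine")
    return Resolution(ask_analyst(snippet), "analyst")


clip = Snippet(audio=b"", asr_text="I want to cancel my count")
print(resolve_intent(clip, None, lambda s: "cancel_account"))
```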

Intent analysts work in a kind of contact center. As with traditional contact center agents, intent analysts receive conversational snippets based on their skill sets. Skills include the languages the intent analyst understands along with other speech recognition abilities the analyst may have. These may include an aptitude for rapidly identifying certain types of entity information that can be hard for an IVA to get right, such as recognizing numerical digits, proper names, and street or email addresses. Such skills, for example, would be helpful for an IVA designed to gather information about a person’s need for emergency automobile assistance in Italy.
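Skills-based routing of snippets works much like skills-based routing of calls. The sketch below picks the least-busy analyst whose skills cover everything a snippet needs; the skill labels, queue lengths, and selection rule are hypothetical, not a description of Interactions’ router.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Analyst:
    name: str
    skills: frozenset[str]  # e.g., languages and entity specialties
    queue_length: int = 0   # snippets currently waiting for this analyst


def pick_analyst(analysts: list[Analyst], required: set[str]) -> Optional[Analyst]:
    """Return the least-busy analyst whose skills include every required skill."""
    qualified = [a for a in analysts if required <= a.skills]
    if not qualified:
        return None
    return min(qualified, key=lambda a: a.queue_length)


team = [
    Analyst("A", frozenset({"italian", "digits"}), queue_length=3),
    Analyst("B", frozenset({"italian"}), queue_length=0),
    Analyst("C", frozenset({"english", "digits"}), queue_length=0),
]
# An Italian-language snippet that contains a phone number:
print(pick_analyst(team, {"italian", "digits"}).name)
```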

Once the intent analyst determines the correct intent or entity values, they immediately return control to the IVA so that it can continue to execute its business logic. I should point out that intent analysts never engage directly with customers — their responses are always returned directly to the IVA.

Interactions sources its intent analysts from firms around the world, including U.S.-based firms to support client security requirements as necessary.

Step 4: Constantly improving IVA performance

When an intent analyst tags an utterance with the proper intent or, perhaps, identifies an entity value, the tagged data is then leveraged in retraining the IVA’s ASR and NLP machine learning models to advance successful automation in future interactions.
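The feedback loop can be sketched as a buffer that accumulates analyst-tagged utterances and signals when enough new examples exist to retrain. The `TrainingBuffer` class and its batch size are hypothetical; the article does not describe how often Interactions retrains its ASR and NLP models.

```python
from collections import defaultdict


class TrainingBuffer:
    """Hypothetical store of analyst-tagged utterances awaiting retraining."""

    def __init__(self, retrain_every: int = 1000):
        self.retrain_every = retrain_every
        self.examples: dict[str, list[str]] = defaultdict(list)  # intent -> phrases
        self.pending = 0  # tagged examples added since the last retraining batch

    def add(self, asr_text: str, intent: str) -> bool:
        """Store one tagged example; return True when a retraining batch is due."""
        self.examples[intent].append(asr_text)
        self.pending += 1
        if self.pending >= self.retrain_every:
            self.pending = 0
            return True
        return False


buf = TrainingBuffer(retrain_every=2)
print(buf.add("I want to cancel my count", "cancel_account"))  # False
print(buf.add("close out my account", "cancel_account"))       # True
```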

How Interactions Builds Its IVAs So Quickly

Interactions creates an end-to-end customer journey from pre-IVA to IVA to agent. The company has deep experience integrating telephony with back-office applications.

IVA application design focuses on the best way to facilitate productive and efficient conversational dialogs with meaningful outcomes. Interactions has a unique way of building IVAs based on actual customer data. IVA automation starts with generic AI models containing entity information (numbers, dates, etc.) and general-purpose recognition models with large vocabularies that are tuned for the domain using domain-specific words and information. It then deploys the NLP models.

When an Interactions IVA is installed, it will initially route a large number of audio snippets to the intent analysts for tagging and feeding back into the machine learning models. In this way, the IVA model constantly gets better and better at determining the proper intents and the right entity values, lessening the need for an utterance to go to an intent analyst. This process of Adaptive Understanding reduces the number of utterances needed to get to high levels of IVA accuracy and the time required to develop the IVA model.

Interactions asserts that it can typically have an IVA up and running, identifying the right intents with 80% or better accuracy, within 90 days, depending upon call volume. Adaptive Understanding not only speeds the development of an IVA model but also does so without compromising customer experience: intent analysts’ real-time determination of intents builds the model while delivering a truly human-like experience. This is a remarkable feat!

Proof Point

Interactions’ largest customer handled approximately 300 million calls last year using the IVA. I haven’t spoken personally to this customer, but I did have an opportunity to speak with another customer, Constant Contact, a company specializing in email marketing, event management, messaging, and social media marketing. The Interactions IVA handles an average of 40,000 to 50,000 calls for Constant Contact per month, Ben Bauks, senior business systems analyst at Constant Contact, told me.

Constant Contact deployed its IVA in 2016 as a way to qualify prospects before passing callers to live agents. This IVA presently fronts an Avaya contact center. Constant Contact identifies and routes callers to the IVA so that live agents can focus on the more difficult tasks while simultaneously saving the customer’s time. With this IVA, Constant Contact can identify the caller’s intent in the customer’s own words, making it a very effective engagement interface. At last count, Constant Contact’s IVA supports approximately 1,300 intents and entities and has an 80% to 85% success rate for properly authenticating and qualifying callers, Bauks said. With this screening in place, live agents save between 15 and 30 seconds per call, he added.
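To put Bauks’ figures in perspective, the arithmetic below estimates monthly agent time saved. It assumes, as a simplification, that the 15-to-30-second saving applies to every one of the 40,000 to 50,000 monthly calls the IVA handles; if only a subset of calls reaches an agent, the real figure would be lower.

```python
def agent_hours_saved(calls_per_month: int, seconds_saved_per_call: float) -> float:
    """Convert a per-call saving into agent-hours per month."""
    return calls_per_month * seconds_saved_per_call / 3600


low = agent_hours_saved(40_000, 15)   # 600,000 seconds
high = agent_hours_saved(50_000, 30)  # 1,500,000 seconds
print(f"{low:.0f} to {high:.0f} agent-hours per month")
```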

Bauks validates the efficacy of the Interactions IVA by looking at several key metrics. When a person calls into Constant Contact, a record is created via an integration between Interactions and Salesforce. When the live agent gets the record, one of the required boxes the agent must check is whether Interactions got the caller’s intent correct. By using this Salesforce data, Constant Contact can assess how effectively the Interactions IVA captures the reason for the call. This is where the 80% success metric comes from. Another statistic Bauks looks at is “error events,” a statistic provided by Interactions. In 2019, the error event rate was less than 1%, meaning that “the system” performed with better than 99% reliability throughout the entire year.
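Both metrics reduce to simple ratios over call records. In this sketch, `intent_correct` mirrors the agent’s Salesforce checkbox, while `error_event` is a hypothetical per-call flag; the article does not define exactly how Interactions counts error events.

```python
from dataclasses import dataclass


@dataclass
class CallRecord:
    intent_correct: bool  # the checkbox the live agent fills in on the record
    error_event: bool     # hypothetical per-call error flag


def intent_accuracy(records: list[CallRecord]) -> float:
    """Fraction of calls where agents confirmed the IVA captured the right intent."""
    if not records:
        raise ValueError("no call records")
    return sum(r.intent_correct for r in records) / len(records)


def reliability(records: list[CallRecord]) -> float:
    """One minus the error event rate."""
    if not records:
        raise ValueError("no call records")
    return 1 - sum(r.error_event for r in records) / len(records)


sample = [CallRecord(True, False)] * 8 + [CallRecord(False, False)] * 2
print(intent_accuracy(sample), reliability(sample))
```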

Conclusion

Interactions has developed an impressive IVA solution designed to rapidly deliver productive and efficient automated customer engagement. Adaptive Understanding, which combines AI with human understanding, is built into every Interactions IVA. The fact that Interactions’ success-based IVA business generates over $100 million in annual revenue validates that these IVAs are working.

Interactions has a clear lead with respect to revenues in this new world of IVAs that enable more customer self-service while saving money for Interactions clients. Many other companies have entered this burgeoning IVA market and will be covered in subsequent posts. I will also be discussing conversational AI with leading vendors at Enterprise Connect 2020 in a panel session titled “Conversational AI: Using Messaging, Speech, and Chat to Automate Customer Engagement.” The session takes place on Thursday, April 2, at 9:00 a.m. Join me there!
