Can machine learning improve media quality more than the people who develop media algorithms?

Sure it can. Even if engineers don’t believe it yet.

I’ll start with an example from another domain: games. Last year, a computer program did what had seemed unattainable. It beat every human and machine opponent put in front of it at the game of Go. The thing is… it “taught” itself to play the game. As DeepMind explains:

> Previous versions of AlphaGo initially trained on thousands of human amateur and professional games to learn how to play Go. AlphaGo Zero skips this step and learns to play simply by playing games against itself, starting from completely random play.

By classifying each game as “good” (it won that round) or “bad” (it lost that round), it was able to improve over a (very) short period of time.

Can the same be done to optimize real-time communications (RTC)? I think so.

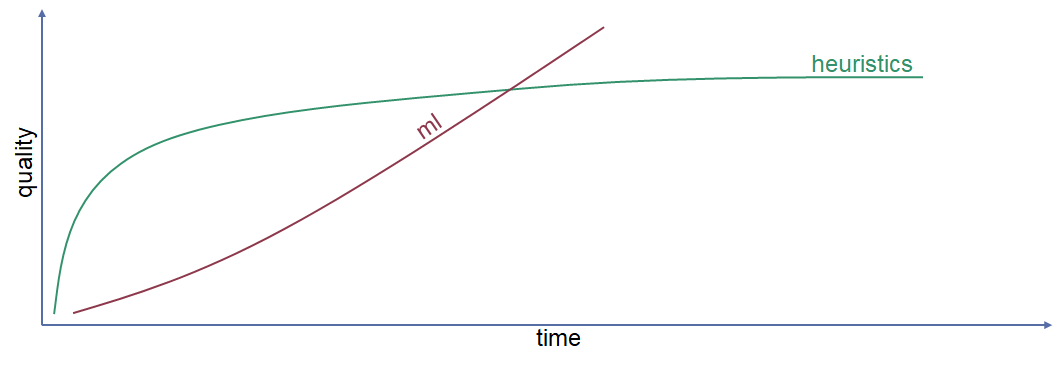

Here’s how I see the world today:

The X axis denotes the passage of time. The Y axis shows the level of quality that we can reach as an industry. We’ve been moving along the green line of heuristics for the last 20 years or more, improving each and every aspect of real-time communications. I remember working on encoder compression heuristics to squeeze more juice from the available bitrate, on bandwidth estimation, on packet loss concealment, and on a slew of other algorithms. I remember reducing the noise of keyboards in a call.

Heuristics means that we have been doing this manually for the last 20 years. A media expert sits down and makes an educated decision about which rules to add to the algorithms in the code to raise media quality. It can be deciding how much bandwidth is available based on the packet loss reported on the network, or which filter to apply to the audio coming from the mic to get rid of background noise. It can be any of a gazillion other decisions. The problem here is that we got so good at doing it manually, with media experts, that we don’t see this as the dead end that it is.
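To make the bandwidth example concrete, here is a minimal sketch of the kind of hand-written rule a media expert might add. Everything in it is an assumption for illustration: the class name `LossBasedEstimator`, the 2% and 10% loss thresholds, the 5% growth step, and the bitrate limits are hypothetical values, not taken from any particular product or codec.

```python
from dataclasses import dataclass


@dataclass
class LossBasedEstimator:
    """Hypothetical hand-tuned rule: adjust a bitrate estimate from reported packet loss."""

    estimate_bps: float = 1_000_000.0  # assumed starting estimate
    min_bps: float = 100_000.0         # assumed floor
    max_bps: float = 5_000_000.0       # assumed ceiling
    low_loss: float = 0.02             # below this, probe for more bandwidth
    high_loss: float = 0.10            # above this, back off
    increase_factor: float = 1.05      # grow by 5% when the network looks clean

    def update(self, loss_fraction: float) -> float:
        """Apply one loss report (0.0 to 1.0) and return the new estimate."""
        if not 0.0 <= loss_fraction <= 1.0:
            raise ValueError("loss_fraction must be between 0 and 1")
        if loss_fraction > self.high_loss:
            # Heavy loss: cut the estimate in proportion to the loss seen.
            self.estimate_bps *= 1.0 - 0.5 * loss_fraction
        elif loss_fraction < self.low_loss:
            # Little or no loss: cautiously increase.
            self.estimate_bps *= self.increase_factor
        # Between the two thresholds the estimate is held as-is.
        self.estimate_bps = min(self.max_bps, max(self.min_bps, self.estimate_bps))
        return self.estimate_bps


if __name__ == "__main__":
    estimator = LossBasedEstimator()
    for loss in (0.0, 0.05, 0.2):
        print(f"loss={loss:.0%} -> estimate={estimator.update(loss):,.0f} bps")
```

Every constant in this sketch is a judgment call someone made by hand and then tuned by trial and error. That is the manual work the heuristics line stands for.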

In recent years, a new trend has emerged: machine learning. That’s the red line. Vendors are looking to use machine learning to build models they can use instead of heuristics. The first place we see it today is noise suppression, but we’ll be seeing it everywhere.

The main challenge here is that this isn’t thrilling. The value it brings is quality, which is subjective in nature and hard to quantify. It doesn’t add new features, such as silly hats with computer vision or automated speech interactions with voicebots such as Amazon Alexa (as we’ve written about previously in this series).

That said, using machine learning to optimize media quality is going to be critical for vendors moving forward. That’s because when machine learning algorithms improve quality enough, quality will become a differentiator rather than another checkmark in a long list of features. If you can handle a video call at twice the resolution over the same network and devices, you win. If you can deliver an audible and understandable conversation over a choppy network while your competitors can’t, you win.

Want to see more on real-time communications quality optimization using machine learning? Interested in how machine learning, computer vision, and voicebots fit into your strategy? Check out our report on AI in real-time communications or visit our AI in RTC event in San Francisco on Nov. 16. And see our earlier posts in this ongoing series: